By guillaume blaquiere. Nov 26, 2022

In this article, the term “App Engine” refers only to the App Engine Standard version.

All the cloud providers offer serverless services. Serverless is wonderful! You have no servers to set up, install, manage or provision. You simply use the services and pay as you use them.

On Google Cloud, there are many serverless services. In the serverless compute domain, there are similarities between Cloud Run and App Engine. And that obviously leads to the question of which one to choose for a given use case.

I previously compared Cloud Run with Cloud Functions, in terms of cost and features, and it totally makes sense to ask the same question for Cloud Run and App Engine.

So, let’s compare Cloud Run with App Engine.

The product similarities

As I said in the introduction, the 2 products are similar in many areas (non-exhaustive list):

- Scalability to 0

- Min and max instances

- Versioning and traffic splitting

- IAM and access control

- Customer-managed runtime service account

The cost and some additional features are the key differentiators. Let’s dive in.

App Engine: the weight of the legacy

App Engine is the oldest service of Google Cloud, released in 2008, and maybe the root of many other services such as Cloud Functions, Compute Engine or Cloud Run.

I interviewed Wesley Chun, Developer Advocate at Google Cloud, and he explained that story well.

The pricing model, in automatic scaling mode (the most similar to Cloud Run behavior), is the following:

- Instance type F1 (0.25 vCPU, 256 MB of memory): $0.05 per hour

- The instance is billed until 15 minutes after the last processed request

- There are 28 free F1-equivalent instance hours per day (and per project)

To be comparable to Cloud Run, we will use the F4 instance type, therefore 1 vCPU and 1 GB of memory. With the F4 instance type, 4 times more CPU leads to a free tier 4 times shorter, so 7 hours per day.
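The F4 figures used in the rest of the comparison follow from scaling the F1 numbers. Here is a minimal sketch, assuming that one F4 instance-hour is counted as four F1 instance-hours for both the price and the free quota (the variable names are illustrative only):

```python
# Assumption: an F4 instance-hour counts as 4 F1 instance-hours,
# both for the price and for the daily free quota.
F1_PRICE_PER_HOUR = 0.05      # USD, from the list above
F1_FREE_HOURS_PER_DAY = 28    # free F1 instance-hours per day and per project
F4_MULTIPLIER = 4             # F4 = 4 x the F1 resources

f4_price_per_hour = F1_PRICE_PER_HOUR * F4_MULTIPLIER
f4_free_hours_per_day = F1_FREE_HOURS_PER_DAY / F4_MULTIPLIER

print(f"F4 price: ${f4_price_per_hour:.2f} per hour")
print(f"F4 free tier: {f4_free_hours_per_day:.0f} hours per day")
```

Under that assumption, a single F4 instance costs $0.20 per hour once its 7 free hours of the day are consumed.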

Cloud Run: The new age of pricing model

Cloud Run is much more modern: released in 2019, it has more granular pricing. The main difference is the CPU allocation, which is tied to request handling: you pay for the resources only while a request is being processed, and nothing afterwards, even if Google Cloud keeps your instance warm.

The “Per request” pricing model is the following:

- 1 vCPU cost: $0.0864 per hour

- 1 GB of memory cost: $0.009 per hour

- The billed time is rounded up to the next 100 ms after the last processed request

- The free tier applies at the billing account level. To stay in the worst case, I will ignore it in the comparison.
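To make the 100 ms rounding concrete, here is a minimal sketch of the cost of one isolated request on a 1 vCPU / 1 GB instance. The function names are hypothetical, and the sketch ignores the per-request fee and concurrent requests, which the comparison leaves out as well:

```python
VCPU_PRICE_PER_HOUR = 0.0864    # USD, 1 vCPU, "Per request" option
MEMORY_PRICE_PER_HOUR = 0.009   # USD, 1 GB of memory, "Per request" option
MS_PER_HOUR = 3_600_000


def billed_milliseconds(request_ms: int) -> int:
    """Round the request duration up to the next 100 ms step."""
    return ((request_ms + 99) // 100) * 100


def request_cost(request_ms: int) -> float:
    """Cost in USD of one isolated request (assumption: no concurrency)."""
    hourly_rate = VCPU_PRICE_PER_HOUR + MEMORY_PRICE_PER_HOUR
    return billed_milliseconds(request_ms) / MS_PER_HOUR * hourly_rate


print(billed_milliseconds(130))      # a 130 ms request is billed as 200 ms
print(f"{request_cost(130):.10f}")   # cost in USD of that request
```

A full hour of continuous processing comes to $0.0954 with these rates, which is the hourly figure to compare with the App Engine instance price.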

Always ON CPU allocation

Cloud Run offers different CPU allocation options. One of them is “Always ON”.

Usually, when Cloud Run finishes processing a request, the CPU is throttled (below 5%) and it’s impossible to process anything on the instance.

The “Always ON” option removes the CPU throttling when no request is processed and so, you can continue to perform processing on the instance (background process, async operations,…).

Of course, because the CPU is still allocated to your service, you will pay for it, and you pay until the instance shuts down (15 minutes after the last processed request).

This behavior is the same as App Engine CPU allocation and it will be interesting to also compare the cost with this option.

The “Always ON” pricing model is the following:

- 1 vCPU cost: $0.0648 per hour

- 1 GB of memory cost: $0.007 per hour

- The instance is billed until 15 minutes after the last processed request

- The free tier applies at the billing account level. To stay in the worst case, I will ignore it in the comparison.

Pricing model comparison

Comparing the pricing models is not straightforward. There are 2 major differences:

- The billable time after the last processed request on the instance

- The free tier (ignored for Cloud Run)

I ignore the “volume of requests” and “egress” costs for the 2 products.

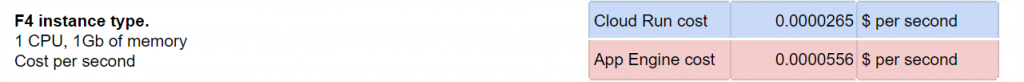

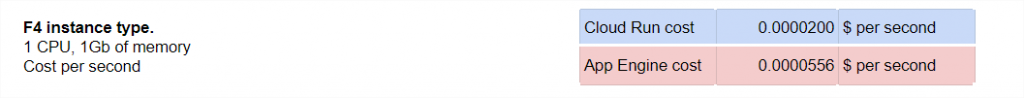

However, a raw comparison between the 2 products already highlights a first difference:

- “Per Request” option

Cloud Run is about 2 times less expensive for the same amount of resources!

- “Always ON” option

Cloud Run costs roughly a third of the App Engine price for the same amount of resources!
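These ratios can be checked from the hourly prices listed earlier. A quick sketch, assuming 1 vCPU and 1 GB on Cloud Run and an F4 instance billed as four F1 instances on App Engine:

```python
APP_ENGINE_F4_PER_HOUR = 4 * 0.05                # assumption: F4 = 4 x F1 price
CLOUD_RUN_PER_REQUEST_PER_HOUR = 0.0864 + 0.009  # 1 vCPU + 1 GB
CLOUD_RUN_ALWAYS_ON_PER_HOUR = 0.0648 + 0.007    # 1 vCPU + 1 GB

per_request_ratio = APP_ENGINE_F4_PER_HOUR / CLOUD_RUN_PER_REQUEST_PER_HOUR
always_on_ratio = APP_ENGINE_F4_PER_HOUR / CLOUD_RUN_ALWAYS_ON_PER_HOUR

print(f"App Engine / Cloud Run (per request): {per_request_ratio:.2f}x")
print(f"App Engine / Cloud Run (always on):   {always_on_ratio:.2f}x")
```

With these inputs, App Engine comes out about 2.1 times more expensive than the “Per request” option and about 2.8 times more expensive than the “Always ON” option, before the billable time and the free tier are taken into account.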

Theoretical scenario

The cost is based on the used resources (size of CPU and memory), but also on the usage duration. That’s why an “in action” scenario is needed to assess the real cost difference between the 2 products in use.

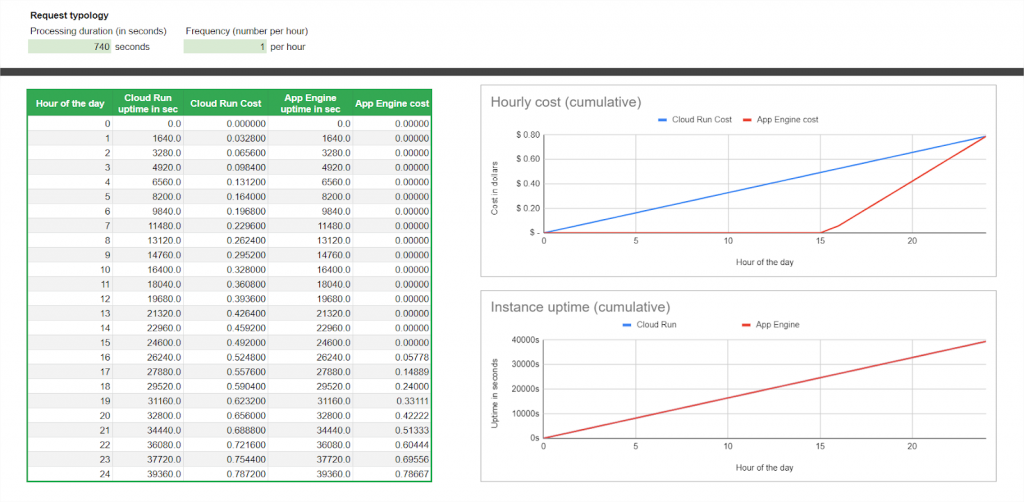

I propose to compare the cost of only one instance, which receives periodic requests (the frequency can change, but they are evenly distributed over 1 hour) with a defined request processing duration.

I modeled that in this Google Sheet, with the cost per hour over the day and the cost per day over the month (both are cumulative).

You can find 2 sheets, one for each option:

- The “Per request” option; the default Cloud Run behavior

- The “Always ON” option; with CPU throttling disabled

Duplicate it in your account to update the values and perform your own simulation.

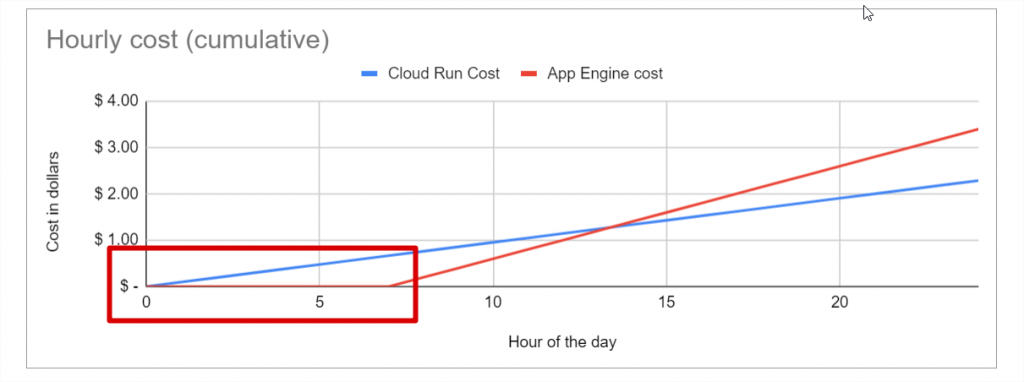

The free tier advantage

Whatever the option, the clear advantage of App Engine is the free tier. The first 7 hours of the day are always free (in the case of 1 instance). See the flat line at the beginning of the day.

A free tier also exists on Cloud Run, but I chose to ignore it because it applies per billing account (and is shared across several projects), not per project.

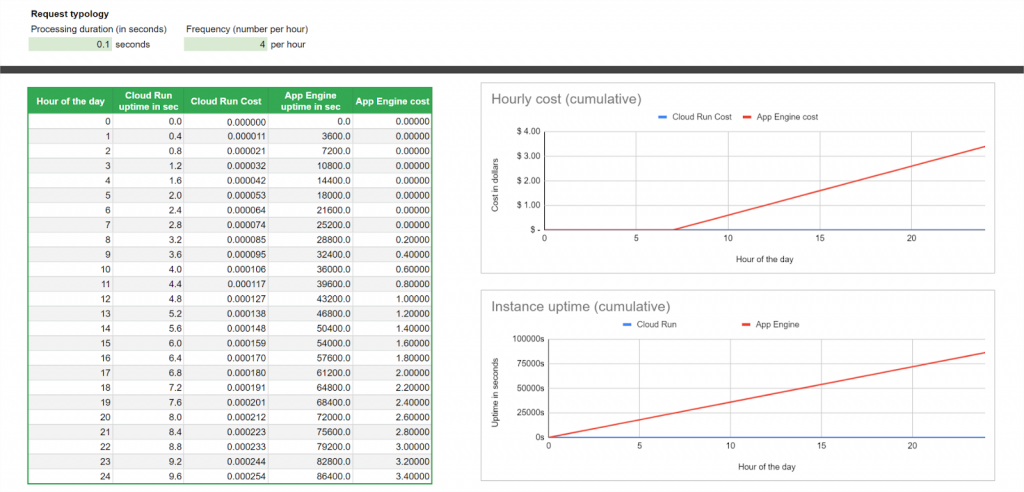

The “Per Request” option pricing model

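However, the weight of the legacy model, and especially the 15 minutes during which you keep paying for an unused instance, totally changes the game. App Engine has a severe disadvantage when requests are sparse.

The worst case is 1 request of 100 ms every 15 minutes (4 per hour): each request restarts the 15-minute billing window, so App Engine bills the full hour for 0.4 seconds of actual processing.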

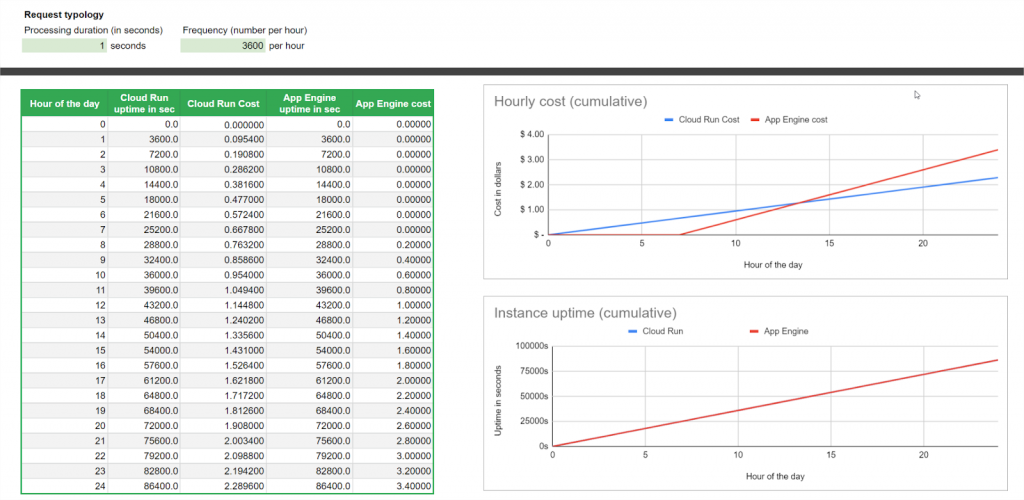

The cost difference is less visible as the number of requests and/or the request processing duration increases. In fact, this covers all the cases that reduce the time during which the App Engine instance is billed “for nothing”.

In the best case for App Engine, we can have a request processing duration of 1s and 3600 requests per hour, therefore a full usage of the instance with no waste.

But even in that case, and with the help of the free tier, App Engine is still almost 50% more expensive than Cloud Run.
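The two extreme cases can be reproduced with a simplified model of the scenario. This is only a sketch under stated assumptions: one instance, evenly spaced requests, App Engine billed until 15 minutes after each request (capped by the arrival of the next one), 7 free F4 hours per day, no Cloud Run free tier, and durations that are already multiples of 100 ms. The function names are hypothetical and the spreadsheet may model some details differently.

```python
APP_ENGINE_F4_PER_HOUR = 0.20         # assumption: F4 = 4 x F1 price
APP_ENGINE_FREE_HOURS_PER_DAY = 7     # F4-equivalent free tier
APP_ENGINE_IDLE_SECONDS = 15 * 60     # billed idle time after a request
CLOUD_RUN_PER_HOUR = 0.0864 + 0.009   # 1 vCPU + 1 GB, "Per request" option


def app_engine_daily_cost(requests_per_hour: int, duration_s: float) -> float:
    """Daily cost in USD of one App Engine F4 instance."""
    interval_s = 3600 / requests_per_hour
    # The idle billing window is cut short when the next request arrives.
    billed_per_request_s = min(interval_s, duration_s + APP_ENGINE_IDLE_SECONDS)
    billed_hours = 24 * requests_per_hour * billed_per_request_s / 3600
    paid_hours = max(0.0, billed_hours - APP_ENGINE_FREE_HOURS_PER_DAY)
    return paid_hours * APP_ENGINE_F4_PER_HOUR


def cloud_run_daily_cost(requests_per_hour: int, duration_s: float) -> float:
    """Daily cost in USD of one Cloud Run instance, billed per request."""
    interval_s = 3600 / requests_per_hour
    billed_per_request_s = min(interval_s, duration_s)
    billed_hours = 24 * requests_per_hour * billed_per_request_s / 3600
    return billed_hours * CLOUD_RUN_PER_HOUR


for label, rate, duration in [("worst case", 4, 0.1), ("best case", 3600, 1.0)]:
    app_engine = app_engine_daily_cost(rate, duration)
    cloud_run = cloud_run_daily_cost(rate, duration)
    print(f"{label}: App Engine ${app_engine:.4f}/day, Cloud Run ${cloud_run:.4f}/day")
```

In both cases the App Engine instance is billed 24 hours a day, 17 of them paid ($3.40). Cloud Run stays below a tenth of a cent per day in the worst case and reaches about $2.29 in the best case, which is where the “almost 50% more expensive” figure comes from.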

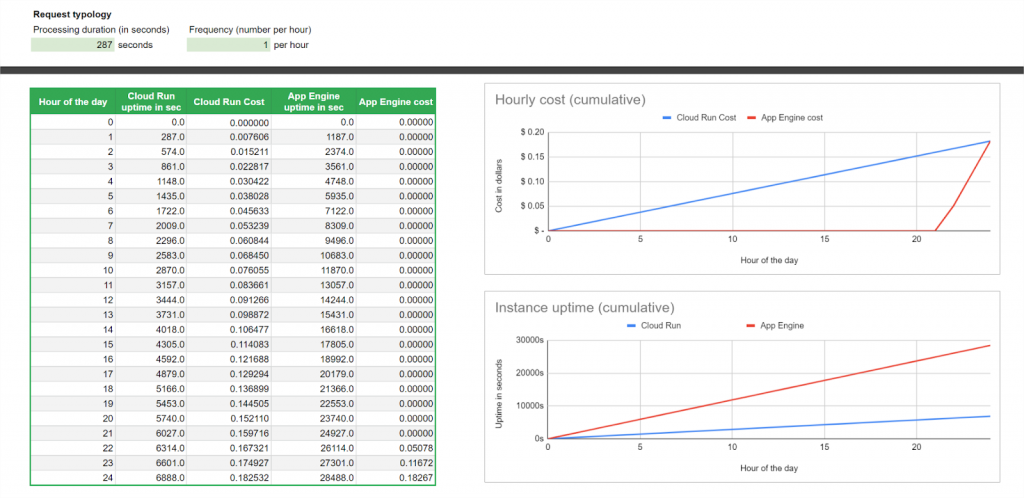

The real advantage of App Engine is when the traffic is very sparse and the usage stays within the free tier. With Cloud Run, because I chose to ignore the free tier (which is shared between all the projects of the billing account), you start to pay, a little, from the first request.

The inflexion point is around 1 request per hour with a processing duration of 287 seconds (about 5 minutes)

That can perfectly fit an hourly process use case!!
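The inflexion point can be recovered by equating the daily costs of the two products. The sketch below assumes one request per hour, 15 minutes of billed idle time on App Engine after each request, 7 free F4 hours per day, and no free tier on Cloud Run. The names are illustrative and the spreadsheet may differ in the details.

```python
APP_ENGINE_PER_HOUR = 0.20            # assumption: F4 = 4 x F1 price
CLOUD_RUN_PER_HOUR = 0.0864 + 0.009   # 1 vCPU + 1 GB, "Per request" option
FREE_SECONDS_PER_DAY = 7 * 3600       # App Engine F4-equivalent free tier
IDLE_SECONDS = 15 * 60                # App Engine billed idle time
RUNS_PER_DAY = 24                     # one request per hour


def app_engine_daily_cost(duration_s: float) -> float:
    billed_s = RUNS_PER_DAY * (duration_s + IDLE_SECONDS)
    return max(0.0, billed_s - FREE_SECONDS_PER_DAY) / 3600 * APP_ENGINE_PER_HOUR


def cloud_run_daily_cost(duration_s: float) -> float:
    return RUNS_PER_DAY * duration_s / 3600 * CLOUD_RUN_PER_HOUR


# Free tier left once the 24 idle windows of the day are absorbed.
free_left_s = FREE_SECONDS_PER_DAY - RUNS_PER_DAY * IDLE_SECONDS

# Solving app_engine_daily_cost(d) == cloud_run_daily_cost(d) for d.
break_even_s = free_left_s * APP_ENGINE_PER_HOUR / (
    RUNS_PER_DAY * (APP_ENGINE_PER_HOUR - CLOUD_RUN_PER_HOUR)
)

print(f"Break-even processing duration: {break_even_s:.0f} s")
```

Below that duration App Engine is the cheaper option under these assumptions, and above it Cloud Run wins. The result is about 287 seconds, matching the figure quoted above.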

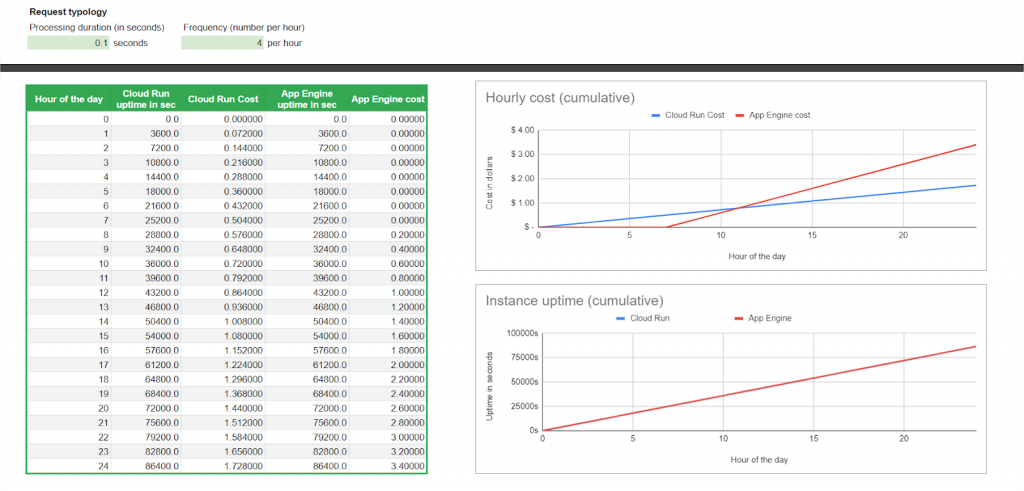

The “Always ON” option pricing model

To be closer to the App Engine behavior, the “Always ON” CPU allocation option is the solution to continue to perform processing outside of a request context.

In that case, the CPU remains allocated to the instance and is billed for up to 15 minutes after the last request, as with App Engine.

This time, the uptime of the 2 services is the same (because of the same behavior). Therefore, only the raw cost and the free tier make the difference.

Here is a 100% uptime example. The raw cost is still to Cloud Run’s advantage.

As for the free tier, the advantage still goes to App Engine. This time, the (theoretical) inflexion point is around 1 request per hour with a processing duration of 740 seconds (about 12 minutes and 20 seconds).

It’s totally theoretical because the max timeout of App Engine is 10 minutes for an HTTP request (60 minutes for Cloud Run).
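The same reasoning can be checked numerically for the “Always ON” option. In this sketch both products bill the processing time plus 15 idle minutes, for one request per hour, and only App Engine gets its 7 free hours per day. It is a simplified model with illustrative names, so the result only approximates the spreadsheet.

```python
APP_ENGINE_PER_HOUR = 0.20                     # assumption: F4 = 4 x F1 price
CLOUD_RUN_ALWAYS_ON_PER_HOUR = 0.0648 + 0.007  # 1 vCPU + 1 GB, "Always ON"
FREE_SECONDS_PER_DAY = 7 * 3600                # App Engine free tier only
IDLE_SECONDS = 15 * 60                         # billed idle time, both products


def daily_billed_seconds(duration_s: int) -> int:
    """Billed seconds per day with one request per hour."""
    return 24 * (duration_s + IDLE_SECONDS)


def app_engine_daily_cost(duration_s: int) -> float:
    paid_s = max(0, daily_billed_seconds(duration_s) - FREE_SECONDS_PER_DAY)
    return paid_s / 3600 * APP_ENGINE_PER_HOUR


def cloud_run_daily_cost(duration_s: int) -> float:
    return daily_billed_seconds(duration_s) / 3600 * CLOUD_RUN_ALWAYS_ON_PER_HOUR


# First whole second at which App Engine stops being the cheaper option.
# The scan stops at 2700 s so that duration + idle time fits in one hour.
break_even_s = next(
    d for d in range(0, 2701)
    if app_engine_daily_cost(d) >= cloud_run_daily_cost(d)
)

print(f"Break-even processing duration: {break_even_s} s")
```

This model places the inflexion point at 739 seconds, in the same range as the roughly 740 seconds quoted above and beyond the 10-minute App Engine timeout.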

The legacy advantage

However, quickly concluding that App Engine is out of the game would be wrong!! Indeed, I haven’t yet mentioned a really cool feature of App Engine that Cloud Run doesn’t have.

You can serve static files for free with App Engine. With Cloud Run, you have to build a container with NGINX (or similar) to serve the static files, and therefore consume CPU and memory.

In addition to static file serving, App Engine also offers a much more granular configuration to control and customize the autoscaler (latency, CPU, throughput, idle instances).

I don’t know if you need that level of configuration. I personally like the “idle instance” config and I miss this feature on Cloud Run.

Going beyond the cost

As I said, the products are similar in many core features. However, Cloud Run is more modern and doesn’t suffer from the weight of the legacy. As a result, it evolves faster and more easily in many interesting areas.

Recent great additions have been made to App Engine, like egress control and user-managed service account usage. They helped App Engine stay in the race, but came months after they were added to Cloud Run.

Multi region capability

App Engine is tied to one region. Worse, if you set up App Engine in the wrong region, you can’t change it.

Only solution: delete your project and create a new one!!

On the other hand, Cloud Run supports multi-region deployment: coupled with an HTTPS load balancer, the Cloud Run service closest to the client serves the request.

Simply use the same service name in different regions, and the routing is automatic!

CUD and cost optimisation

Another interesting option: if you are an intensive user of Cloud Run, you can commit to a usage level per region across all your Cloud Run services and get a committed use discount (CUD).

You even have a recommender that helps you to make the right Cloud Run CUD choice to optimize your billing!

Resource allocation

Because one size does not fit all, customization is paramount. App Engine offers 4–5 instance classes according to the scaling option chosen, and the power of the CPU determines the memory size (and vice versa).

Cloud Run is more convenient: you can size the vCPU (0.25 -> 8) and the memory (128 MB -> 32 GB) independently, and precisely adapt the instance size to your requirements, with less waste of resources.

Ingress control

Ingress control lets us take the service off the Internet. Of course, the URL is still public, but the origin of the traffic is controlled. When the ingress parameter is set to internal, only the requests coming from the project and the VPC SC perimeter are allowed; all the others are rejected.

Cloud Run and Cloud Functions implement this feature; App Engine does not yet.

And many more…

I won’t describe all the differences, but if you want to dive deeper into the other cool features of Cloud Run, here are the most interesting ones for me:

- HTTP request timeout

- Mounting local storage (GCS or Filestore)

- Secret Manager integration

- HTTP/2 support

- Portability and Knative compatibility

- Eventarc integration (supports 125+ services as event sources)

Developer freedom

Serverless products, like Cloud Functions, App Engine and competing solutions, have always been synonymous with restrictions: runtime environment, supported languages, embedded libraries,…

For instance, App Engine supports a subset of versions for 6 languages.

On the other hand, Cloud Run deploys containers and, at the cost of a little effort (to configure and build the container), the developers take control of the execution runtime, the installed libraries and binaries, and even the language used, as long as the container runtime contract is respected.

Road to the future

Despite all the great advantages (and my love), Cloud Run is not the solution for all the use cases.

Hourly/daily processing use cases fit App Engine perfectly. In addition, one of my recommended patterns for website hosting is:

- Hosting the static files on App Engine, for free

- Hosting the dynamic code (API, batches) on Cloud Run

- Set up a Load Balancer in front of the serverless services to route the traffic to the corresponding backend. Add CDN, Cloud Run multi-region deployment and Cloud Armor to take your website to the next level.

For all the other use cases, I can only recommend Cloud Run. For developers, its modern architecture, features and freedom mean more innovation, faster development, fewer constraints, a highly customizable environment and therefore more value in the end.

…Even beyond the raw cost!

I would like to thank Steren for his review and the idea of that article.

The original article was published on Medium.