By Keven Pinto. Aug 12, 2022

Dataflow Flex Templates are a great way to package and distribute your Dataflow pipeline. That said, many engineers implementing Flex Templates for the first time face quite a few challenges. This tutorial and the accompanying code should (hopefully) give you a standard way to deploy and test your pipelines.

Caveat lector! This is not a blog on when to use a Flex Template or on its advantages; for that, allow me to point you to this excellent blog written by two Google engineers.

The main focus of this tutorial is testing the pipeline container to ensure it is fit for production. However, explaining the testing approach without first building a template did not seem like an effective approach. So let’s begin!

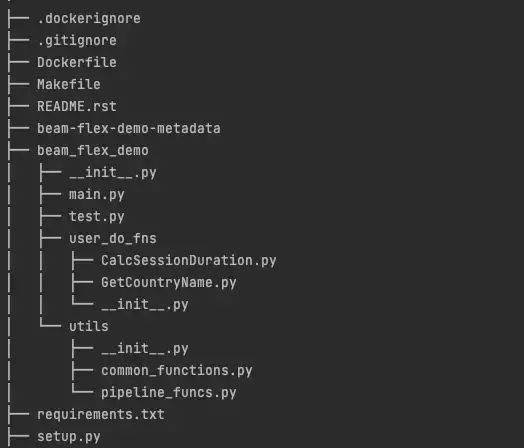

Project Layout

Let us start by downloading the code from here. I’d suggest you download and run this in your Cloud Shell (you will need a GCP project) so that you do not face any OS challenges.

- Dockerfile : This is the spec for the container in which our pipeline code gets packaged. It uses Google’s Dataflow Flex Template base images.

- .dockerignore : Controls which files and folders get copied to the container

- Makefile : This contains all the targets to automate build, test and run. This file is our friend!

- main.py : This is your driver program, the main entry point to your pipeline

- user_do_fns : This package contains all business logic for user-defined DoFns

- utils : A package of common routines invoked in the pipeline via beam.Map()

- setup.py : A standard Python setup file that allows this pipeline to be deployed as a package on the container

- requirements.txt : Add any additional packages that need to be installed on the container here, or leave it blank like we have

- beam_flex_demo : This directory contains all the pipeline code

- beam-flex-demo-metadata: This JSON file contains metadata about the pipeline and validation rules for its parameters. For more info refer to this.
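To make the metadata file a little less abstract, here is a minimal sketch of the kind of content it holds, plus a small check that mimics how a parameter's regexes can validate a value. The parameter name `output` matches the flag used later in this tutorial, but the description, label, help text and regex are illustrative assumptions and not the contents of the repo's file. The helper `validate_parameters` is hypothetical too.

```python
import json
import re

# Illustrative metadata in the shape used by Flex Template metadata files.
# The concrete values are assumptions, not copied from the repo.
metadata = {
    "name": "beam-flex-demo",
    "description": "Generates sample data and writes it to GCS",
    "parameters": [
        {
            "name": "output",
            "label": "Output location",
            "helpText": "GCS path prefix for the output files",
            "regexes": [r"^gs://.+$"],
            "isOptional": False,
        }
    ],
}


def validate_parameters(metadata: dict, supplied: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for param in metadata["parameters"]:
        name = param["name"]
        if name not in supplied:
            if not param.get("isOptional", False):
                problems.append(f"missing required parameter: {name}")
            continue
        for pattern in param.get("regexes", []):
            if not re.fullmatch(pattern, supplied[name]):
                problems.append(f"{name}={supplied[name]!r} does not match {pattern}")
    return problems


print(json.dumps(metadata, indent=2))
print(validate_parameters(metadata, {"output": "gs://my-bucket/out/run"}))  # []
print(validate_parameters(metadata, {"output": "/tmp/out"}))  # one problem reported
```

Running a check like this locally is only a convenience; the authoritative validation is whatever Dataflow applies when the template is launched.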

A bit about the Pipeline

The pipeline generates some data itself and writes it to a GCS bucket. The name of the GCS bucket is provided by the user as a runtime input parameter to the pipeline.

The code, though basic, is an example of how to develop a pipeline in a modular manner, with DoFns and functions in separate packages and modules.
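As a rough illustration of this layout, a routine in `utils` can be a plain Python function, which keeps it unit-testable without starting a pipeline. The name `split_element` is borrowed from the import used in the container tests later on; the signature and body below are assumptions for illustration only, and the repo's real implementation may differ.

```python
from typing import List


def split_element(element: str, delimiter: str = ",") -> List[str]:
    """Split one delimited record into trimmed fields (hypothetical body)."""
    return [field.strip() for field in element.split(delimiter)]


# In the pipeline a function like this would typically be applied with
# beam.Map, for example:
#   lines | "Split" >> beam.Map(split_element)
# Here it is called directly, which is all a unit test needs to do.
records = ["1, alpha, 10.5", "2, beta, 7.25"]
for record in records:
    print(split_element(record))
```

Keeping such helpers free of Beam imports means they can be tested with nothing more than the standard library.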

Pipeline Setup: do this before anything else!

Edit the first 2 lines of the Makefile

GCP_PROJECT ?= <CHANGEME to your Project ID>

GCP_REGION ?= <CHANGEME to your region for Ex: europe-west2>

Once done, run the make target make init from the root folder of the repo. Don’t forget to respond with a ‘y’ to any prompts.

This will do the following:

- Configure Private Google Access in your region.

- Enable the Dataflow Service

- Create a bucket that will store our Flex Template spec file. The name of the bucket will be <GCP_PROJECT_ID>-dataflow-<PROJECT_NUMBER>

- Create a Docker repo in Artifact Registry to house our Dataflow Flex Template image. The name of the repo will be beam-flex-demo

Kindly ensure that the assets (bucket and Artifact Registry repo) mentioned above exist in your project before proceeding.
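If it helps when checking, the expected names can be derived from your project details. The sketch below follows the naming described above for the bucket and the standard Artifact Registry Docker path format. The project ID, project number and region are placeholders, and `expected_assets` is a hypothetical helper, not something from the repo.

```python
def expected_assets(project_id: str, project_number: str, region: str) -> dict:
    """Derive the resource names that make init is described as creating."""
    repo = "beam-flex-demo"
    return {
        # Bucket name pattern: <GCP_PROJECT_ID>-dataflow-<PROJECT_NUMBER>
        "bucket": f"gs://{project_id}-dataflow-{project_number}",
        # Artifact Registry Docker repos live under <region>-docker.pkg.dev
        "artifact_registry_repo": f"{region}-docker.pkg.dev/{project_id}/{repo}",
    }


# Placeholder values; substitute your own project details.
assets = expected_assets("my-project", "123456789012", "europe-west2")
for kind, name in assets.items():
    print(f"{kind}: {name}")
```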

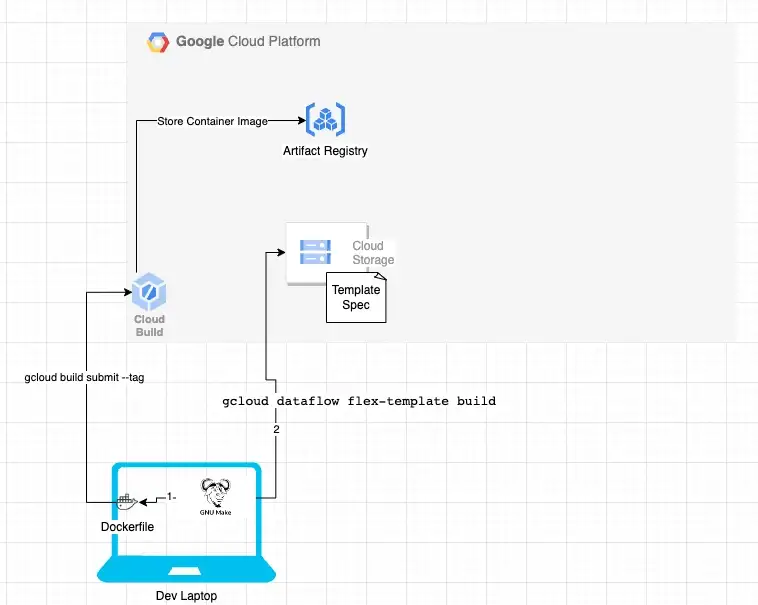

Build the Template

In the root folder of the repo, type the following command: make template

This will now do 2 things:

- Use the Dockerfile in this Repo to Build a Docker container that contains our code and Push it to the Artifact Registry Repo we created as part of Pipeline Setup

- Deploy the Template Spec File to the GCS Bucket we created as part of Pipeline Setup

Please check Artifact Registry for a Container and your GCS bucket for a template spec file before proceeding any further.
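For readers curious about the second step, the spec file is typically produced with `gcloud dataflow flex-template build`. The sketch below only assembles such a command as an argument list so the pieces are visible; I have not reproduced the repo's Makefile here, so treat the image name, tag and metadata file name as placeholders, and `flex_template_build_command` as a hypothetical helper.

```python
import shlex
from typing import List


def flex_template_build_command(spec_path: str, image: str, metadata_file: str) -> List[str]:
    """Assemble a gcloud command that writes a Flex Template spec file."""
    return [
        "gcloud", "dataflow", "flex-template", "build", spec_path,
        "--image", image,              # container image that was already pushed
        "--sdk-language", "PYTHON",
        "--metadata-file", metadata_file,
    ]


# Placeholder values for illustration only.
command = flex_template_build_command(
    spec_path="gs://my-project-dataflow-123456789012/templates/0.1.0/beam-flex-demo.json",
    image="europe-west2-docker.pkg.dev/my-project/beam-flex-demo/beam-flex-demo:0.1.0",
    metadata_file="metadata.json",
)
print(shlex.join(command))
```

Building the command as a list rather than a single string avoids quoting problems if it is later passed to `subprocess.run`.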

Dockerfile

Let’s take a closer look at the Dockerfile spec, as this is where quite a few of us trip up!

There are a few things I would like to point out here:

- Use Google’s Flex Template base image as far as possible; it keeps the setup simpler. This is not to say that one cannot use their own container, more details here.

- Make sure you set the following two env vars correctly:

→ FLEX_TEMPLATE_PYTHON_PY_FILE : Pipeline driver file, main.py in our case

→ FLEX_TEMPLATE_PYTHON_SETUP_FILE : Location of our setup file

- Always pin installs to a version. We are locking apache-beam to 2.40.0

- Don’t forget to install the package on the container (python setup.py install). Google’s quick start guide does not mention this step, but it kept failing without this step. Happy to be corrected on this.
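Since the two env vars are built from ARG values in this repo's Dockerfile, it can be useful to see what they resolve to. The sketch below substitutes the default ARG values (WORKDIR=/opt/dataflow and TEMPLATE_NAME=beam_flex_demo) into the ENV values as they are written in the Dockerfile. It is only an illustration of the substitution, not a Dockerfile parser, and `resolve_env` is a hypothetical helper.

```python
from string import Template

# ARG defaults as declared in this tutorial's Dockerfile.
build_args = {"WORKDIR": "/opt/dataflow", "TEMPLATE_NAME": "beam_flex_demo"}

# ENV values as written in the Dockerfile, before substitution.
env_templates = {
    "FLEX_TEMPLATE_PYTHON_PY_FILE": "${WORKDIR}/${TEMPLATE_NAME}/main.py",
    "FLEX_TEMPLATE_PYTHON_SETUP_FILE": "${WORKDIR}/setup.py",
}


def resolve_env(templates: dict, args: dict) -> dict:
    """Substitute ARG values into ENV values; raises KeyError if an ARG is missing."""
    return {name: Template(value).substitute(args) for name, value in templates.items()}


resolved = resolve_env(env_templates, build_args)
for name, path in resolved.items():
    print(f"{name}={path}")
# FLEX_TEMPLATE_PYTHON_PY_FILE=/opt/dataflow/beam_flex_demo/main.py
# FLEX_TEMPLATE_PYTHON_SETUP_FILE=/opt/dataflow/setup.py
```

If you override either ARG at build time, the resolved paths change accordingly, which is one reason the container tests later check that both paths point at real files.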

ARG TAG=latest

FROM gcr.io/dataflow-templates-base/python39-template-launcher-base:${TAG}

ARG WORKDIR=/opt/dataflow

RUN mkdir -p ${WORKDIR}

WORKDIR ${WORKDIR}

ARG TEMPLATE_NAME=beam_flex_demo

COPY . ${WORKDIR}/

ENV FLEX_TEMPLATE_PYTHON_PY_FILE=${WORKDIR}/${TEMPLATE_NAME}/main.py

ENV FLEX_TEMPLATE_PYTHON_SETUP_FILE=${WORKDIR}/setup.py

# Install apache-beam and other dependencies to launch the pipeline

RUN apt-get update \

&& pip install --no-cache-dir --upgrade pip \

&& pip install 'apache-beam[gcp]==2.40.0' \

&& pip install -U -r ${WORKDIR}/requirements.txt

RUN python setup.py install

ENV PIP_NO_DEPS=True

Build Speed

We use Kaniko to speed up our Docker build. This means our first build will be a bit slow, as the cache layers are being persisted to Artifact Registry, but subsequent builds should be faster.

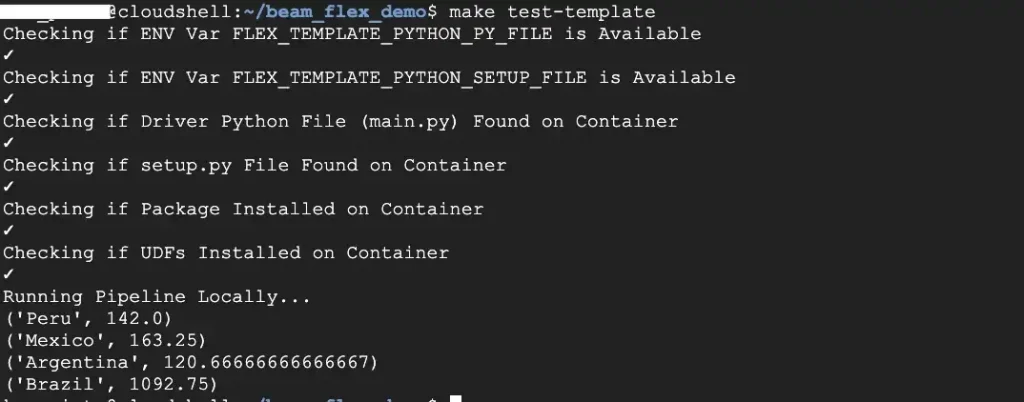

Testing the Container

The challenge with deploying a container was ensuring that the container was valid. We wanted to add some checks so that we catch these issues before runtime, and we also wanted these checks to be part of our CI/CD pipeline going forward.

As a minimum we wanted checks for the following on the container:

- The 2 env vars FLEX_TEMPLATE_PYTHON_PY_FILE and FLEX_TEMPLATE_PYTHON_SETUP_FILE exist

- These 2 variables reference actual files

- The pipeline has been successfully deployed as a package and can be imported

- A sub-package can be imported in Python

The following tests cover all of the checks mentioned above. They are pretty self-explanatory; kindly go through them in the Makefile (test-template).

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c 'env|grep -q "FLEX_TEMPLATE_PYTHON_PY_FILE" && echo ✓'

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c 'env|grep -q "FLEX_TEMPLATE_PYTHON_SETUP_FILE" && echo ✓'

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c "/usr/bin/test -f ${FLEX_TEMPLATE_PYTHON_PY_FILE} && echo ✓"

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c 'test -f ${FLEX_TEMPLATE_PYTHON_SETUP_FILE} && echo ✓'

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c 'python -c "import beam_flex_demo" && echo ✓'

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c 'python -c "from beam_flex_demo.utils.common_functions import split_element" && echo ✓'

docker run --rm --entrypoint /bin/bash ${TEMPLATE_IMAGE} -c "python ${FLEX_TEMPLATE_PYTHON_PY_FILE} --runner DirectRunner --output output.txt && cat output.txt*"

In order to run this, type make test-template at the root folder of your repo. The output should look like the image below:

Also, note that the last test actually runs the pipeline locally using the direct runner. This is a full E2E test before moving this to deployment phase.
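The same checks can also be expressed in Python, which may be handy if you prefer a single script run inside the container over several shell one-liners. This is a minimal sketch under that assumption and is not part of the repo; `check_container` is a hypothetical helper. It covers the env var, file and import checks, but not the DirectRunner run.

```python
import importlib.util
import os
from typing import Iterable, List, Mapping

REQUIRED_ENV_VARS = (
    "FLEX_TEMPLATE_PYTHON_PY_FILE",
    "FLEX_TEMPLATE_PYTHON_SETUP_FILE",
)


def check_container(env: Mapping[str, str], modules: Iterable[str]) -> List[str]:
    """Return a list of failures; an empty list means every check passed."""
    failures = []
    for var in REQUIRED_ENV_VARS:
        path = env.get(var)
        if not path:
            failures.append(f"{var} is not set")
        elif not os.path.isfile(path):
            failures.append(f"{var} points to a missing file: {path}")
    for module in modules:
        try:
            found = importlib.util.find_spec(module) is not None
        except ImportError:
            # Raised when a parent package of a dotted name cannot be imported.
            found = False
        if not found:
            failures.append(f"cannot import {module}")
    return failures


# Inside the container this could be called as:
#   check_container(os.environ,
#                   ["beam_flex_demo", "beam_flex_demo.utils.common_functions"])
# Outside the container, the same call simply reports what is missing.
print(check_container({}, ["json"]))
```

Returning a list of failures instead of stopping at the first one makes the output more useful in a CI/CD log, since every broken check is reported in a single run.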

Run the Pipeline

CLI

Type make run at the root folder of your repo.

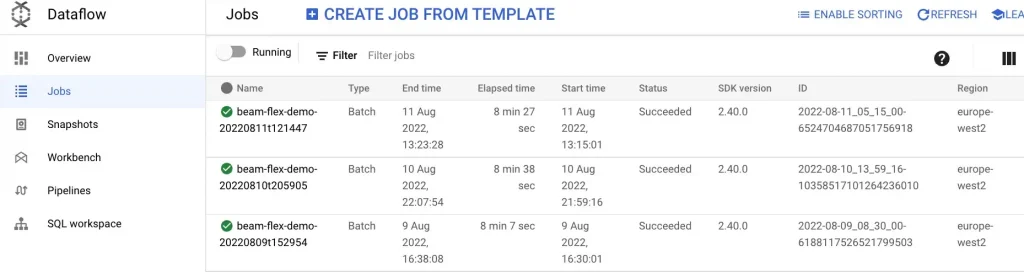

Log in to Dataflow and view the pipeline. Don’t panic if your job stays in the Queued state for 4–6 minutes; this is normal, as Dataflow is provisioning resources and performing pre-execution checks. You should see a Dataflow job named beam-flex-demo-<timestamp>, where the timestamp comes from date +%Y%m%dt%H%M%S.

Once the job shows a Succeeded status, you will be able to locate your file under the following GCS location

<project_id>-dataflow-<project-number>/out/beam-flex-demo-YYYYMMDDtHHmmSS/output-*
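To make the naming concrete, the sketch below derives a job name with the same timestamp format, the matching output prefix, and a `gcloud dataflow flex-template run` command as an argument list. It assumes the bucket naming from the setup step (<project id>-dataflow-<project number>) and that the pipeline's parameter is called `output`, as in the DirectRunner test. The project values are placeholders and the helper functions are hypothetical; the Makefile's actual run target may differ.

```python
import shlex
from datetime import datetime
from typing import List


def job_name(now: datetime) -> str:
    # Same format as `date +%Y%m%dt%H%M%S`; the "t" is a literal character.
    return "beam-flex-demo-" + now.strftime("%Y%m%dt%H%M%S")


def output_prefix(project_id: str, project_number: str, job: str) -> str:
    return f"gs://{project_id}-dataflow-{project_number}/out/{job}/output"


def run_command(job: str, region: str, spec_path: str, output: str) -> List[str]:
    return [
        "gcloud", "dataflow", "flex-template", "run", job,
        "--template-file-gcs-location", spec_path,
        "--region", region,
        "--parameters", f"output={output}",
    ]


# Placeholder project details and a fixed time so the result is reproducible.
job = job_name(datetime(2022, 8, 12, 9, 30, 5))
prefix = output_prefix("my-project", "123456789012", job)
spec = "gs://my-project-dataflow-123456789012/templates/0.1.0/beam-flex-demo.json"
print(job)  # beam-flex-demo-20220812t093005
print(shlex.join(run_command(job, "europe-west2", spec, prefix)))
```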

Cloud Console

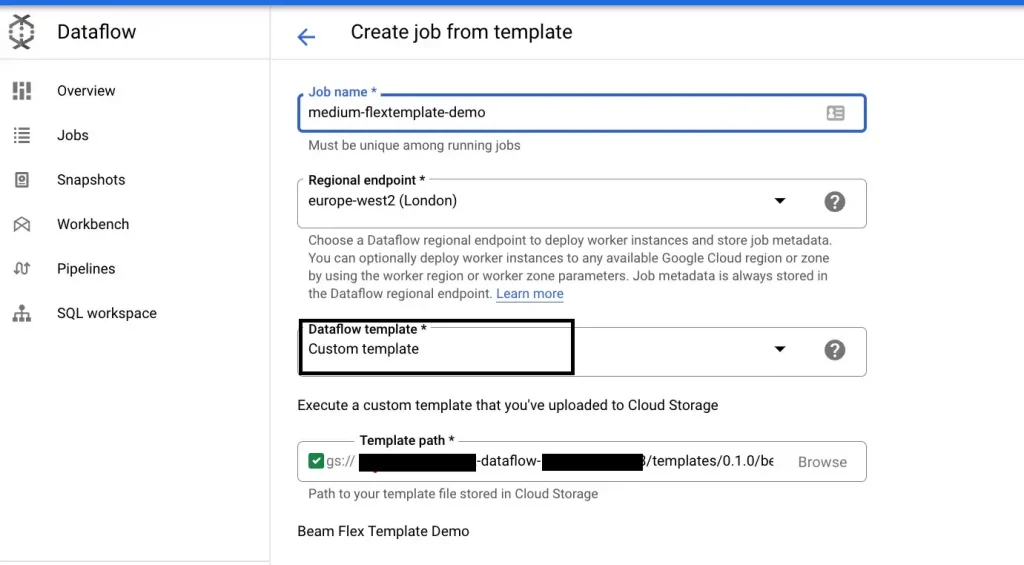

From the Google Cloud console, select Dataflow → Jobs → Create Job From Template. Under templates, select Custom, then select the spec file from the GCS bucket (<project_id>-dataflow-<project-number>/templates/0.1.0/beam-flex-demo.json). Select the bucket to store the output and then click on Run Job.

Troubleshooting

Ideally, if you have followed all of the above steps, the need should not arise. But in the unfortunate event this does occur, kindly refer to this guide.

The most common cause I have found is that Private Google Access has not been configured. Please ensure it is enabled, and that it is set on the correct subnet and VPC if you use a specific VPC.

If, like one of my colleagues, you run into this error during make test-template:

denied: Permission "artifactregistry.repositories.downloadArtifacts" denied on resource

Try adding yourself manually to the Repo with the command below:

gcloud artifacts repositories add-iam-policy-binding beam-flex-demo --location=<YOUR REGION> --member=user:<YOUR USER NAME> --role=roles/artifactregistry.reader --project <YOUR GCP PROJECT>

That’s it! We have built, tested and executed a Flex Template. I do hope you try this tutorial. I’d also like to hear if you have added new test cases (hint: check for standard packages like apache-beam).

Finally, big thanks to Lee Doolan for testing the repo, highlighting issues and suggesting improvements.
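One possible way to act on that hint is a version check that could be run with the container's Python. The sketch below uses the standard library's `importlib.metadata`; the function name `check_pinned` and the injectable `version_of` argument are assumptions made so the check can be exercised without apache-beam installed.

```python
from importlib import metadata
from typing import Callable, Optional


def check_pinned(
    package: str,
    expected: str,
    version_of: Callable[[str], str] = metadata.version,
) -> Optional[str]:
    """Return None if the installed version matches, else a failure message."""
    try:
        installed = version_of(package)
    except metadata.PackageNotFoundError:
        return f"{package} is not installed"
    if installed != expected:
        return f"{package}=={installed}, expected {expected}"
    return None


# Inside the container the real lookup would be used:
#   check_pinned("apache-beam", "2.40.0")
# Here a stand-in lookup shows the mismatch case.
print(check_pinned("apache-beam", "2.40.0", version_of=lambda name: "2.39.0"))
```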



Disclaimer: This is to inform readers that the views, thoughts, and opinions expressed in the text belong solely to the author.

The original article was published on Medium.