By Mohamed Labouardy, Jun 16, 2019

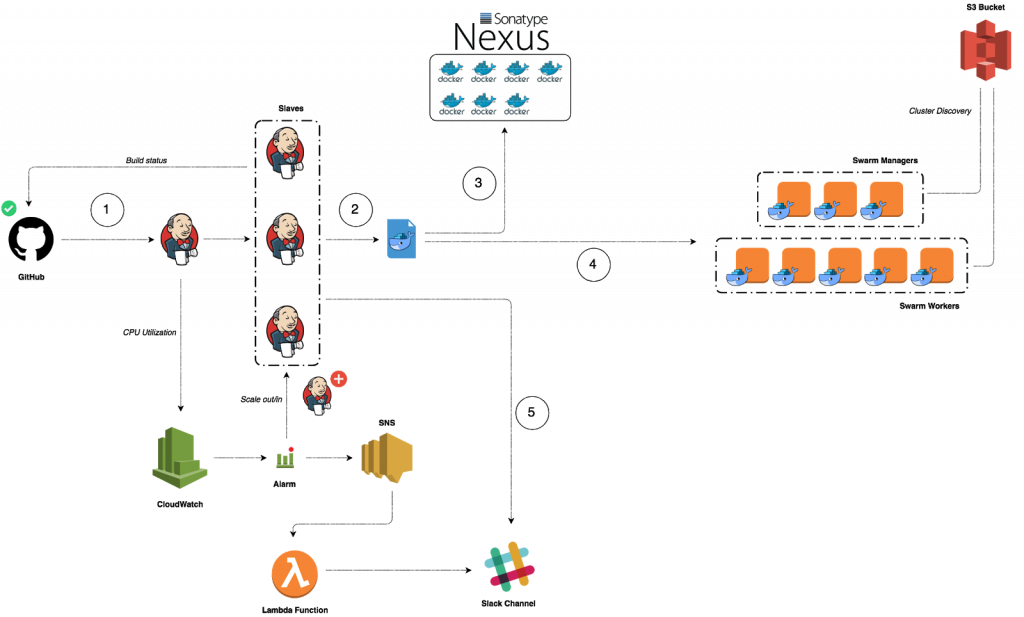

Last year I gave a talk at Nexus User Conference 2018 on how to build a CI/CD pipeline from scratch on AWS to deploy Dockerized microservices and serverless functions. You can read my previous Medium post for a step-by-step guide.

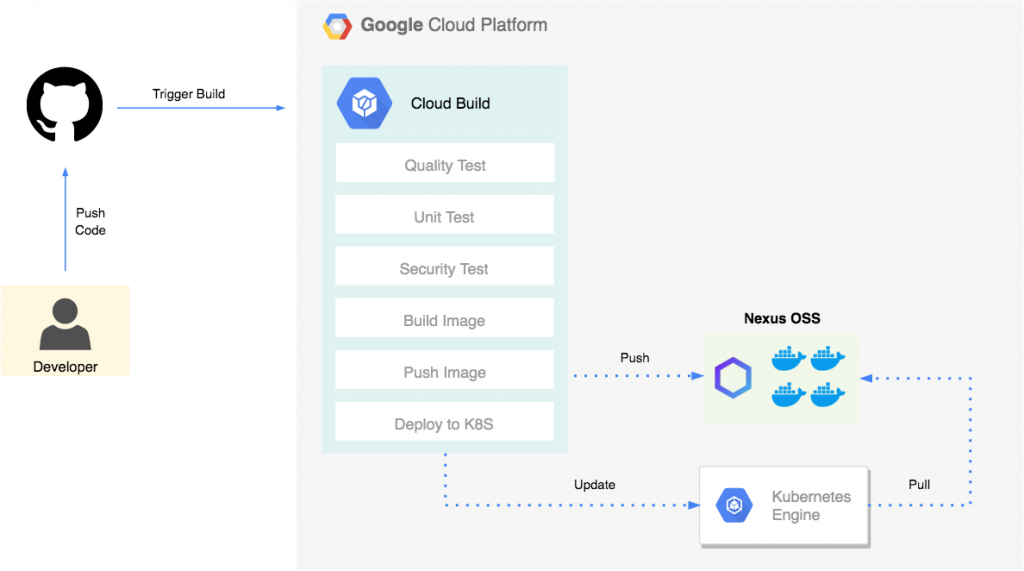

At the 2019 edition of Nexus User Conference, I presented how to build a CI/CD workflow on GCP with GKE, Cloud Build and Infrastructure as Code tools such as Terraform and Packer. This post walks you through creating an automated end-to-end process that packages a Go-based web application in a Docker container image and deploys that image on a Google Kubernetes Engine (GKE) cluster.
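The post doesn't include the application's source. For orientation, here is a minimal sketch of the kind of Go web service this pipeline could package. The greeting handler and the `PORT` override are hypothetical; the default port of 3000 is taken from the deployment step later in the post.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

// listenAddr returns the address to bind to. Port 3000 is the assumed default,
// matching the port used when the app is deployed later in this post; the PORT
// override is a hypothetical convenience for local runs.
func listenAddr(port string) string {
	if port == "" {
		port = "3000"
	}
	return ":" + port
}

// greeting builds the response body; the message itself is hypothetical.
func greeting(name string) string {
	if name == "" {
		name = "world"
	}
	return fmt.Sprintf("Hello, %s!", name)
}

// handler answers every path with a plain-text greeting, e.g. GET /?name=Nexus.
func handler(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/plain; charset=utf-8")
	fmt.Fprintln(w, greeting(r.URL.Query().Get("name")))
}

func main() {
	http.HandleFunc("/", handler)
	addr := listenAddr(os.Getenv("PORT"))
	log.Printf("listening on %s", addr)
	log.Fatal(http.ListenAndServe(addr, nil))
}
```

Keeping `listenAddr` and `greeting` as plain functions lets the Unit Test stage exercise them without opening a network port.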

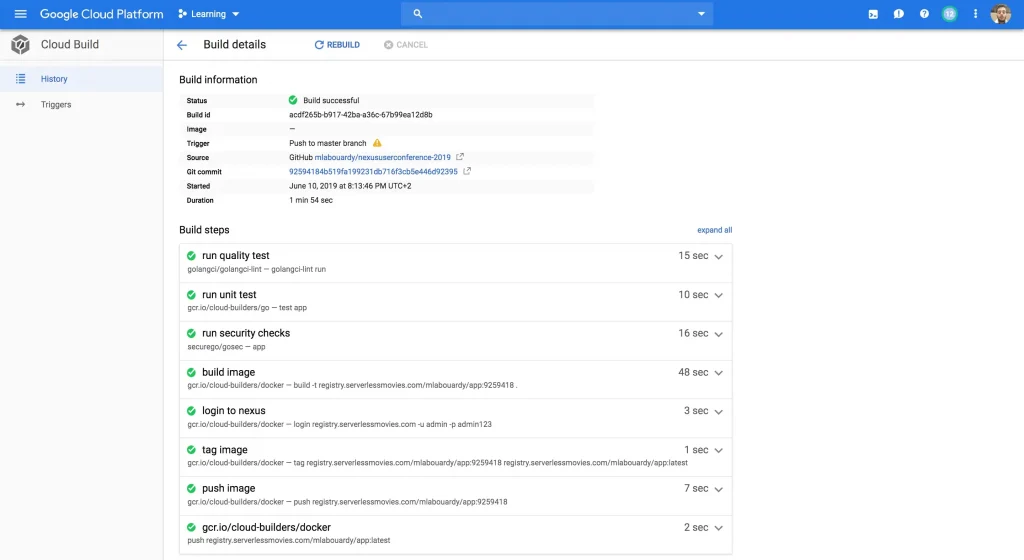

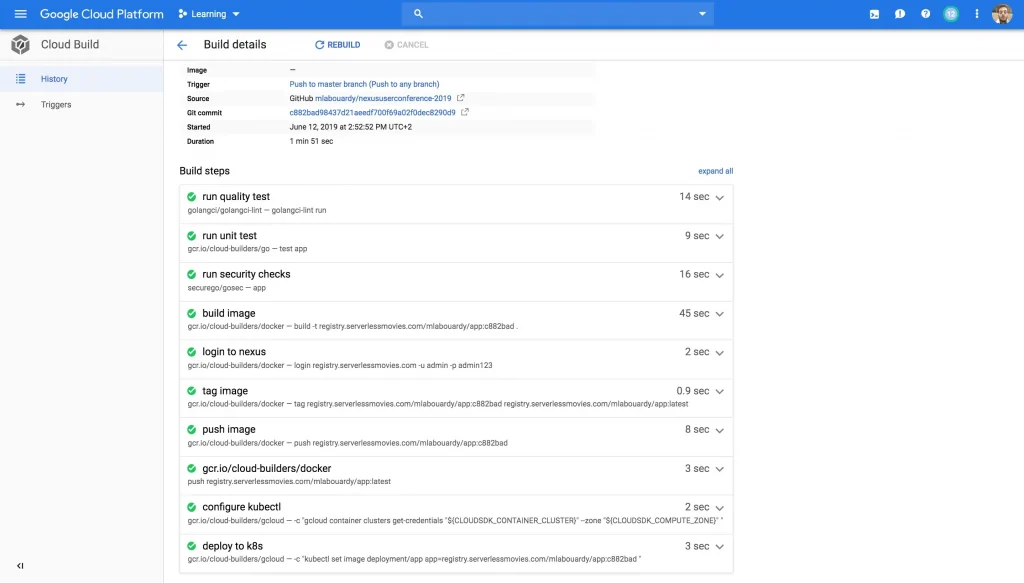

Google Cloud Build allows you to define your pipeline as code in a template file called cloudbuild.yaml (this definition file must be committed to the application’s code repository). The continuous integration pipeline is divided into multiple stages, or steps:

- Quality Test: check whether our code is well formatted and follows Go best practices.

- Unit Test: run unit tests. You could also output your code coverage and validate that it meets your coverage requirements.

- Security Test: inspect the source code for common security vulnerabilities.

- Build: build a Docker image using Docker’s multi-stage build feature.

- Push: tag the artifact (Docker image) and store it in a private Docker registry.

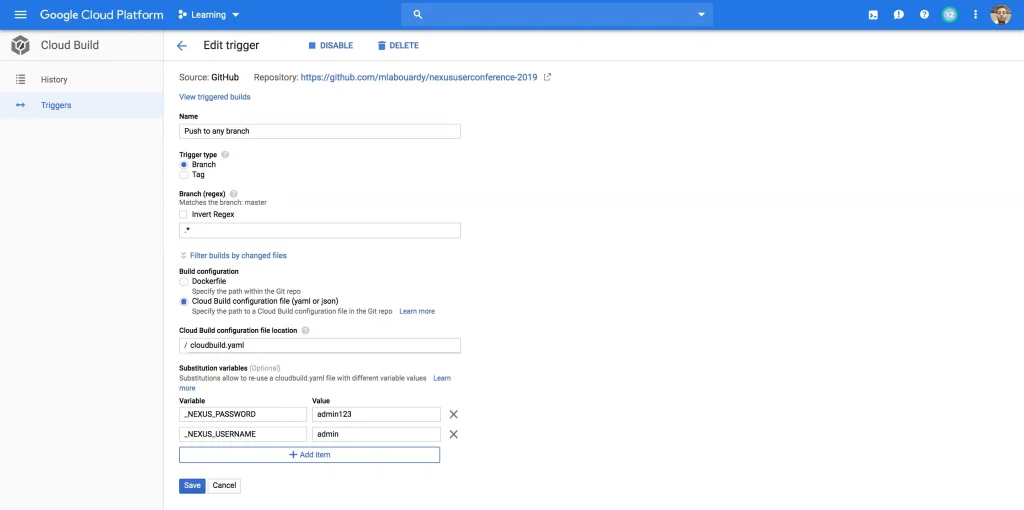

Now we have to connect the dots by adding a build trigger that starts our pipeline. Navigate to the Cloud Build console and create a new trigger. Fill in the details as shown in the screenshot below, then create the trigger.

Notice that the Nexus registry credentials are passed as variables rather than hardcoded, for security reasons.

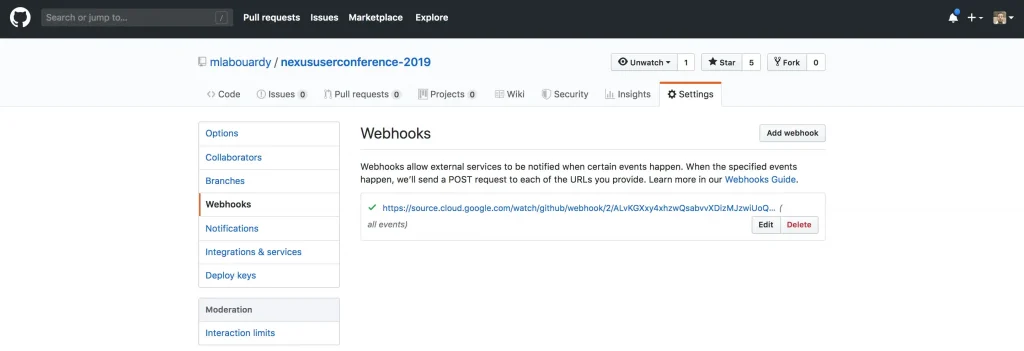
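Cloud Build user-defined substitution variables must start with an underscore (for example `_NEXUS_USERNAME`), and a build step can receive them as environment variables through its `env` field, e.g. `NEXUS_USERNAME=$_NEXUS_USERNAME`. The variable names used here are hypothetical, since the trigger's actual variables only appear in the screenshot. A tool running inside such a step could then read the credentials as sketched below, failing fast instead of falling back to hardcoded values.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// RegistryCreds holds Nexus registry credentials supplied at build time.
type RegistryCreds struct {
	Username string
	Password string
}

// credsFromEnv reads credentials through lookup (os.LookupEnv in production).
// NEXUS_USERNAME and NEXUS_PASSWORD are hypothetical variable names.
func credsFromEnv(lookup func(string) (string, bool)) (RegistryCreds, error) {
	user, ok := lookup("NEXUS_USERNAME")
	if !ok || user == "" {
		return RegistryCreds{}, errors.New("NEXUS_USERNAME is not set")
	}
	pass, ok := lookup("NEXUS_PASSWORD")
	if !ok || pass == "" {
		return RegistryCreds{}, errors.New("NEXUS_PASSWORD is not set")
	}
	return RegistryCreds{Username: user, Password: pass}, nil
}

func main() {
	creds, err := credsFromEnv(os.LookupEnv)
	if err != nil {
		fmt.Fprintln(os.Stderr, "error:", err)
		return
	}
	// Never print the password; only confirm which user will log in.
	fmt.Println("logging in to the registry as", creds.Username)
}
```

Passing the lookup function in, rather than calling `os.LookupEnv` directly, keeps the function testable without touching the process environment.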

A new webhook is created automatically in your GitHub repository to watch for changes.

All good! Now that everything is configured, push your features to your repository and the pipeline will jump into action.

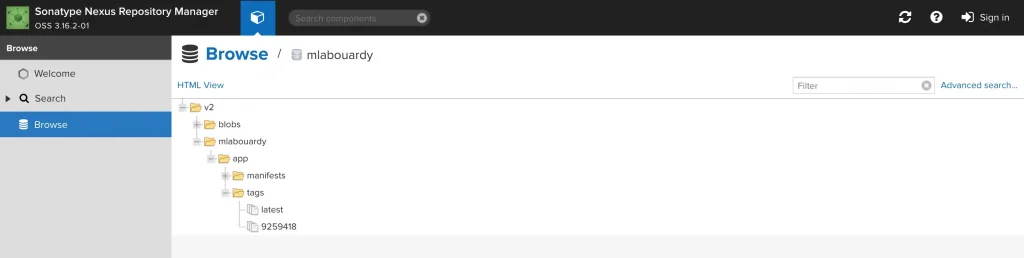

Once the CI finishes, the Docker image is pushed to the hosted Docker registry. If we jump back to Nexus Repository Manager, the image should be available:

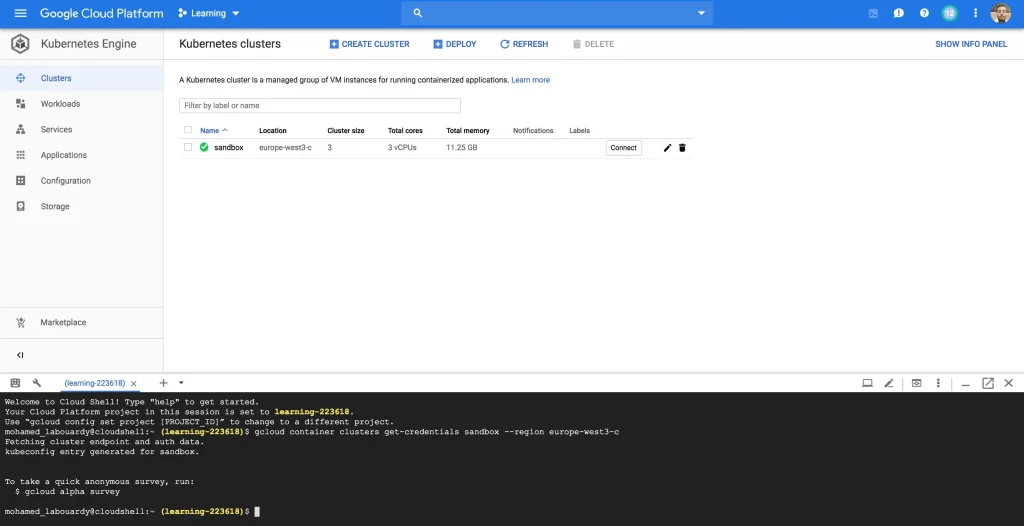

Now that the Docker image is stored in a registry, we will deploy it to a Kubernetes cluster. First, we create a GKE cluster using Terraform.

Once the cluster is created, we provision a new shell machine and configure the `kubectl` command-line tool to communicate with the cluster.

Our image is stored in a private Docker repository, so the Kubernetes nodes need credentials to pull it. Authenticate with the registry using the `docker login` command, then create a Secret based on the Docker credentials stored in the config.json file (this file holds the authorization token).

Now we are ready to deploy our container using a deployment configuration file.

To pull the image from the private registry, Kubernetes needs credentials. The imagePullSecrets field in the configuration file specifies that Kubernetes should get the credentials from a Secret named nexus.
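The configuration file itself isn't reproduced here. As a sketch of what it needs to contain, this Go program emits a minimal apps/v1 Deployment as JSON (which `kubectl apply -f -` accepts) with `imagePullSecrets` pointing at the `nexus` Secret. The structs are hand-written to mirror a subset of the Kubernetes API, avoiding a dependency on `k8s.io/api`; the deployment name, image, replica count and labels are placeholders, and port 3000 follows the port mentioned in the post.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

// Minimal hand-written structs mirroring a subset of the Kubernetes apps/v1 API.
type ObjectMeta struct {
	Name   string            `json:"name,omitempty"`
	Labels map[string]string `json:"labels,omitempty"`
}

type ContainerPort struct {
	ContainerPort int `json:"containerPort"`
}

type Container struct {
	Name  string          `json:"name"`
	Image string          `json:"image"`
	Ports []ContainerPort `json:"ports,omitempty"`
}

type LocalObjectReference struct {
	Name string `json:"name"`
}

type PodSpec struct {
	Containers       []Container            `json:"containers"`
	ImagePullSecrets []LocalObjectReference `json:"imagePullSecrets,omitempty"`
}

type PodTemplateSpec struct {
	Metadata ObjectMeta `json:"metadata"`
	Spec     PodSpec    `json:"spec"`
}

type LabelSelector struct {
	MatchLabels map[string]string `json:"matchLabels"`
}

type DeploymentSpec struct {
	Replicas int             `json:"replicas"`
	Selector LabelSelector   `json:"selector"`
	Template PodTemplateSpec `json:"template"`
}

type Deployment struct {
	APIVersion string         `json:"apiVersion"`
	Kind       string         `json:"kind"`
	Metadata   ObjectMeta     `json:"metadata"`
	Spec       DeploymentSpec `json:"spec"`
}

// newDeployment runs `replicas` copies of image and tells the kubelet to pull it
// with the credentials stored in the Secret named pullSecret.
func newDeployment(name, image, pullSecret string, replicas, port int) Deployment {
	labels := map[string]string{"app": name}
	return Deployment{
		APIVersion: "apps/v1",
		Kind:       "Deployment",
		Metadata:   ObjectMeta{Name: name},
		Spec: DeploymentSpec{
			Replicas: replicas,
			Selector: LabelSelector{MatchLabels: labels}, // must match the pod labels below
			Template: PodTemplateSpec{
				Metadata: ObjectMeta{Labels: labels},
				Spec: PodSpec{
					Containers: []Container{{
						Name:  name,
						Image: image,
						Ports: []ContainerPort{{ContainerPort: port}},
					}},
					ImagePullSecrets: []LocalObjectReference{{Name: pullSecret}},
				},
			},
		},
	}
}

func main() {
	// Placeholder image; "nexus" matches the Secret name used in this post.
	d := newDeployment("app", "registry.example.com/app:latest", "nexus", 2, 3000)
	out, err := json.MarshalIndent(d, "", "  ")
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(string(out)) // pipe into: kubectl apply -f -
}
```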

Then deploy your application, which listens on port 3000, to the cluster.

By default, the containers you run on GKE are not accessible from the Internet because they do not have external IP addresses. You must explicitly expose your application to traffic from the Internet. I’m going to use a Service of type LoadBalancer for this demo, but you are free to use another option, such as an Ingress.
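A LoadBalancer Service for this app might look like the manifest generated below, sketched with small hand-written Go structs rather than the official `k8s.io/api` types. It assumes the pods carry the label `app: app` and that the container listens on port 3000, while the load balancer itself listens on port 80. For a quick demo, `kubectl expose deployment app --type=LoadBalancer --port=80 --target-port=3000` creates an equivalent Service.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

type ServicePort struct {
	Protocol   string `json:"protocol"`
	Port       int    `json:"port"`
	TargetPort int    `json:"targetPort"`
}

type ServiceSpec struct {
	Type     string            `json:"type"`
	Selector map[string]string `json:"selector"`
	Ports    []ServicePort     `json:"ports"`
}

type Service struct {
	APIVersion string            `json:"apiVersion"`
	Kind       string            `json:"kind"`
	Metadata   map[string]string `json:"metadata"`
	Spec       ServiceSpec       `json:"spec"`
}

// newLoadBalancer exposes the pods labelled app=<app> on port 80 of an external
// load balancer provisioned by GKE, forwarding traffic to targetPort in the containers.
func newLoadBalancer(app string, targetPort int) Service {
	return Service{
		APIVersion: "v1",
		Kind:       "Service",
		Metadata:   map[string]string{"name": app},
		Spec: ServiceSpec{
			Type:     "LoadBalancer",
			Selector: map[string]string{"app": app},
			Ports:    []ServicePort{{Protocol: "TCP", Port: 80, TargetPort: targetPort}},
		},
	}
}

func main() {
	out, err := json.MarshalIndent(newLoadBalancer("app", 3000), "", "  ")
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(string(out)) // pipe into: kubectl apply -f -
}
```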

Once the load balancer has been provisioned, find its external IP address (the EXTERNAL-IP column of `kubectl get service`) and copy it.

Point your browser to that IP address to check whether your application is accessible.
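If you would rather check from a script than a browser, the sketch below polls the address until it answers with a 2xx status, since a freshly provisioned load balancer can take a short while to start serving. It assumes the Service listens on port 80, so the URL carries no port; pass the external IP as the first argument. The retry count and delay are arbitrary choices.

```go
package main

import (
	"fmt"
	"net/http"
	"os"
	"time"
)

// httpStatus performs a GET with a timeout and returns the status code.
func httpStatus(url string) (int, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Get(url)
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

// waitUntilUp calls fetch until it reports a 2xx status or attempts run out,
// sleeping delay between attempts.
func waitUntilUp(fetch func(string) (int, error), url string, attempts int, delay time.Duration) error {
	if attempts < 1 {
		attempts = 1
	}
	var lastErr error
	for i := 0; i < attempts; i++ {
		status, err := fetch(url)
		switch {
		case err != nil:
			lastErr = err
		case status >= 200 && status < 300:
			return nil
		default:
			lastErr = fmt.Errorf("unexpected status %d", status)
		}
		if i < attempts-1 {
			time.Sleep(delay)
		}
	}
	return fmt.Errorf("%s not reachable after %d attempts: %w", url, attempts, lastErr)
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: check <external-ip>")
		return
	}
	url := "http://" + os.Args[1] + "/"
	if err := waitUntilUp(httpStatus, url, 10, 6*time.Second); err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("application is reachable at", url)
}
```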

Finally, to automatically deploy our changes to the K8s cluster, we update the cloudbuild.yaml file with continuous deployment steps. These apply a rolling update to the existing deployment by updating its image.

Test it out by pushing some changes to your repository. Within a minute or two, they should be deployed to your live infrastructure.

That’s it! You’ve just built a solid CI/CD pipeline on GCP, and the same workflow can be adapted to whatever application code you have.

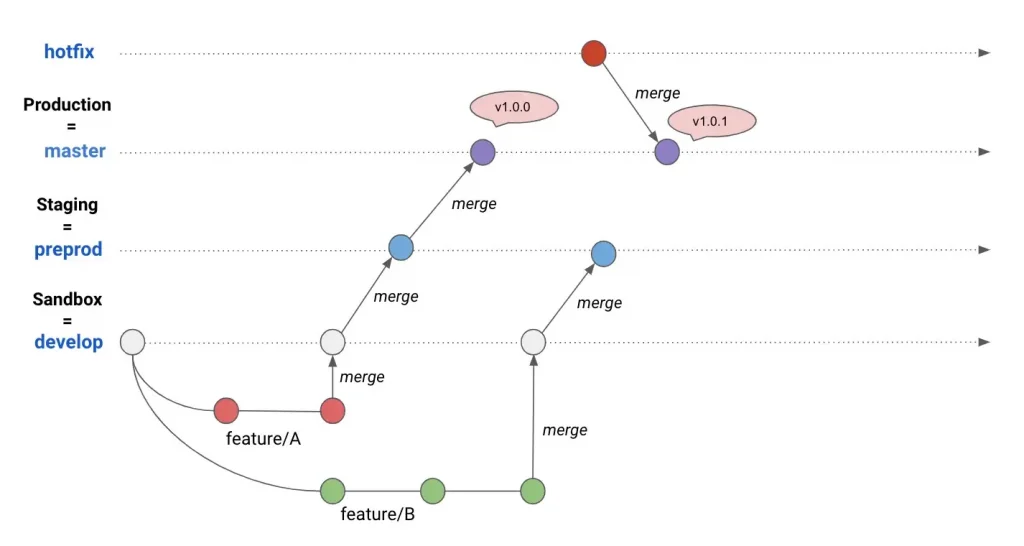

You can take this workflow further and use the GitFlow branching model to separate your deployment environments, so you can test new changes and features without breaking production.
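As an illustration of that idea, the build could decide where to deploy from the branch name, which Cloud Build exposes to triggered builds as `$BRANCH_NAME`. The mapping below (master to production, release/ and hotfix/ branches to staging, develop to a sandbox, feature branches CI-only) is a hypothetical example, not a prescribed GitFlow rule.

```go
package main

import (
	"fmt"
	"strings"
)

// environmentFor maps a GitFlow branch to the environment a build should deploy to.
// The environment names and the mapping are hypothetical; adjust them to your setup.
// An empty result means "run CI only, don't deploy".
func environmentFor(branch string) string {
	switch {
	case branch == "master":
		return "production"
	case strings.HasPrefix(branch, "release/"), strings.HasPrefix(branch, "hotfix/"):
		return "staging"
	case branch == "develop":
		return "sandbox"
	default: // feature/* and anything else
		return ""
	}
}

func main() {
	for _, b := range []string{"master", "release/1.2.0", "develop", "feature/login"} {
		env := environmentFor(b)
		if env == "" {
			env = "(CI only)"
		}
		fmt.Printf("%-15s -> %s\n", b, env)
	}
}
```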

Drop your comments, feedback, or suggestions below, or connect with me directly on Twitter @mlabouardy and GitHub @nexususerconference-2019.

The original article was published on Medium.