By Peter Billen. Oct 26, 2022

In this article, we evaluate how data can enrich a landing zone and set you up for a modern, scalable, future-proof, and user-centric solution.

Disclaimer: I work at Google in the cloud team. Opinions are my own and not the views of my current employer.

When organizations start using Google Cloud, setting up a landing zone is a best practice. Such a landing zone organizes and controls how services are deployed, used, and scaled in a secure way. It includes multiple elements, such as identities, resource management, security, and networking. It provides the foundation for the teams that will implement workloads, and it evolves over time as their needs change.

Typically, organizations set up a dedicated team to manage the landing zone and enable the different workload teams. This team helps with the workload assessment, project setup, and team enablement. It becomes the single point of contact for guiding the organization on Google Cloud.

Because this one team serves the entire organization, it is important that landing zones are designed in a modular manner with a focus on automation. This allows embedding and standardizing many principles, including reusability, repeatability, traceability, and scalability. The concept of Infrastructure as Code is often emphasized in this context, and although it has its value, it needs to be framed within a broader, more elaborate solution.

What is the value data will add in a landing zone?

At the start of the cloud journey, the landing zone is set up. Its elements are typically well described and implemented:

- How is the organization and project structure defined?

- What identity solution is used to authenticate users?

- What security policies are applicable?

- How are billing and cost control set up?

- … and so forth

Yet the focus is mostly on technical features and settings, while the operating model and services receive less attention. Once the landing zone becomes operational, stakeholders across the organization start using it and generate interactions across the topics that have been defined. These interactions generate data and require users to interact with the cloud team. From this perspective, an integrated solution can play an invaluable role by providing:

- Cloud users with self-service capabilities

- The cloud team with an efficient and effective foundation for management and implementation

- A central solution to control and govern the landing zone

- Relevant and up-to-date information to stakeholders across the organization

In a nutshell, this comes down to orchestrating the automated services centrally and storing the necessary data in a landing zone metadata platform. Each service stores its output so that it can be used as input by other services. This makes the case for managing the landing zone the same way as the data and integration platform that handles the organization’s data and insights.

Let’s take the following question: can you provide a list of all your cloud projects? How would you respond? Sometimes I get an answer, but often the response is along the lines of: “let me get back to you”; “I will need to run a command or script”; “I have a spreadsheet somewhere that has the information”; … And this is a fairly simple question. What if you would like to know who the business owner is, what the project is used for, which budget alerts have been set up, and how they compare to actual consumption over the last three months? This is where the landing zone metadata platform comes in. Wouldn’t it be useful to have this data available at all times for the services, users, and stakeholders who need it?

As such, this solution can play an important role in creating a cloud FinOps culture and mindset in the organization. It provides the tools to deliver transparent, accurate, and actionable data to all stakeholders. In this way, technology, finance, and business can understand each other and work together to optimize costs and accelerate business value realization.

Why use an integrated approach?

The basic principles are simple, but integration and servicing require substantial effort. Also, it is impossible to account for all features and services from the start. Cloud capabilities and management tools are evolving at a fast pace, each with its own focus, advantages, and disadvantages. There is always a balance to strike.

- Different types of users have different needs: for example, analyzing financial data requires deep analytical capabilities, which differ from the needs of development teams that embed security policies into their solutions

- New technologies and services will become available: as technology continues to become more complex, you want to leverage the flexibility and power that cloud platform options provide

- Organizational structures and processes can change: you also want the same cloud platform options to be simple and intuitive for all users, acting as an enabler regardless of how you optimize or transform internally

- Tooling will evolve with new features, and new tools will emerge: how can you take advantage of these quickly and without constraints?

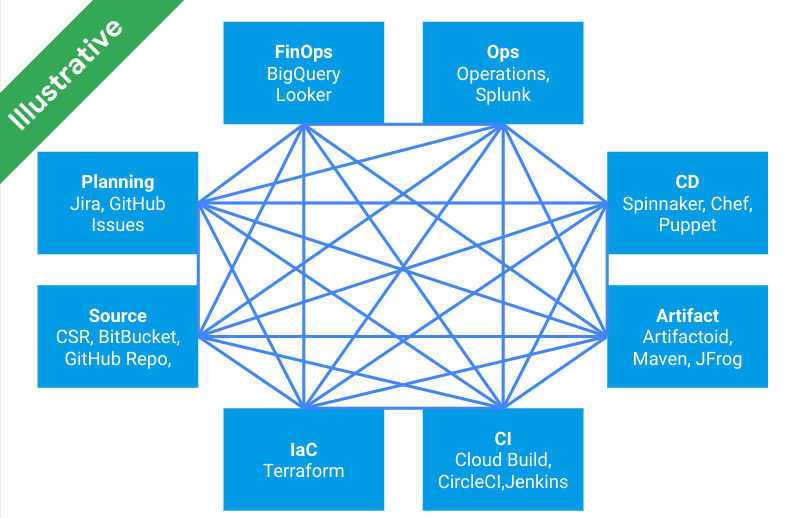

Therefore, it is essential to avoid point-to-point connections between tools, which lead to an unmaintainable state and reduce visibility and transparency.

Instead, a modular platform that acts as an aggregator and processor overcomes these issues by managing and controlling how the different tools interact.
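To make the maintenance argument concrete, here is a back-of-the-envelope count, assuming the worst case in which every tool needs to exchange data with every other tool. Direct integrations grow quadratically with the number of tools, while routing everything through a central platform needs only one connection per tool. The function names are illustrative.

```python
def point_to_point_links(n_tools: int) -> int:
    """Integrations needed when every tool connects directly to every other tool."""
    return n_tools * (n_tools - 1) // 2


def hub_links(n_tools: int) -> int:
    """Integrations needed when every tool connects only to a central platform."""
    return n_tools


for n in (4, 8, 12):
    print(f"{n} tools: {point_to_point_links(n)} point-to-point vs {hub_links(n)} via a hub")
```

With 12 tools, that is 66 direct integrations versus 12, and each additional tool adds one link per existing tool in the mesh, but only a single link at the hub.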

How to build a landing zone metadata platform?

The term ‘platform’ is well chosen: sitting at the core of your cloud solutions, it is never finished and grows and evolves over time. It needs to be treated as a product, not a project, with dedicated ownership embedded in your organization’s central cloud team governance structure.

Start small and grow in phases. Experience shows that it is best to start small, experiment, build confidence, and then scale. The core principles of system design describe how to achieve a robust solution: introduce changes atomically, minimize potential risks, and improve operational efficiency. By focusing on use cases, key capabilities are implemented progressively, allowing the vision and strategy to become reality while gaining buy-in from the different IT and business stakeholders. The central cloud team helps drive the change so that the new way of working becomes the norm.

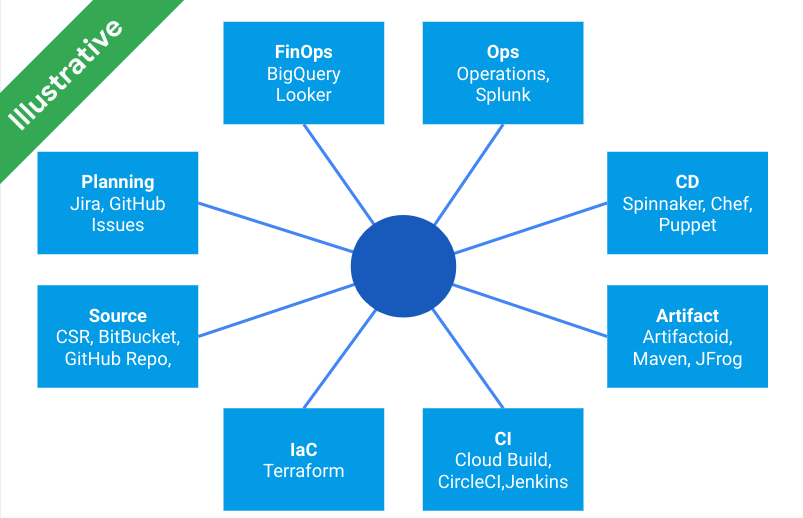

As stated before, modularity is an important trait for such a platform. Choices should be evaluated against key criteria, including ease of use, execution, integration, and scalability.

Keeping these at the center of the design and implementation will ensure that:

- New technologies can be added easily

- Migration costs are lower when technologies are replaced

- Users and systems are assisted by insights and automation

- New ideas and possibilities can be quickly implemented

When it comes to leveraging the capabilities of different products, decoupling lets you adopt each product’s innovations as they become available, while data uniformization ensures that all components speak the same language. That said, every product has its unique characteristics, requiring specific conditions to trigger actions. It is therefore best to keep execution as close as possible to the respective product and not to over-generalize, since doing so can flatten the product’s value.

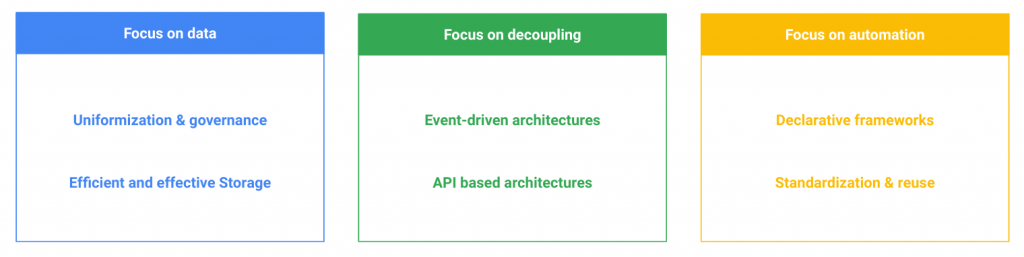

Typically, a combination of a cloud native platform framework and a selection of best-in-class tools will offer the best flexibility and future readiness. The essential building blocks are:

- Developer tools to build, run and monitor

- Security and monitoring for the solution and infrastructure

- Orchestration and integration capabilities supporting any type of modern integration pattern: APIs, events, streams, files, CDC, etc.

- Data management solutions to process and structure metadata

- User experience capabilities to build effective user interfaces

- Self-service insights and analytics, and leading AI solutions to help predict, automate and drive business outcomes

A key component of this platform will be the metadata store that will unify and connect the different data concepts. This will be the ‘brain’ of the solution while the beating ‘heart’ will be formed by the execution orchestrator.

How to get started?

The path for implementing this type of integrated solution will vary. Do you want to start small and give it a try? Then the project overview question mentioned earlier is a good first use case. It allows you to experiment, learn, and expand easily.

Note: the concepts below are illustrative and intended to guide and inspire you rather than to provide an exhaustive list of the choices and actions needed to build the solution.

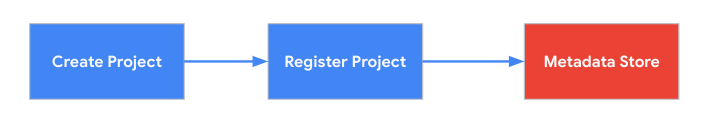

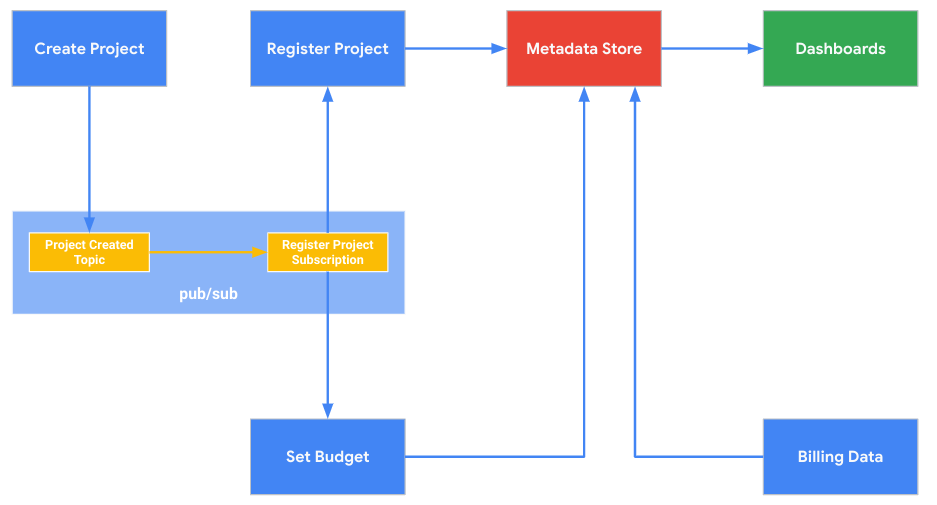

You can answer the initial question “Can you provide a list of all your cloud projects?” by making sure that every time a cloud project is created, it is also registered. As part of the landing zone setup, Infrastructure-as-Code (IaC) is used to automate project creation, so the next step along the way is to register each new project in a data store.
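A minimal sketch of the registration step, using Python’s built-in `sqlite3` module as a stand-in for the metadata store. The table layout, column names, and the `register_project` / `list_projects` helpers are hypothetical; in practice the call would run as a step after the IaC pipeline has created the project, and the store could be any managed database.

```python
import sqlite3
from datetime import datetime, timezone
from typing import Optional

# Hypothetical registry schema; columns mirror the registration data discussed in the article.
SCHEMA = """
CREATE TABLE IF NOT EXISTS projects (
    project_id     TEXT PRIMARY KEY,
    business_owner TEXT NOT NULL,
    description    TEXT,
    project_type   TEXT,
    allowed_budget REAL,
    registered_at  TEXT NOT NULL
)
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def register_project(conn: sqlite3.Connection, project_id: str, business_owner: str,
                     description: str = "", project_type: str = "",
                     allowed_budget: Optional[float] = None) -> None:
    """Record a newly created project; a duplicate project_id raises IntegrityError."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO projects VALUES (?, ?, ?, ?, ?, ?)",
            (project_id, business_owner, description, project_type, allowed_budget,
             datetime.now(timezone.utc).isoformat()),
        )


def list_projects(conn: sqlite3.Connection) -> list[str]:
    """Answer 'Can you provide a list of all your cloud projects?'."""
    return [row[0] for row in conn.execute("SELECT project_id FROM projects ORDER BY project_id")]


conn = open_store()
register_project(conn, "sales-analytics-prod", "jane.doe@example.com",
                 "Sales dashboards", "production", 1500.0)
register_project(conn, "hr-portal-dev", "john.roe@example.com",
                 "HR portal sandbox", "development", 200.0)
print(list_projects(conn))  # ['hr-portal-dev', 'sales-analytics-prod']
```

Because registration happens as part of the automated creation flow, the list stays current without anyone maintaining a spreadsheet.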

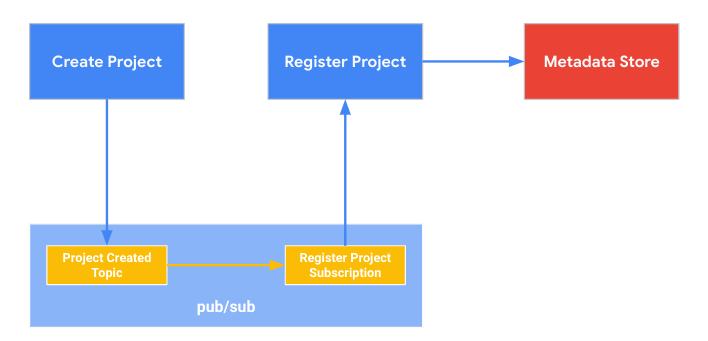

Earlier, we discussed decoupling as a key criterion. In practice, this means not using a single large automation code base, but instead building individual services that connect to each other through events or APIs. For this example, we use Pub/Sub as the integration middleware.
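The decoupling can be sketched with a tiny in-memory publish/subscribe bus that stands in for Pub/Sub. The project-creation pipeline only publishes a `project-created` event; the registry service subscribes to it and knows nothing about how projects are created. The topic name, payload fields, and the `InMemoryBus` class are hypothetical. Unlike real Pub/Sub (reachable from Python through the `google-cloud-pubsub` client library), this bus delivers synchronously, with no acknowledgements or retries.

```python
import json
from collections import defaultdict
from typing import Callable


class InMemoryBus:
    """Stand-in for Pub/Sub: each topic fans a message out to all its subscribers."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[bytes], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[bytes], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, data: bytes) -> None:
        # Synchronous, in-process delivery; real Pub/Sub is asynchronous and at-least-once.
        for handler in self._subscribers[topic]:
            handler(data)


registry: dict[str, dict] = {}  # stands in for the metadata store


def registry_service(data: bytes) -> None:
    """Subscriber: store the announced project in the metadata store."""
    event = json.loads(data)
    registry[event["project_id"]] = event


bus = InMemoryBus()
bus.subscribe("project-created", registry_service)

# The project-creation step only announces what happened; it does not call the registry.
bus.publish("project-created", json.dumps({
    "project_id": "sales-analytics-prod",
    "business_owner": "jane.doe@example.com",
}).encode("utf-8"))

print(sorted(registry))  # ['sales-analytics-prod']
```

Adding another service later means adding another subscriber to the same topic; the publisher does not change.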

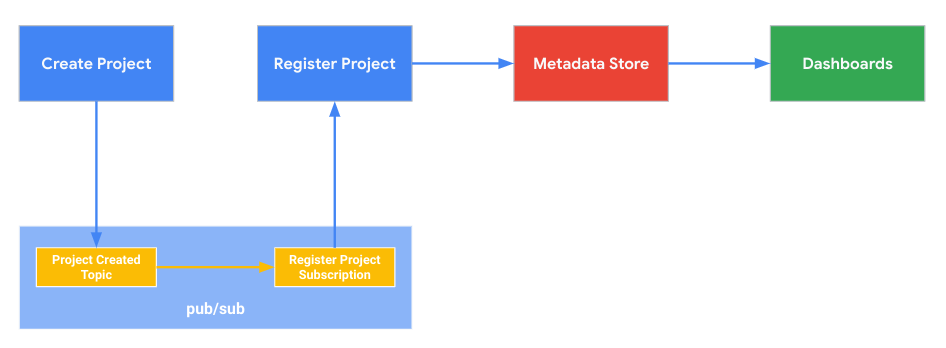

During the registration process, additional data can be stored, such as the business owner, description, project type, allowed budget, etc. As a first step, we make this information visible to the different stakeholders.
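One low-effort way to make the registry visible is a CSV export that stakeholders can open in a spreadsheet or load into a reporting tool. The rows below are made-up sample data shaped like the registration fields listed above, and `to_csv` is a hypothetical helper built on Python’s standard `csv` module; a dashboard on top of the metadata store would serve the same purpose.

```python
import csv
import io

# Made-up sample rows with the registration fields (owner, description, type, budget).
projects = [
    {"project_id": "sales-analytics-prod", "business_owner": "jane.doe@example.com",
     "description": "Sales dashboards", "project_type": "production", "allowed_budget": 1500},
    {"project_id": "hr-portal-dev", "business_owner": "john.roe@example.com",
     "description": "HR portal sandbox", "project_type": "development", "allowed_budget": 200},
]


def to_csv(rows: list[dict]) -> str:
    """Render registry rows as CSV with a header line, sorted by project ID."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(sorted(rows, key=lambda row: row["project_id"]))
    return buffer.getvalue()


print(to_csv(projects))
```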

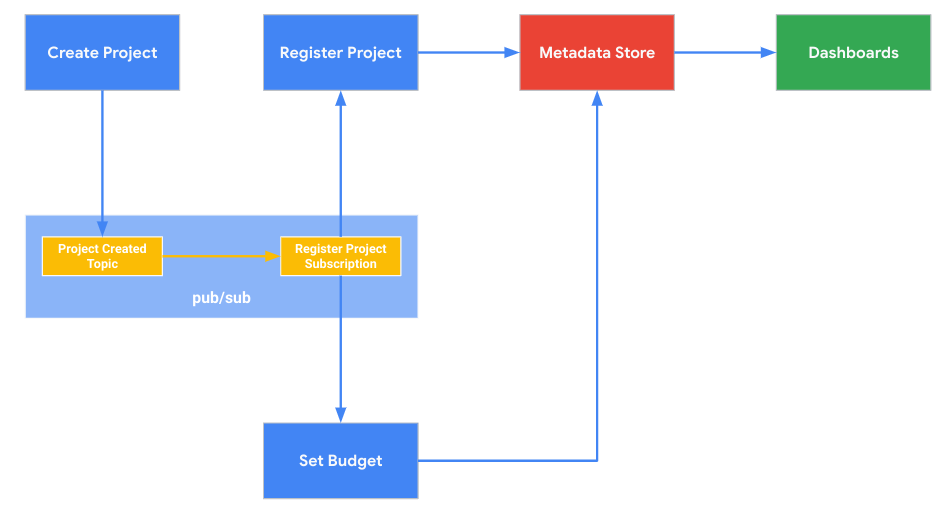

Because the allowed budget is captured during project creation, the solution can now be extended to set this budget programmatically, again using individual services, so that updating budgets is decoupled from the project creation logic.
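A sketch of a budget service that consumes the project-created event and turns the registered allowed budget into a budget definition. The dictionary is modeled on the `Budget` resource of the Cloud Billing Budget API v1 (`displayName`, `budgetFilter`, `amount.specifiedAmount`, `thresholdRules`, `notificationsRule.pubsubTopic`); verify the field names against the API reference before relying on them. The event fields, default thresholds, and currency are assumptions, and the API call that actually submits the budget is left out.

```python
import json
from typing import Optional

# Hypothetical default alert thresholds, as fractions of the budget (50%, 90%, 100%).
DEFAULT_THRESHOLDS = (0.5, 0.9, 1.0)


def build_budget_request(event: dict, currency: str = "EUR",
                         alert_topic: Optional[str] = None) -> dict:
    """Map a project-created event onto a Budget-like request body."""
    budget = {
        "displayName": f"{event['project_id']}-budget",
        # Assumption: the filter references the project by number ("projects/<number>").
        "budgetFilter": {"projects": [f"projects/{event['project_number']}"]},
        "amount": {
            "specifiedAmount": {
                "currencyCode": currency,
                # Whole units only; int64 values are encoded as strings in JSON.
                "units": str(int(event["allowed_budget"])),
            }
        },
        "thresholdRules": [{"thresholdPercent": t} for t in DEFAULT_THRESHOLDS],
    }
    if alert_topic:
        # Alerts published to this topic can drive further automated services.
        budget["notificationsRule"] = {"pubsubTopic": alert_topic}
    return budget


event = {"project_id": "sales-analytics-prod", "project_number": "123456789012",
         "allowed_budget": 1500}
print(json.dumps(build_budget_request(event), indent=2))
```

Keeping this mapping in its own service means budget policy (currency, thresholds, alert topic) can change without touching the project creation code.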

At this point, there is a list of projects, and for each project the automatically created budget can be consulted and reported. But how much was consumed over the past three months? Is the budget realistic or too permissive? Answering these questions requires making the billing data available in the metadata store.
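Once monthly billing totals are in the metadata store, checking whether a budget is realistic becomes a simple comparison with recent spend. This sketch compares the monthly budget with the average of the last three months. The ratio thresholds (a budget of at least twice the average counts as too permissive, one below the average as too tight) and the function name are hypothetical choices that each organization would tune.

```python
from statistics import mean


def assess_budget(monthly_budget: float, last_three_months: list[float],
                  permissive_ratio: float = 2.0, tight_ratio: float = 1.0) -> str:
    """Classify a monthly budget against the average actual spend of the last three months."""
    if len(last_three_months) != 3:
        raise ValueError("expected exactly three monthly cost figures")
    average = mean(last_three_months)
    if average == 0:
        return "no-usage"  # nothing to compare against yet
    ratio = monthly_budget / average
    if ratio >= permissive_ratio:
        return "too-permissive"
    if ratio < tight_ratio:
        return "too-tight"
    return "realistic"


print(assess_budget(1500, [400, 500, 600]))     # too-permissive (budget is 3x average spend)
print(assess_budget(1500, [1200, 1300, 1400]))  # realistic
print(assess_budget(1000, [1100, 1200, 1300]))  # too-tight
```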

Slowly, the solution grows, and so does its value for the organization. The metadata store accumulates more data, which can be used to create new services:

- When budget alerts are triggered, a Pub/Sub integration can perform actions (for instance, turning off virtual machines) and send notifications, customized with configuration stored in the metadata store.

- A service can be developed to send automated notifications to project owners when budget alerts are defined too permissively.

- A service can be developed to have project owners periodically review consumption and budget alerts.

- And much much more…
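A sketch of the first service in this list: a handler for budget alert messages that looks up per-budget settings in the metadata store and decides which actions to take. The payload fields `budgetDisplayName` and `alertThresholdExceeded` follow the format documented for Cloud Billing programmatic budget notifications (worth double-checking against the current documentation); the `ALERT_CONFIG` layout, addresses, and action names are hypothetical. The handler only plans the actions, so that stopping virtual machines can be carried out by a separate, product-specific service, in line with keeping execution close to the product.

```python
import json

# Hypothetical per-budget settings, as they might be stored in the metadata store.
ALERT_CONFIG = {
    "sales-analytics-prod-budget": {"notify": ["jane.doe@example.com"], "stop_vms_at": None},
    "hr-portal-dev-budget": {"notify": ["john.roe@example.com"], "stop_vms_at": 1.0},
}


def plan_actions(message_data: bytes) -> list[str]:
    """Turn one budget notification payload into a list of planned actions."""
    alert = json.loads(message_data)
    config = ALERT_CONFIG.get(alert.get("budgetDisplayName"))
    # The threshold field is only present once a threshold has been crossed.
    threshold = alert.get("alertThresholdExceeded")
    if config is None or threshold is None:
        return []
    actions = [f"notify:{address}" for address in config["notify"]]
    stop_at = config["stop_vms_at"]
    if stop_at is not None and threshold >= stop_at:
        actions.append("stop-vms")  # to be executed by a separate, product-specific service
    return actions


payload = json.dumps({
    "budgetDisplayName": "hr-portal-dev-budget",
    "alertThresholdExceeded": 1.0,
    "costAmount": 205.3,
    "budgetAmount": 200.0,
    "currencyCode": "EUR",
}).encode("utf-8")
print(plan_actions(payload))  # ['notify:john.roe@example.com', 'stop-vms']
```

Changing who gets notified, or at which threshold machines are stopped, then becomes a data change in the metadata store rather than a code change.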

This example illustrates the concept, and the possibilities are endless. It starts from Infrastructure-as-Code and moves into FinOps, but it can expand to auditing and control, policy enforcement, self-servicing, … This is where each organization defines its own priorities and journey. Based on experience, I will end with a few good practices to consider as you get started and move forward:

- A complete solution needs much more than integration and a data store; other capabilities include dashboards, self-servicing, machine learning, alerting, …

- A key component, however, is the data model, which needs to be designed for evolution

- Make sure to document the solution, and look at how code can be leveraged so that others can contribute

- Also focus on automation for the platform’s own delivery pipeline

- Use serverless cloud features when you can

- And include error handling and monitoring from the start, for when the solution doesn’t work as intended
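One possible way to keep the data model open to evolution is to version every record and upgrade older records step by step when they are read. The schema changes below (splitting an `owner` field, adding a cost center) and the helper names are purely hypothetical examples.

```python
# Hypothetical upgrade steps: each function moves a record one schema version forward.
def _v1_to_v2(record: dict) -> dict:
    # v2 replaces the single "owner" field with business and technical owners.
    upgraded = {key: value for key, value in record.items() if key != "owner"}
    upgraded["business_owner"] = record["owner"]
    upgraded["technical_owner"] = None
    return upgraded


def _v2_to_v3(record: dict) -> dict:
    # v3 adds an optional cost-center field.
    return {**record, "cost_center": record.get("cost_center")}


UPGRADES = {1: _v1_to_v2, 2: _v2_to_v3}
CURRENT_VERSION = 3


def upgrade(record: dict) -> dict:
    """Apply upgrade steps until the record reaches CURRENT_VERSION."""
    version = record.get("schema_version", 1)  # records without a version are treated as v1
    while version < CURRENT_VERSION:
        record = UPGRADES[version](record)  # each step returns a new dict
        version += 1
        record["schema_version"] = version
    return record


print(upgrade({"project_id": "hr-portal-dev", "owner": "john.roe@example.com"}))
```

Old records never need a big-bang migration, and each new field only requires one additional small upgrade step.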

The original article was published on Medium.