By Kishore Jagannath. Sep 20, 2022

Network endpoint groups (NEGs) aggregate backend services deployed across multiple environments behind a Google Cloud load balancer, so that those services can leverage the superior network performance and security offered by Google. They enable multiple use cases, and a good understanding of NEGs is essential when architecting your service deployments.

Introduction

Traditionally, load balancers distribute and balance requests across instances. A load balancer typically fronts a group of similar services running on different physical or virtual machines, distributing traffic among them with a load distribution algorithm. These load balancers assumed one service per instance, which held true until the age of containers. Containers changed how application services are run: an entire application service can be packaged as an image and run anywhere, listening on a specific port, and multiple instances of a containerized service can run on the same machine. As container-based workloads gained significant popularity and adoption, load balancers needed to support scalable application services listening on a port, irrespective of the instances they run on. Network endpoint groups (NEGs) were introduced for this purpose: a NEG represents a group of scaled service endpoints, each listening on a port. In short, NEGs let us move from instance-based load balancing to service-based load balancing.
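To make the difference concrete, here is a toy Python model (not how any GCP load balancer is implemented). It assumes two hypothetical VMs, one running two containers and one running a single container, with each container being an (IP, port) endpoint. Instance-based round robin spreads requests per VM, so the lone container receives twice the load; endpoint-based round robin, which is what a NEG makes possible, spreads them per container. All names, IPs and ports are made up for illustration.

```python
from collections import Counter
from itertools import cycle

# Hypothetical layout: vm-a runs two containers, vm-b runs one.
# Each container is an endpoint identified by (ip, port).
LAYOUT = {
    "vm-a": [("10.0.0.2", 8080), ("10.0.0.2", 8081)],
    "vm-b": [("10.0.0.3", 8080)],
}


def instance_based(n_requests: int) -> Counter:
    """Round-robin over VMs; each VM then round-robins over its own containers."""
    vms = cycle(LAYOUT)  # cycles over the VM names
    per_vm = {vm: cycle(eps) for vm, eps in LAYOUT.items()}
    hits = Counter()
    for _ in range(n_requests):
        vm = next(vms)
        hits[next(per_vm[vm])] += 1
    return hits


def endpoint_based(n_requests: int) -> Counter:
    """Round-robin directly over every (ip, port) endpoint, as a NEG exposes them."""
    endpoints = cycle([ep for eps in LAYOUT.values() for ep in eps])
    hits = Counter()
    for _ in range(n_requests):
        hits[next(endpoints)] += 1
    return hits


print("instance-based:", dict(instance_based(12)))
print("endpoint-based:", dict(endpoint_based(12)))
```

With 12 requests, the instance-based model sends 6 to the single container on vm-b and 3 to each container on vm-a, while the endpoint-based model sends 4 to each container.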

NEGs are typically configured as a group of scalable services behind a load balancer in GCP, so that they can be reached via a single endpoint. NEGs also let services running outside GCP (on-premises or at a different cloud provider) use the capabilities of Google Front Ends (GFEs) and Google's CDN network. GCP supports different types of NEGs for different workloads.

Types of NEGs in GCP

Let’s look at the different types of Network endpoint groups in GCP.

- Zonal NEG: NEGs for services running on VMs within a zone, including GKE pods.

- Serverless NEG: Enable serverless services such as Cloud Run, Cloud Functions, App Engine and API Gateway as backends of a Google Cloud load balancer.

- Internet NEG: NEGs that let a Google Cloud load balancer reach backends outside GCP.

- Hybrid NEG: Configure services deployed on-premises as backends of a Google Cloud load balancer.

- PSC NEG: Configure services running in other networks and projects as backends of a Google Cloud load balancer.
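The list above boils down to one question: where does the backend run? The sketch below encodes that decision as a lookup table. The enum and function names are hypothetical and the location descriptions paraphrase this article; it is not a GCP API.

```python
from enum import Enum


class BackendLocation(Enum):
    """Where the backend runs, paraphrasing the NEG list above."""
    ZONAL_VM_OR_GKE_POD = "Compute Engine VM in a zone, or a GKE pod"
    SERVERLESS = "Cloud Run, Cloud Functions, App Engine or API Gateway"
    OUTSIDE_GCP_PUBLIC = "outside GCP, reachable over the internet"
    ON_PREM_PRIVATE = "on-premises, reachable privately from GCP"
    OTHER_NETWORK_OR_PROJECT = "another VPC network or project, published via PSC"


# Illustrative mapping from backend location to the NEG type described above.
NEG_TYPE_FOR = {
    BackendLocation.ZONAL_VM_OR_GKE_POD: "Zonal NEG",
    BackendLocation.SERVERLESS: "Serverless NEG",
    BackendLocation.OUTSIDE_GCP_PUBLIC: "Internet NEG",
    BackendLocation.ON_PREM_PRIVATE: "Hybrid NEG",
    BackendLocation.OTHER_NETWORK_OR_PROJECT: "PSC NEG",
}


def choose_neg_type(location: BackendLocation) -> str:
    """Return the NEG type that fits a backend running at `location`."""
    return NEG_TYPE_FOR[location]


for loc in BackendLocation:
    print(f"{loc.value:55} -> {choose_neg_type(loc)}")
```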

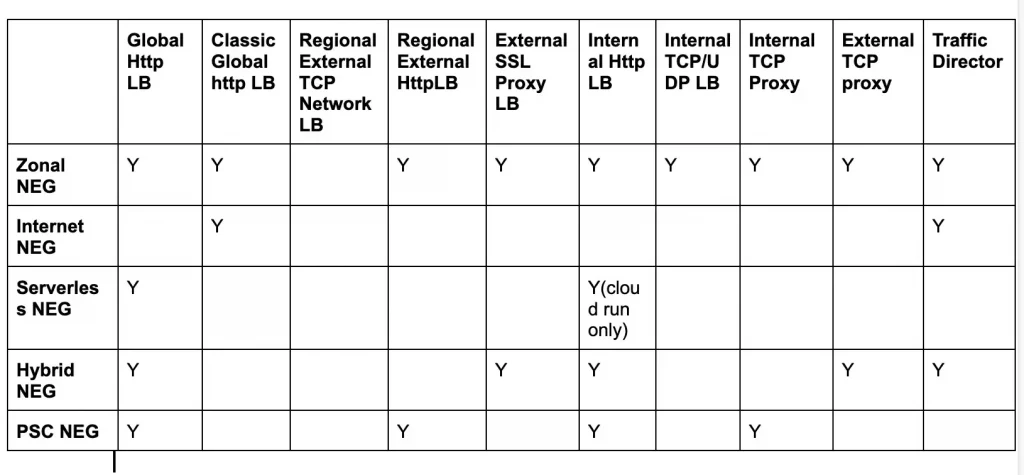

NEG support across GCP load balancers

The table below lists the NEGs supported by each GCP load balancer. Please refer to the GCP documentation for the latest information on load balancer support.

Details

Let’s get into the details of network endpoint groups by discussing the purpose of each NEG type and the use cases it enables.

Use case 1: Connect to multiple GKE pods directly (Zonal NEG for GKE)

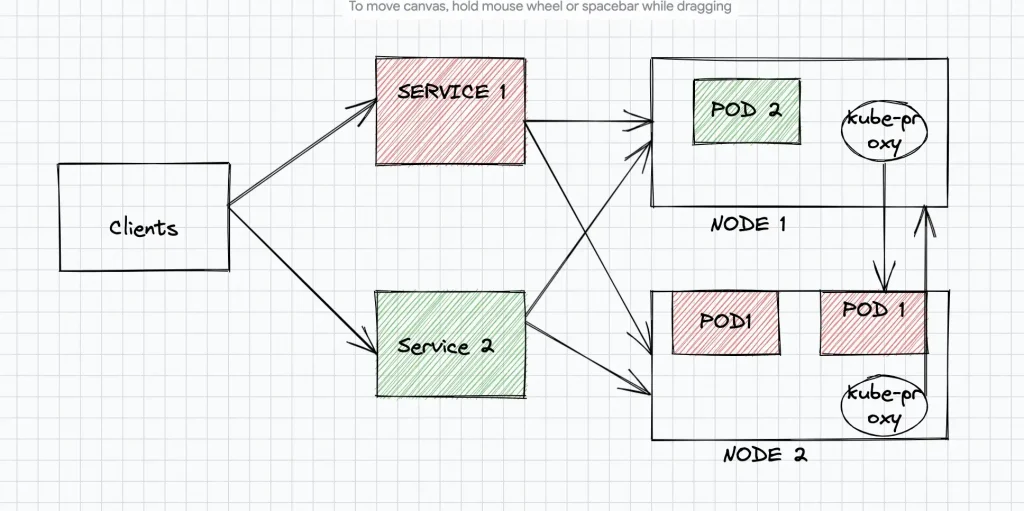

In Kubernetes, a Service exposes a set of pods that provide a specific functionality behind a stable internal IP address and port. The pods belonging to a Service can be scaled up or down, and they run on the nodes of a GKE cluster. A Service essentially acts as a load balancer, routing requests to the pods behind it in a specific fashion (for example, round robin). A typical Kubernetes Service routes inbound requests to pods in two hops: the request is first sent to one of the VM nodes in the GKE cluster, where kube-proxy forwards it to a pod backing the Service, possibly on a different node. So reaching the pod that actually serves the application takes two hops (service -> VM -> pod).

In the figure below, requests from clients reach the Kubernetes Service and are then sent to one of the backend instances (node pool) in the GKE cluster. The kube-proxy running on each node redirects the request to the correct pod via iptables rules.

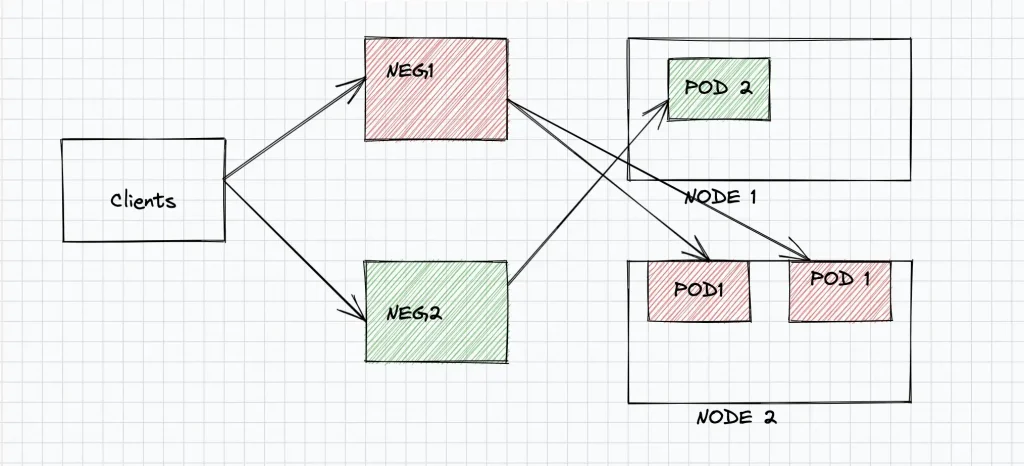

With NEGs, the pods are exposed directly via their "pod-ip:port". The load balancer sends requests to the pods directly, without going through kube-proxy on each node. Removing that extra hop results in lower latency and better performance. GKE also manages the NEG endpoints automatically as you scale the underlying pods up or down: new endpoints are added to the NEG during scale-up, and existing endpoints are removed during scale-down.
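GKE's NEG controller performs this bookkeeping for you. The sketch below is only a simplified model of the idea, assuming the NEG and the ready pods can both be represented as sets of (pod IP, port) pairs: diff the two sets to find which endpoints to attach and which to detach. The function name `reconcile` and the pod IPs are hypothetical.

```python
from typing import Set, Tuple

Endpoint = Tuple[str, int]  # (pod IP, container port)


def reconcile(
    neg_endpoints: Set[Endpoint], ready_pods: Set[Endpoint]
) -> Tuple[Set[Endpoint], Set[Endpoint]]:
    """Return (to_attach, to_detach) so the NEG matches the set of ready pods."""
    to_attach = ready_pods - neg_endpoints   # new pods, e.g. after a scale-up
    to_detach = neg_endpoints - ready_pods   # vanished pods, e.g. after a scale-down
    return to_attach, to_detach


# Hypothetical pod IPs: the NEG knows two pods, and a scale-up added a third.
current = {("10.4.0.5", 8080), ("10.4.0.6", 8080)}
after_scale_up = current | {("10.4.0.7", 8080)}
print(reconcile(current, after_scale_up))
```

Calling `reconcile(after_scale_up, current)` models the reverse case, a scale-down, where the third pod's endpoint is returned for detaching.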

A Kubernetes Service can be exposed as a NEG in GKE with a simple annotation in the Service's metadata:

    metadata:
      annotations:
        cloud.google.com/neg: '{"exposed_ports": {"80":{"name": "NEG_NAME"}}}'

Refer to the GKE documentation on standalone NEGs and container-native load balancing.
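Because the annotation value is a JSON document embedded in a string, it is easy to get the quoting wrong when editing it by hand. A small Python helper (hypothetical, not part of any GKE tooling) can generate it from a port-to-name mapping, for example when templating manifests. The NEG name `orders-neg` is made up.

```python
import json


def neg_annotation(port_to_neg_name: dict) -> dict:
    """Build the cloud.google.com/neg annotation for a Kubernetes Service.

    port_to_neg_name maps a Service port (int) to the standalone NEG name to use.
    """
    value = {
        "exposed_ports": {
            str(port): {"name": name} for port, name in port_to_neg_name.items()
        }
    }
    # The annotation value must be a JSON string, so serialise it instead of hand-quoting.
    return {"cloud.google.com/neg": json.dumps(value)}


# "orders-neg" is a hypothetical NEG name.
print(neg_annotation({80: "orders-neg"}))
```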

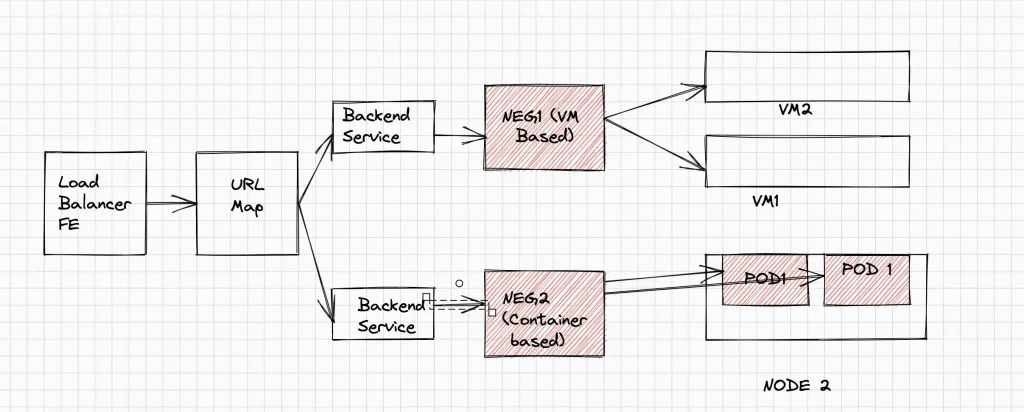

Use case 2: Container- and VM-based backends for the same service (Zonal NEG)

Zonal NEGs let a set of applications running on VMs be exposed as a group of endpoints behind a load balancer. Consider the case where you are transitioning from VMs to containers: some of your applications will be containerized, while others may continue running on VMs. You can use zonal NEGs containing VM endpoints alongside GKE container-based NEGs containing pod endpoints as backends. With this setup, a single load balancer can serve both VM-based and containerized workloads, as illustrated in the figure above.
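In both cases the load balancer only sees IP:port endpoints (GKE's container-native NEGs use the GCE_VM_IP_PORT endpoint type, and zonal NEGs holding VM endpoints can use the same type), so it does not need to know which runtime sits behind each endpoint. The toy model below shows one backend service drawing from a VM-based NEG and a GKE NEG. The class names, NEG names and addresses are hypothetical, and plain round robin stands in for the load balancer's real balancing mode.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import List, Tuple


@dataclass
class Neg:
    name: str
    source: str                       # "vm" or "gke", for illustration only
    endpoints: List[Tuple[str, int]]  # (ip, port) pairs


@dataclass
class BackendService:
    negs: List[Neg]

    def round_robin(self, n_requests: int) -> List[Tuple[str, str, int]]:
        """Spread requests over all endpoints of all NEGs, regardless of source."""
        pool = [(neg.source, ip, port) for neg in self.negs for ip, port in neg.endpoints]
        picker = cycle(pool)
        return [next(picker) for _ in range(n_requests)]


# Hypothetical NEGs: one of legacy VMs, one of GKE pods serving the same app.
legacy = Neg("legacy-vm-neg", "vm", [("10.0.1.10", 8080), ("10.0.1.11", 8080)])
pods = Neg("orders-gke-neg", "gke", [("10.4.0.5", 8080), ("10.4.0.6", 8080)])
service = BackendService([legacy, pods])
for source, ip, port in service.round_robin(4):
    print(source, f"{ip}:{port}")
```

As more of the application moves to GKE, endpoints shift from the VM NEG to the GKE NEG without any change on the load balancer's frontend.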

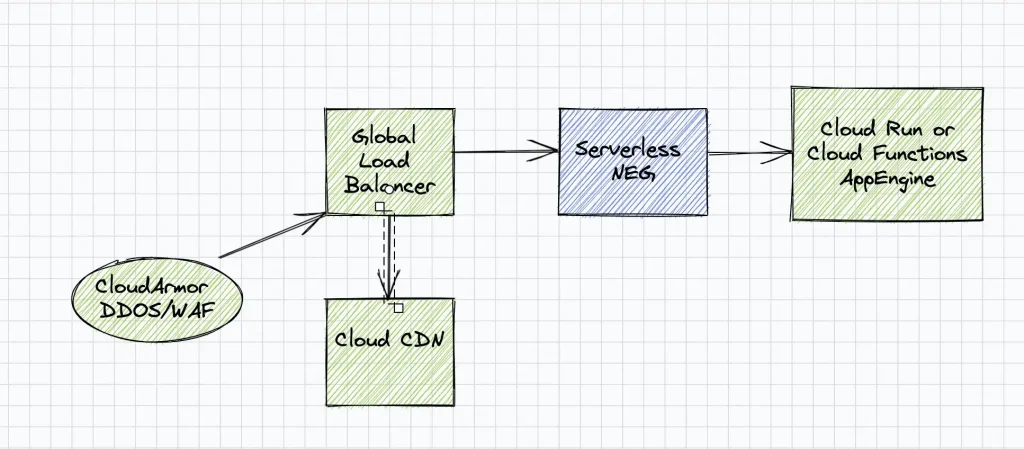

Use case 3: Expose serverless applications via a load balancer (Serverless NEG)

Consider the case where your applications are deployed in Cloud Run, Cloud Functions or App Engine serverless managed environments. While serverless endpoints can be exposed directly to the outside world for public access, security and performance can be a concern for widely used services. These services can be attached as serverless NEG backends and exposed via a global load balancer in GCP. A global load balancer improves performance by accepting requests close to the user at Google Front Ends (GFEs) and routing all traffic over Google's high-speed network. The load balancer can also be paired with Cloud CDN to cache static images and files and serve requests from the cache, which can improve performance drastically. From a security perspective, a global load balancer can be protected against DDoS and common web attacks (through WAF rules) via Cloud Armor policies configured on the load balancer. Thus serverless NEGs bring the performance and security of GCP's global load balancers to the serverless world.
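The benefit of caching in front of a serverless backend can be illustrated with a toy front end that counts how often the origin is actually called. This is a simplified model under stated assumptions: real Cloud CDN decides what to cache from its cache mode and response headers such as Cache-Control, not from file extensions, and the class name and origin function here are hypothetical.

```python
from typing import Callable, Dict


class CachingFrontEnd:
    """Toy stand-in for a load balancer with a CDN cache in front of a serverless origin."""

    def __init__(self, origin: Callable[[str], str],
                 cacheable_suffixes: tuple = (".png", ".jpg", ".css", ".js")):
        self.origin = origin
        self.cacheable_suffixes = cacheable_suffixes
        self.cache: Dict[str, str] = {}
        self.origin_calls = 0

    def get(self, path: str) -> str:
        if path in self.cache:            # cache hit: the origin is not contacted
            return self.cache[path]
        self.origin_calls += 1            # cache miss: forward to the serverless backend
        body = self.origin(path)
        if path.endswith(self.cacheable_suffixes):
            self.cache[path] = body       # keep static assets for later requests
        return body


# Hypothetical origin standing in for a Cloud Run service.
front = CachingFrontEnd(lambda path: f"content of {path}")
for path in ["/logo.png", "/logo.png", "/logo.png", "/api/orders", "/api/orders"]:
    front.get(path)
print("origin calls:", front.origin_calls)
```

Only the first request for the static image reaches the origin, while every dynamic API request still does.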

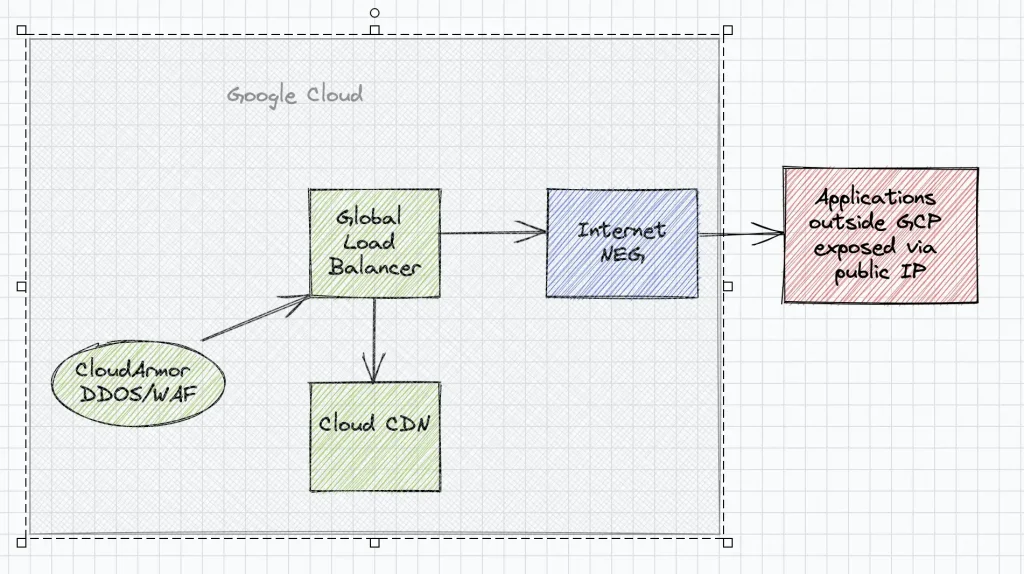

Use case 4: Leverage Google's network, CDN and DDoS protection for applications outside GCP (Internet NEG)

While serverless NEGs bring the capabilities of GCP load balancers to the serverless world, Internet NEGs let you expose backends running outside GCP via the load balancer. These backends are primarily services running on-premises or in another cloud provider's environment, exposed via a public IP address. Endpoints added to an Internet NEG must be publicly reachable over the internet from GCP. Internet NEGs are typically added as backends to a global load balancer in GCP to leverage the high performance of Google's network and CDN, and to guard against DDoS and web attacks with Cloud Armor. Thus Internet NEGs bring the performance and security features of GCP's global load balancers to on-premises applications and to applications hosted by other cloud providers (AWS/Azure).
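Internet NEG endpoints are specified as an IP address or a fully qualified domain name, plus a port. Before adding an IP endpoint, you might sanity-check that it is publicly routable. The helper below is a hypothetical pre-flight check built on Python's standard `ipaddress` module; it does not reproduce GCP's own validation and leaves FQDN endpoints out of scope.

```python
import ipaddress


def is_valid_internet_neg_ip(ip: str, port: int) -> bool:
    """Pre-flight check: is (ip, port) a plausible Internet NEG IP endpoint?

    The address must be publicly routable, since the load balancer reaches it
    over the internet; private and reserved ranges are rejected.
    """
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        return False  # not an IP literal (FQDN endpoints are out of scope here)
    return addr.is_global and 0 < port < 65536


for ip in ["8.8.8.8", "10.1.2.3", "192.168.0.10", "not-an-ip"]:
    print(ip, is_valid_internet_neg_ip(ip, 443))
```

A private address such as 10.1.2.3 fails this check; reaching it from a load balancer is the job of the hybrid NEG described in the next use case.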

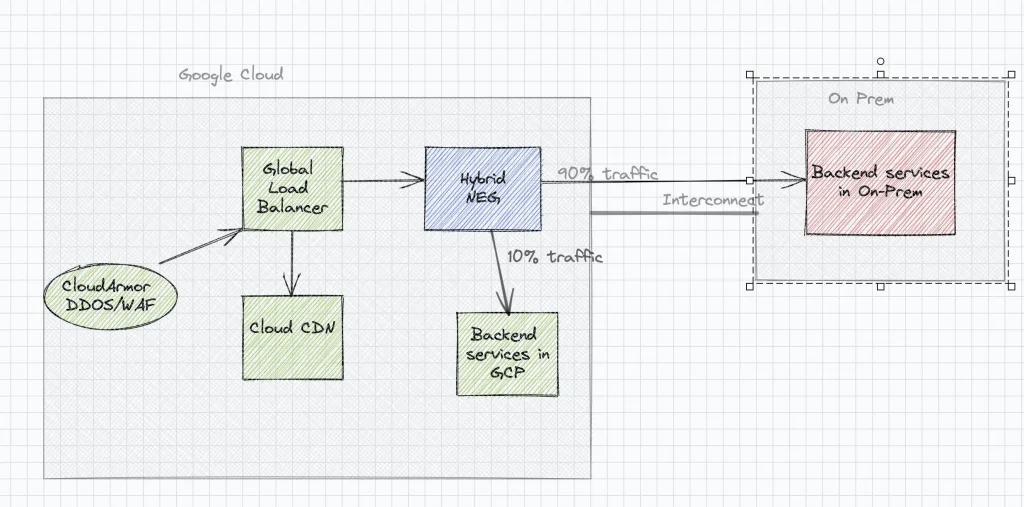

Use case 5: Split traffic between on-premises services and services in GCP (Hybrid NEG)

Consider the case where you are migrating your application services from on-premises (an organisation's traditional in-house data center) to GCP. After the migration, you want to adopt the DevOps best practice of canary releases in the production environment. Instead of exposing all your users, and therefore all requests, to the newly migrated service in the cloud, you direct a small portion of traffic to the backend service in GCP while the majority of traffic is still routed on-premises. The portion of traffic assigned to the migrated GCP backend service can then be increased gradually. This ensures that the migration to GCP follows a fail-safe canary release strategy.

Hybrid NEGs can be created to achieve this strategy in GCP. A load balancer backend service can point to a private endpoint residing on-premises. The prerequisite is that the on-premises private endpoint is reachable from GCP (for example, over a Cloud Interconnect connection). One backend can be a GCP-based service (instance groups, GKE nodes, etc.) and another a hybrid NEG pointing to on-premises private IP endpoints. Routing rules can then be configured to distribute traffic between the backends by weight.
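The weighted ramp-up can be sketched in Python. The helper names `route` and `simulate`, the request IDs and the ramp steps are hypothetical. A real load balancer performs the split itself based on the weights in its routing rules; bucketing `request_id % 100` is just a deterministic stand-in that makes the proportions easy to see.

```python
from collections import Counter


def route(request_id: int, gcp_percent: int) -> str:
    """Send gcp_percent% of requests to the migrated GCP backend, the rest on-premises.

    Bucketing request_id % 100 is a deterministic stand-in for the weighted
    split that the load balancer's routing rules would perform.
    """
    if not 0 <= gcp_percent <= 100:
        raise ValueError("gcp_percent must be between 0 and 100")
    return "gcp" if request_id % 100 < gcp_percent else "on_prem"


def simulate(gcp_percent: int, n_requests: int = 1000) -> Counter:
    """Count where n_requests consecutive request IDs are routed."""
    return Counter(route(i, gcp_percent) for i in range(n_requests))


# Hypothetical canary ramp: widen the GCP share step by step.
for step in (5, 25, 50, 100):
    counts = simulate(step)
    print(f"{step:3}% to GCP -> gcp={counts['gcp']}, on_prem={counts['on_prem']}")
```

If errors rise at any step, the rollback is simply lowering the GCP weight again, which is what makes the canary approach fail-safe.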

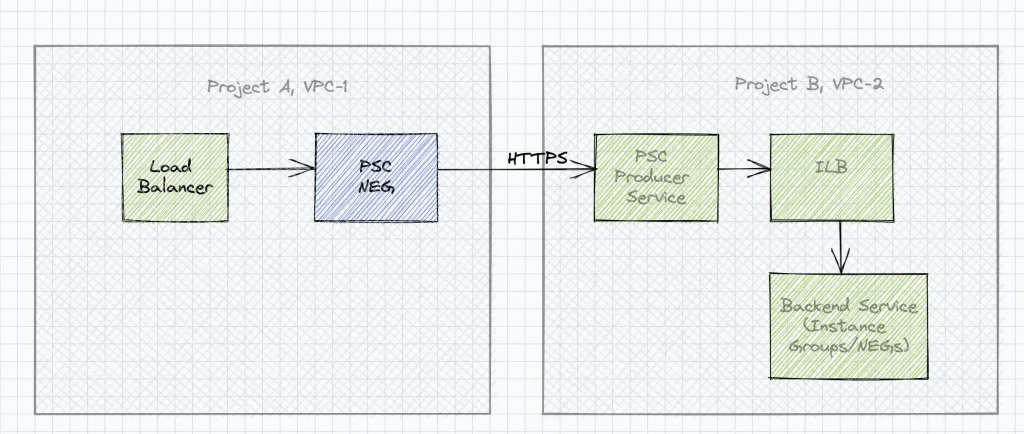

Use case 6: Expose services running in different networks / projects via a load balancer (PSC NEG)

One of the important features of Private Service Connect (PSC) is consuming services across network and project boundaries without the need for VPC peering. PSC lets you publish a service that sits behind a load balancer. On the producer side, any HTTPS service can be attached to an internal load balancer and published as a service. On the consumer side (in the same or a different project, even across organisations), you can create a PSC NEG as a backend of the supported load balancers (refer to the table above) by specifying the service URL of the published service running in the other network / project.

This enables an external load balancer to be managed in a different network and project from the producer service. Note: at the time of writing (September 2022), PSC backends on load balancers support only the HTTPS protocol.
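On the consumer side, the main thing a PSC NEG needs from the producer is the published service's service attachment URI, which has the form `projects/PROJECT/regions/REGION/serviceAttachments/NAME`. The parser below is a hypothetical helper for checking that shape before wiring the URI into a NEG definition; the project and service names in the example are made up.

```python
import re

# Shape of a service attachment URI as referenced by a consumer PSC NEG.
SERVICE_ATTACHMENT_URI = re.compile(
    r"^projects/(?P<project>[^/]+)/regions/(?P<region>[^/]+)"
    r"/serviceAttachments/(?P<name>[^/]+)$"
)


def parse_service_attachment(uri: str) -> dict:
    """Split a producer's service attachment URI into project, region and name."""
    match = SERVICE_ATTACHMENT_URI.match(uri)
    if match is None:
        raise ValueError(f"not a service attachment URI: {uri!r}")
    return match.groupdict()


# Hypothetical producer project and service names.
print(parse_service_attachment(
    "projects/producer-project/regions/us-central1/serviceAttachments/orders-api"
))
```

Because the consumer references the producer only through this URI, the two sides can live in different projects and VPC networks without peering.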

Conclusion

As discussed in this article, network endpoint groups are an important concept that enables various use cases in GCP. Remember that one of the primary functions of a NEG is to expose a group of services. Where these services reside and how they are exposed determine the type of NEG you need to create. Also, check the latest GCP documentation for NEG support across load balancers, since a lot of work is currently happening in PSC.

References

- Overview of NEGs

- Zonal NEGs

- Internet NEGs

- Serverless NEGs

- Hybrid NEGs

- PSC Overview

- PSC NEG

- Cloud Armor

The original article was published on Medium.