By guillaume blaquiere, Nov 23, 2021

Comparing serverless performance across regions for Cloud Run, Cloud Functions and BigQuery gives a first dimension for making choices. But cloud developers also need to choose a language to implement their applications. There are many options, and some products limit them, but every developer has preferences and opinions.

I started this comparison many months ago but, after comparing the results, I decided not to write this article, to avoid a pointless language war. However, after a discussion with Henrique Joaquim, who found my previous articles on serverless compute performance useful for his thesis, I chose to finish and release it to help him.

So, if the product and the region matter, does the language matter too?

The test protocol

I chose to compare the 4 most popular languages that are supported by the majority of products:

- NodeJS

- Python 3

- Java

- Golang

Of course, I’m not an expert in all of them, so I limited the tests to the Fibonacci algorithm (in its recursive form).
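To make the workload concrete, here is a minimal Go sketch of a recursive Fibonacci function. It illustrates the technique only; it is not the exact code from the test repository, and the name fib is my own choice.

```go
package main

import "fmt"

// fib returns the n-th Fibonacci number using the naive recursive definition:
// F(0) = 0, F(1) = 1, F(n) = F(n-1) + F(n-2).
// Each call for n >= 2 triggers two more calls, so the work grows
// exponentially with n, which makes this a CPU-bound benchmark.
func fib(n int) int {
	if n < 2 {
		return n
	}
	return fib(n-1) + fib(n-2)
}

func main() {
	// Small input for a quick check; the tests in this article use n = 43,
	// which takes much longer because of the exponential number of calls.
	fmt.Println(fib(10)) // prints 55
}
```

Nothing is cached between calls, on purpose: the goal is to measure raw function-call and arithmetic speed in each language.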

I also chose to compare the performance on Cloud Functions and Cloud Run.

The protocol is simple:

- Deploy the Cloud Run and Cloud Functions services in the same region (us-central1 in my case) with the same 2GB of memory and 1 vCPU

- Run a dummy query to avoid the cold start

- Run the same Fibonacci complexity (43 in my case) 3 times in a row

- Gather and compile the results

To reproduce the test yourself, follow the README.md file, which explains how to deploy, run the test and clean up your environment.

The test result

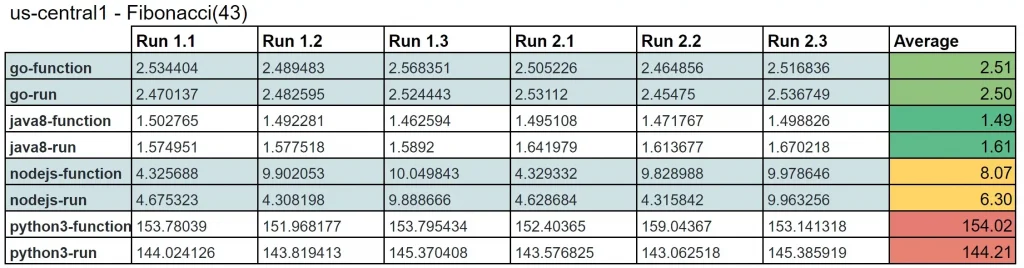

After 2 performance test runs, I got these results (the raw data are in the result.csv file).

We can observe 2 unexpected things:

- Cloud Run is always slightly faster than Cloud Functions (as expected), except in Java.

I suspect this comes from JVM flags that are added by default when the Cloud Functions container is built (automatically, by Google Cloud and Buildpacks) and that I didn’t add in the Procfile.

- NodeJS results vary by a factor of 2: fast once and slow twice on Cloud Functions, fast twice and slow once on Cloud Run.

I ran the tests several times and always got this result. I’m unable to explain it, especially because NodeJS is my weakest language of the 4. Suggestions are welcome!

Apart from these 2 unexpected things, we can conclude, without surprise, that compiled languages (Golang, Java) are faster than interpreted languages (Python, NodeJS).
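A quick count shows how heavy Fibonacci(43) is with the naive recursion. Writing F for the Fibonacci sequence (F(0) = 0, F(1) = 1) and C(n) for the number of calls needed to compute F(n), each call for n ≥ 2 triggers two more calls. Adding 1 to C(n) turns its recurrence into a Fibonacci recurrence, and since C(0) + 1 = C(1) + 1 = 2 = 2F(1) = 2F(2), the closed form follows:

```latex
C(0) = C(1) = 1, \qquad C(n) = C(n-1) + C(n-2) + 1 \quad (n \ge 2)

C(n) + 1 = \bigl(C(n-1) + 1\bigr) + \bigl(C(n-2) + 1\bigr)
\;\Rightarrow\; C(n) + 1 = 2F(n+1) \;\Rightarrow\; C(n) = 2F(n+1) - 1

C(43) = 2F(44) - 1 = 2 \times 701\,408\,733 - 1 = 1\,402\,817\,465
```

That is about 1.4 billion function calls for a single request, so the benchmark mostly measures the cost of a function call. This likely explains part of the gap, as a function call is much more expensive in an interpreter such as CPython than in compiled Go or JIT-compiled Java.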

However, the size of the performance differences surprised me (I kept both NodeJS values, because I don’t know which one is the most realistic):

- I didn’t expect Java to come first!

- I didn’t expect Python to be 100 times slower than Java!!!

Other comparison input

Processing performance isn’t the only point of comparison between languages. Focusing only on Cloud Run, we can also compare:

- The container size

- The memory footprint

- The cold start duration

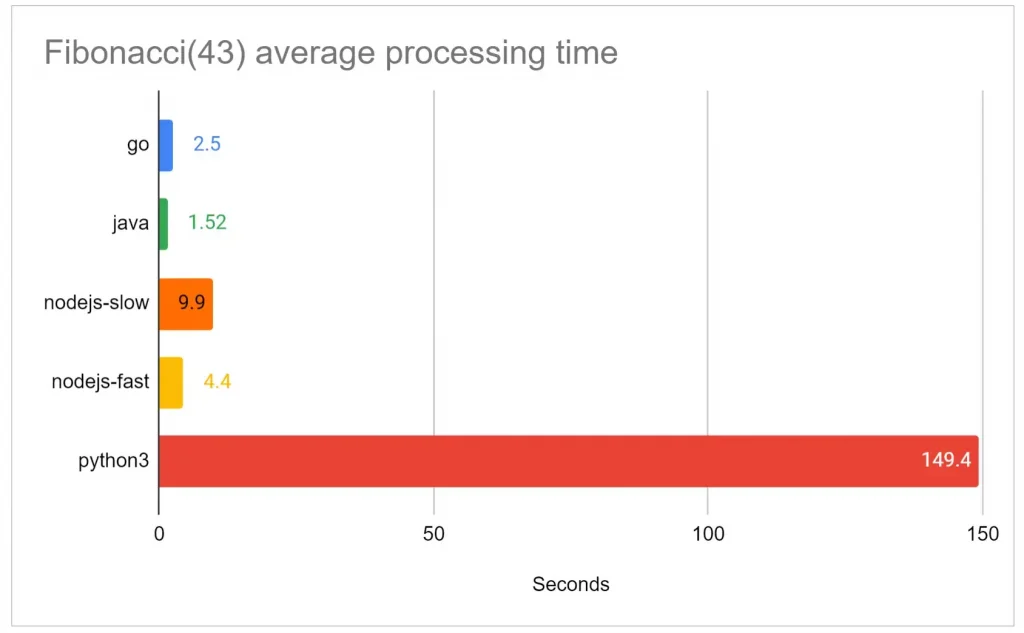

The container size comparison

The container size doesn’t affect Cloud Run service startup, but it does affect deployment time. The same is true of other container orchestration services, such as Kubernetes and GKE.

It also has an impact on cost: larger images take more storage space and, consequently, cost more!

The automatically built containers stay below 100MB, except for Java, which needs the whole (and heavy) JVM.

Here, I compare the default containers created automatically by Cloud Run, without custom optimization. You can easily reduce (or increase) the size of the containers by customizing how they are built, with a Dockerfile for instance.

You can find the container images built automatically by Cloud Run in Artifact Registry, in the cloud-run-source-deploy repository.

Select the image you want and check its size.

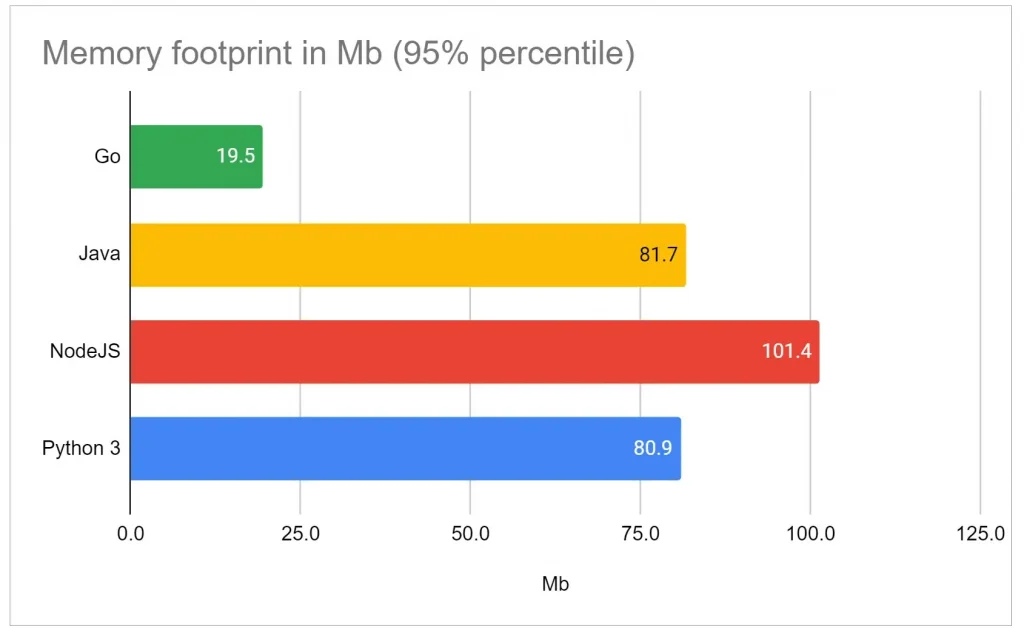

The memory footprint comparison

Memory can be critical for large applications. Here, it’s only a very small, basic application with few or no dependencies.

However, the larger the service’s memory footprint, the more memory you have to allocate, and the more expensive it is.

I took the 95th percentile from the Cloud Run memory metric graphs.
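For reference, here is a minimal Go sketch of a 95th percentile computed from raw samples with the nearest-rank method. It is an illustration under my own assumptions: Cloud Monitoring may compute the percentiles shown in its graphs differently (for example, from bucketed distribution data), so its values can differ from this method. The function name percentile is my own.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// percentile returns the p-th percentile (0 < p <= 100) of samples using the
// nearest-rank method: the smallest sample such that at least p% of all
// samples are less than or equal to it.
func percentile(samples []float64, p float64) (float64, error) {
	if len(samples) == 0 {
		return 0, fmt.Errorf("no samples")
	}
	if p <= 0 || p > 100 {
		return 0, fmt.Errorf("percentile %v is outside (0, 100]", p)
	}
	sorted := append([]float64(nil), samples...) // copy: leave the input untouched
	sort.Float64s(sorted)
	// Multiplying before dividing keeps the rank exact for whole-number p (e.g. 95).
	rank := int(math.Ceil(p * float64(len(sorted)) / 100))
	return sorted[rank-1], nil
}

func main() {
	// Toy values 1..20, not real measurements.
	samples := make([]float64, 20)
	for i := range samples {
		samples[i] = float64(i + 1)
	}
	p95, err := percentile(samples, 95)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(p95) // prints 19
}
```

A high percentile rather than the maximum ignores rare spikes while still reflecting the memory the service needs most of the time.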

Thanks to its efficient compilation, Go is very light.

Java, which has a bad reputation for memory usage, is not so bad when no library or framework is used.

NodeJS and Python aren’t so bad either.

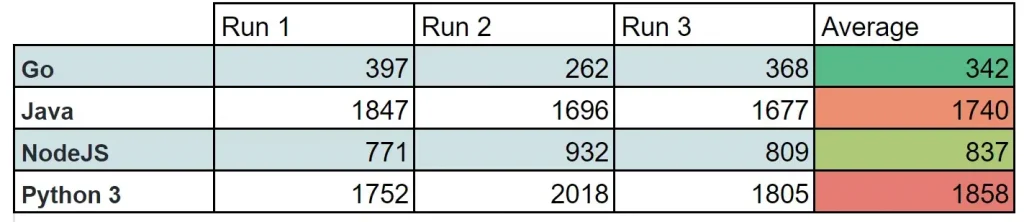

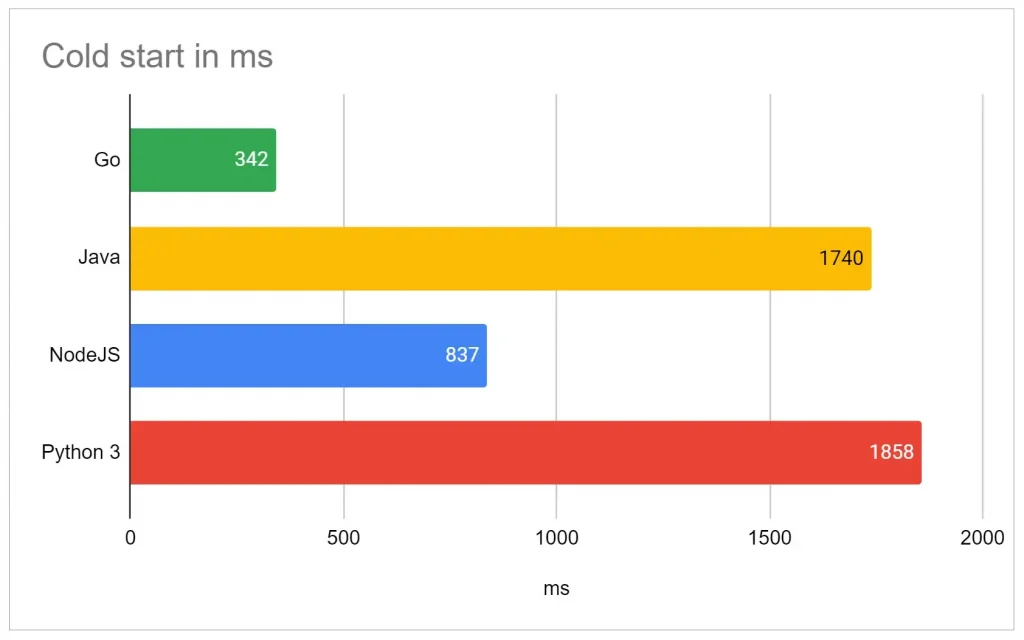

The cold start duration comparison

Most of the time, cold start isn’t critical for real workloads, but sometimes it is! If the service is too slow to start a new instance, you need additional features, like min instances, to mitigate it, and of course that has an impact on the service cost.

I previously compared Java cold starts on Cloud Run, in particular with and without a framework. Here, the tests are run without a framework.

The results come from Cloud Logging: the latency of the first request after a deployment. I deployed 3 times and, each time, recorded the first request, which computed Fibonacci(1).

Go is the quickest, and NodeJS also performs very well.

Unsurprisingly, Java is above 1.5s.

The surprise comes from Python. Maybe the gunicorn server causes this latency. Suggestions are welcome!

The final comparison

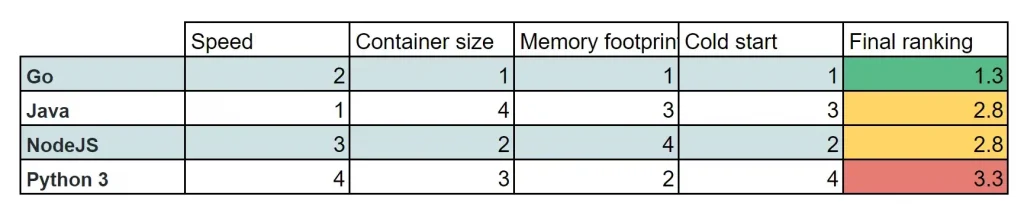

In the end, if we combine all the rankings (without giving any criterion priority), we get this result.
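Combining rankings without priority can be read as an unweighted sum of ranks. The Go sketch below assumes that approach: each criterion counts equally, 1 is the best rank, and every candidate is ranked on every criterion. The function name overallRanking is my own, and the criteria and candidates in main are placeholders, not the data from the figure.

```go
package main

import (
	"fmt"
	"sort"
)

// overallRanking sums each candidate's rank (1 = best) across all criteria,
// with every criterion weighted equally, and returns the candidates ordered
// from lowest (best) to highest total. Ties are broken alphabetically so the
// output is deterministic despite Go's random map iteration order.
func overallRanking(rankings map[string]map[string]int) []string {
	totals := make(map[string]int)
	for _, ranksByCandidate := range rankings {
		for candidate, rank := range ranksByCandidate {
			totals[candidate] += rank
		}
	}
	names := make([]string, 0, len(totals))
	for name := range totals {
		names = append(names, name)
	}
	sort.Slice(names, func(i, j int) bool {
		if totals[names[i]] != totals[names[j]] {
			return totals[names[i]] < totals[names[j]]
		}
		return names[i] < names[j]
	})
	return names
}

func main() {
	// Placeholder criteria and candidates, not the article's results.
	rankings := map[string]map[string]int{
		"speed":      {"A": 1, "B": 2, "C": 3},
		"cold start": {"A": 2, "B": 1, "C": 3},
		"memory":     {"A": 1, "B": 3, "C": 2},
	}
	fmt.Println(overallRanking(rankings)) // prints [A B C]
}
```

Giving a criterion a priority would simply mean multiplying its ranks by a weight before summing them.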

Go is a modern language developed in the cloud, for the cloud, and we can see that it is very well suited to serverless environments and microservices architectures.

Java and NodeJS have their strengths and weaknesses, and deserve serious consideration.

Python is my biggest surprise: it’s at the bottom of the ranking.

Considering Python’s worldwide popularity and its poor performance here, I’m wondering about computation efficiency, energy savings and global warming…

However, I can’t explain Python’s poor ranking, and I would be happy to hear from Python experts and maybe update this article with some fixes.

Anyway, when choosing a development language, I think it’s important to consider several other aspects in addition to these results:

- Developers’ knowledge, skills and preferences

- Developer availability and the ability to hire new talent to grow the development team

- Library maturity and documentation

- Efficiency and reliability of the development loop

One size doesn’t fit all and it depends on your context. So, choose wisely with that additional insight in mind!

The original article was published on Medium.