By Animesh Rastogi.Sep 19, 2022

Realtime Ingestion of your Data from your clients to a Data Warehouse like BigQuery can be critical to making your latest business data immediately available for analysis.

In GCP, you essentially use a combination Cloud Pub/Sub which is used for streaming analytics and data integration pipelines to ingest and distribute data. It’s equally effective as a messaging-oriented middleware for service integration or as a queue to parallelize tasks and intermediate Dataflow jobs before your data could be ingested into BigQuery with the proper schema. While Dataflow pipelines (including ones built with Dataflow Templates) get the job done well, sometimes they can be more than what is needed for use cases that simply require raw data with no transformation to be exported to BigQuery.

Luckily, GCP announced a new Subscriber Type called BigQuery Subscription which writes data directly from Pub/Sub to BigQuery. This new extract, load, and transform (ELT) path will be able to simplify your event-driven architecture. For Pub/Sub messages where advanced preload transformations or data processing before landing data in BigQuery (such as masking PII) is necessary, you would still need Dataflow in the middle though.

Now, let’s go ahead and create our pipeline. We shall do complete following broad tasks:

- Creating our Client (Node js) and Deploying it with Cloud Run Jobs.

- Creating a BQ Dataset and Table

- Configuring Pub/Sub

Creating the Client and Deploying to Cloud Run Jobs

We shall be using faker js (RIP Aaron Swartz) to generate massive amounts of fake(but realistic) transactions data.

Ensure that you create a .env file in your root folder with TOPIC_NAME and PROJECT_ID values set.

Now we need to containerise the client, push the container to artifact registry and deploy it as a Cloud Run Job.

Build your container and push it to Artifact Registry. You can refer to documentation here.

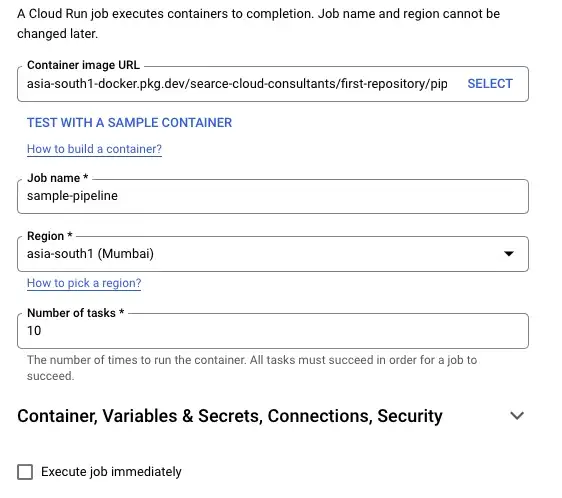

Navigate to Cloud Run in GCP Console and Select Jobs. Click on Create Job and fill in the details like below:

Don’t check the Execute job immediately checkbox. Click on Create

Creating a BQ Dataset and Table

Navigate to BigQuery in the GCP Console and Click on the ⋮ icon next to your project and Click on Create Dataset

Once you create your dataset, run the following query to create your table:

Configuring Pub/Sub

Navigate to Pub/Sub in GCP Console and Click on Schema from the left sidebar.

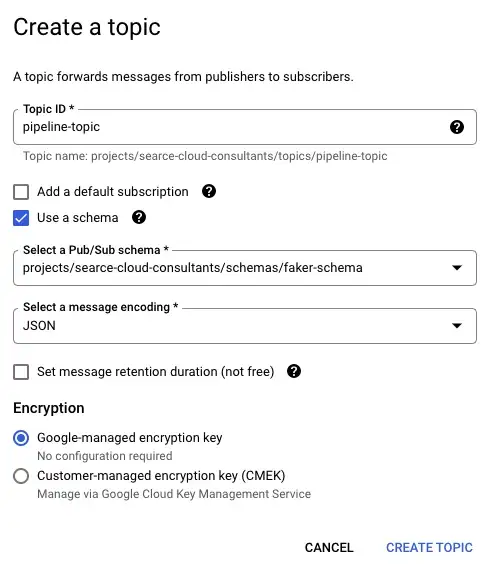

Click on Create Schema from the top. Give it a name and select Avro for Schema Type. Add the following for schema definition:

Once the schema is created, click on Create Topic, enter all the details and Click on Create.

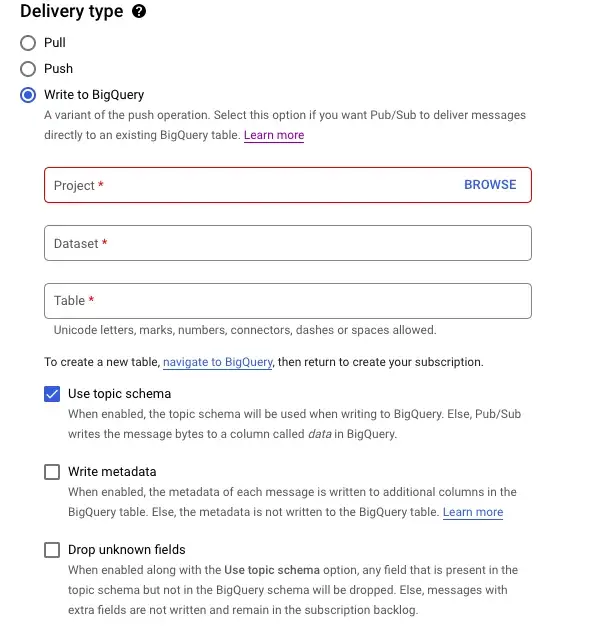

Go to Subscriptions and click on Create Subscription from the top. Give the subscription a name and add all the details from previous steps.

Once you create the subscription, that’s it. You’re done with the setup. Now let’s see it in action.

Go to Jobs in Cloud Run. Select the job you created previously and click on Execute.

You should now start seeing entries in your BQ Table. Once the Cloud Run Job is finished, run the following query in BQ to check the number of records ingested:

SELECT COUNT(account) FROM `<project_id>.<dataset_name>.<table_name>`

Summary

In this blog, we created a simple realtime pipeline to push data from Pub/Sub directly to BigQuery without having to use Dataflow in the middle. This is great for use cases where you don’t require additional processing in flight before storage.

With this new subscription type, you no longer need to pay for data ingestion into BigQuery when using this new direct method. You only pay for the Pub/Sub you use. This is much cheaper compared to the using Dataflow pipeline where you would be paying for the Pub/Sub read, Dataflow job, and BigQuery data ingestion using Storage Write API.

Reference

- No pipelines needed. Stream data with Pub/Sub direct to BigQuery

- Google Cloud BigQuery subscriptions

- Github-Automation Scripts/Streaming Pipline GCP

- The Brilliant Life and Tragic Death of Aaron Swartz

The original article published on Medium.