By Raju Dawadi, Mar 1, 2021

Docker is becoming the de facto standard for modern software deployment as well as development. From build pipelines to CI/CD, Docker adoption is increasing rapidly. Even workflow automation tools like GitHub Actions rely heavily on Docker.

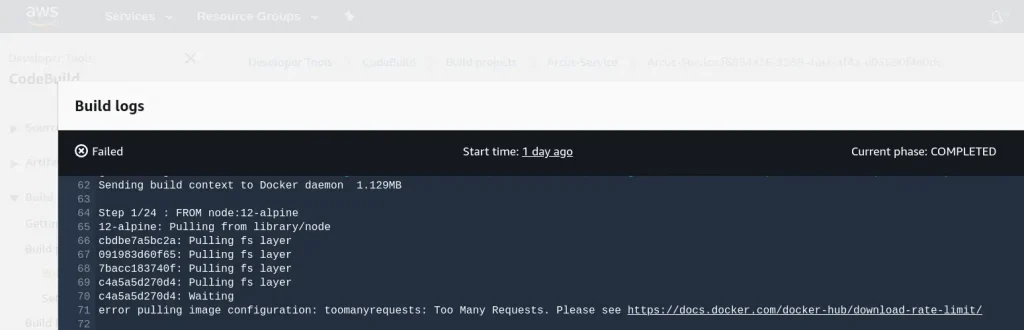

But with the recent introduction of Docker Hub pull rate limits, a large number of users are going to be impacted. The cap of 100 pulls per 6 hours for anonymous users and 200 pulls per 6 hours for authenticated users is surely going to affect existing workflows. The limits were expected to come into effect from November 2020, and we soon started hitting the cap while using build services.
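Docker documents a way to see how close you are to the cap: fetch a token for the `ratelimitpreview/test` image, send a HEAD request for its manifest, and read the `ratelimit-limit` and `ratelimit-remaining` response headers, where `w=21600` is the 6-hour window in seconds. Below is a minimal sketch of that check, assuming `curl` and `jq` are installed; `parse_remaining` and `check_docker_hub_limit` are hypothetical helper names, and only the header parsing works offline.

```shell
#!/usr/bin/env bash
# Sketch: check remaining anonymous Docker Hub pulls for this IP address.

# Reads HTTP response headers on stdin and prints the remaining pull count
# from a header such as "ratelimit-remaining: 76;w=21600".
parse_remaining() {
  awk -F'[:;]' 'tolower($1) == "ratelimit-remaining" {
    gsub(/[ \r]/, "", $2)   # drop the leading space and any trailing CR
    print $2
  }'
}

# Requests an anonymous token, then inspects the headers of a HEAD request
# on the test image's manifest (requires network access, curl and jq).
check_docker_hub_limit() {
  local image="ratelimitpreview/test"
  local token
  token=$(curl -s "https://auth.docker.io/token?service=registry.docker.io&scope=repository:${image}:pull" | jq -r .token)
  curl -s --head -H "Authorization: Bearer ${token}" \
    "https://registry-1.docker.io/v2/${image}/manifests/latest" | parse_remaining
}
```

Running `check_docker_hub_limit` from a build machine shows how many anonymous pulls that shared IP address has left in the current window.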

Because build services like Google Cloud Build, AWS CodeBuild and GitHub Actions use shared IP addresses unless they sit behind your own NAT, it's easy to hit the limit. So we had two options: either get a Docker subscription ($60 per year) or use the cloud provider's image registry.

Although the price is comparatively low, we chose the second option for the following reasons:

- Avoid multiple authentication mechanisms

- Take advantage of multi-zone and multi-region availability (after the Docker Hub incident within the AWS network)

- Easier to share images with GCP/AWS access control than with docker.io tokens

- Container image scanning out of the box

With Container Registry alone, Google Cloud had no option for selective access control on Docker images, but the more recent introduction of Artifact Registry makes this possible. Artifact Registry stores not only container images but also language packages such as Maven and npm, in regional and multi-regional repositories.

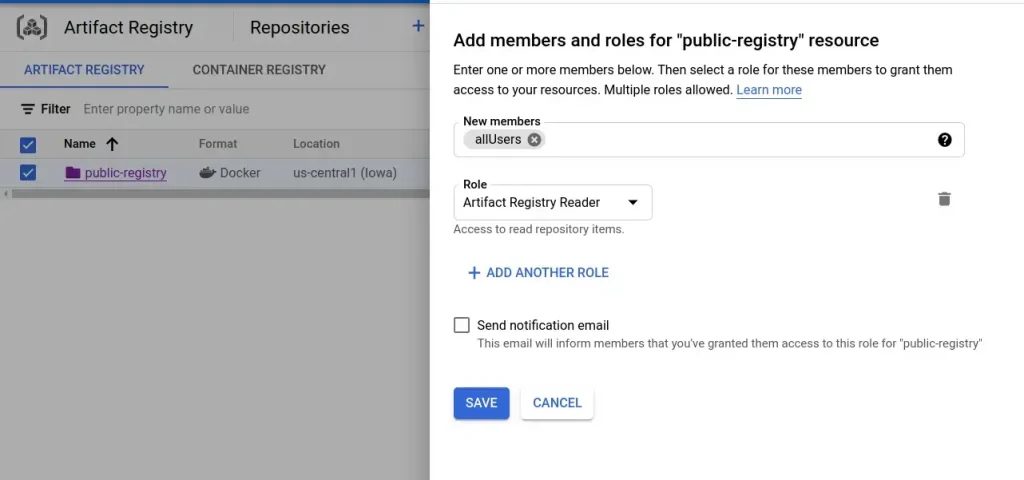

We can grant fine-grained access to the registry for authenticated or unauthenticated users, depending on the need. But make sure not to make publicly available any artifact that could contain private content.

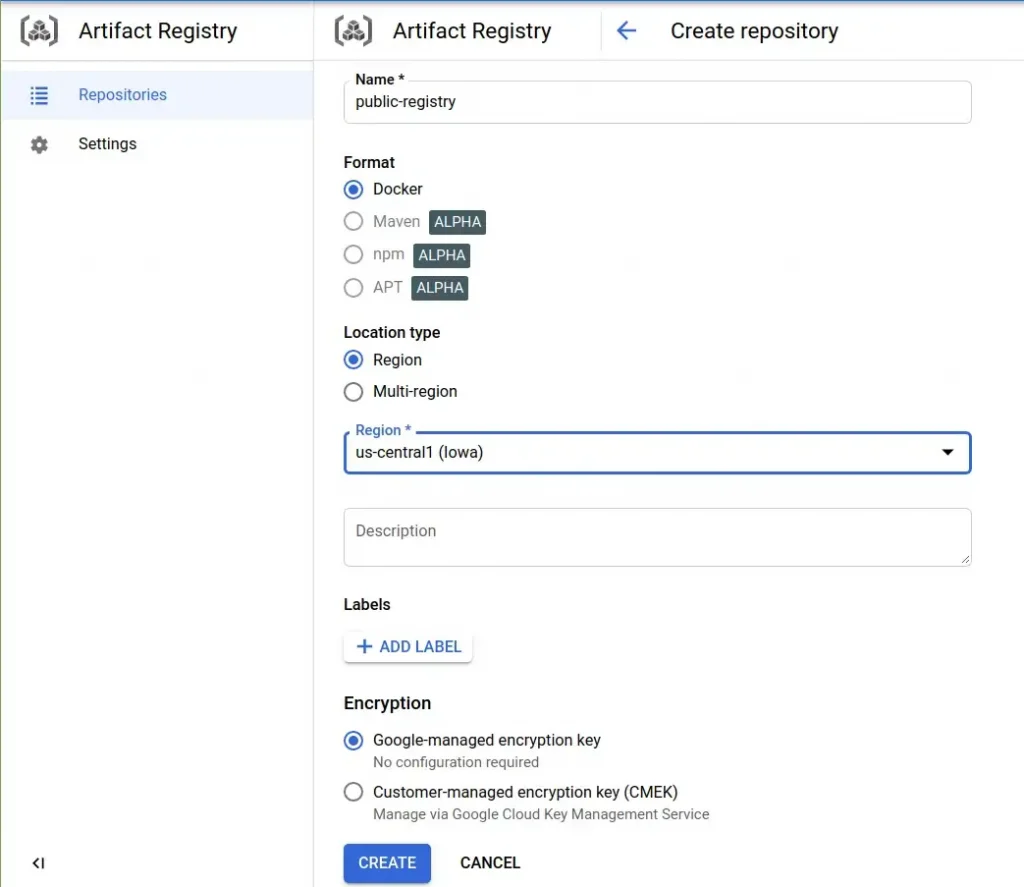

In this post, we focus on Docker image access in the registry, both private and public. For that, we need to create an Artifact Registry repository by entering the repository name and the region (a multi-region location is preferable if high availability is needed).
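The post creates the repository in the console. The same step can be scripted with the Cloud SDK; here is a minimal sketch, assuming `gcloud` is installed and a default project is configured. `create_docker_repo` and `run_or_echo` are hypothetical helpers, and setting `DRY_RUN=1` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: create a Docker-format Artifact Registry repository from the CLI.
# DRY_RUN=1 prints the gcloud command instead of executing it.

run_or_echo() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: create_docker_repo REPOSITORY LOCATION
#   LOCATION is a region (e.g. us-central1) or a multi-region (e.g. us).
create_docker_repo() {
  local repo="$1" location="$2"
  run_or_echo gcloud artifacts repositories create "$repo" \
    --repository-format=docker \
    --location="$location"
}
```

For example, `create_docker_repo public-registry us-central1` creates a regional repository like the one used below; passing a multi-region such as `us` instead gives a multi-regional one.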

After the repository is created, we need to push the image, but that requires authentication. Cloud Shell is an easy and free way to access Google Cloud products from a terminal session. Alternatively, you can use any VM instance or your local system; in both of these cases, an authentication step is necessary. Once the system is authenticated, run the following command to configure Docker to use Artifact Registry credentials when interacting with Artifact Registry:

```shell
$ gcloud auth configure-docker us-central1-docker.pkg.dev
```

The `us-central1` prefix depends on whether the repository is regional or multi-regional.
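Since the host is simply the repository location followed by `-docker.pkg.dev`, the configuration step can be parameterized per location. A minimal sketch under that assumption; `ar_host`, `configure_ar_docker` and `run_or_echo` are hypothetical helpers, and `--quiet` is gcloud's global flag for skipping the interactive confirmation, which matters in CI.

```shell
#!/usr/bin/env bash
# Sketch: configure Docker credentials for an Artifact Registry location.
# DRY_RUN=1 prints the command instead of executing it.

run_or_echo() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Docker host for a location: a region (us-central1) or a multi-region (us).
ar_host() {
  echo "${1}-docker.pkg.dev"
}

# Usage: configure_ar_docker LOCATION
configure_ar_docker() {
  run_or_echo gcloud auth configure-docker "$(ar_host "$1")" --quiet
}
```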

Then we can tag and push the Docker image with:

```shell
$ docker tag python:3.8-alpine us-central1-docker.pkg.dev/gkerocks/public-registry/python

# Format
# docker push LOCATION-docker.pkg.dev/PROJECT-ID/REPOSITORY/IMAGE:TAG
$ docker push us-central1-docker.pkg.dev/gkerocks/public-registry/python
```

By default the image stays private. To make it publicly accessible, open the "Show Info Panel" and add `allUsers` with reader access.
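The same grant can be made from the CLI by binding `allUsers` to the Artifact Registry Reader role (`roles/artifactregistry.reader`) on the repository. A minimal sketch, assuming the `public-registry` repository in `us-central1` used in this post and a configured default project; `make_repo_public` and `run_or_echo` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: grant anonymous read access to a whole repository, the CLI
# counterpart of adding allUsers as a reader in the console's info panel.
# DRY_RUN=1 prints the command instead of executing it.

run_or_echo() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: make_repo_public REPOSITORY LOCATION
make_repo_public() {
  local repo="$1" location="$2"
  run_or_echo gcloud artifacts repositories add-iam-policy-binding "$repo" \
    --location="$location" \
    --member=allUsers \
    --role=roles/artifactregistry.reader
}
```

Running `make_repo_public public-registry us-central1` should have the same effect as the console step; the same flags with `remove-iam-policy-binding` revoke it.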

Then anyone can pull the image without Google authentication:

```shell
# Format
# docker pull LOCATION-docker.pkg.dev/PROJECT-ID/REPOSITORY/IMAGE:TAG
$ docker pull us-central1-docker.pkg.dev/gkerocks/public-registry/python
```

Access control applies only at the repository level (`public-registry` in the example above), not at the image level (`python` in the example above).
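The `Format` comments map directly onto a small helper that builds the full image reference. A minimal sketch; `image_ref`, `pull_image` and `run_or_echo` are hypothetical helpers, and the tag defaults to `latest`, which is what Docker assumes when the tag is omitted. Run from a machine without Artifact Registry credentials, the pull exercises the anonymous access granted above.

```shell
#!/usr/bin/env bash
# Sketch: build LOCATION-docker.pkg.dev/PROJECT-ID/REPOSITORY/IMAGE:TAG
# and pull it. DRY_RUN=1 prints the command instead of executing it.

run_or_echo() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: image_ref LOCATION PROJECT-ID REPOSITORY IMAGE [TAG]
image_ref() {
  local location="$1" project="$2" repo="$3" image="$4" tag="${5:-latest}"
  echo "${location}-docker.pkg.dev/${project}/${repo}/${image}:${tag}"
}

# Usage: pull_image LOCATION PROJECT-ID REPOSITORY IMAGE [TAG]
pull_image() {
  run_or_echo docker pull "$(image_ref "$@")"
}
```

For example, `pull_image us-central1 gkerocks public-registry python` pulls the image pushed earlier in this post.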

About Pricing

Although Docker images can be opened up for public use, there are charges based on usage and storage. If an image is popular, you may be charged a small amount for egress traffic, which grows with the number of Docker pulls, so take this into consideration.

Artifact Registry charges for Docker repositories cover storage and network egress. For storage, the first 0.5 GB per month is free, and after that $0.10 is charged per GB per month.
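To make the storage arithmetic concrete: with 0.5 GB free and $0.10 per additional GB, a repository holding 10.5 GB pays for 10 GB, i.e. $1.00 per month. A minimal sketch of that calculation, using the rates quoted in this post (they may have changed since) and ignoring egress; `monthly_storage_cost` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: monthly Artifact Registry storage cost in USD, using the rates
# quoted in this post (0.5 GB free, then $0.10 per GB). Egress is ignored.

# Usage: monthly_storage_cost GB
monthly_storage_cost() {
  LC_ALL=C awk -v gb="$1" -v free=0.5 -v rate=0.10 'BEGIN {
    billable = gb - free
    if (billable < 0) billable = 0   # usage within the free tier costs nothing
    printf "%.2f\n", billable * rate
  }'
}
```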

Egress charges differ depending on the destination region and on whether traffic stays within or leaves Google Cloud. For details, please visit the Artifact Registry pricing page.

The original article was published on Medium.
