By Sergey Shcherbakov. Dec 16, 2022

Here is my attempt to summarise and disambiguate the terms often used in technical discussions about arranging network ingress traffic into [single] Kubernetes clusters running in Google Cloud (GKE) or on-premises (Anthos on Bare Metal, Anthos on VMware).

The following small table of contents should lead you directly to the section of interest.

- Options Breakdown
- Service: GKE | Anthos | TLS | Example
- Ingress: GKE | Anthos | TLS | Example
- Gateway: GKE | TLS | Example
- Istio Gateway: GKE | Anthos | TLS | Example
- NEG Controllers: GKE | TLS | Example

How many times have you needed to connect a Kubernetes cluster to the outside world, making it accessible from the public internet or from within your organisation, while keeping it secure and maintaining control over the setup?

Very often when such discussions commence there is no clear picture of the options available. Ambiguous component names and overloaded terms contribute to the confusion to a large extent.

“We will set up an Ingress” - wait, did you mean a particular implementation? In which system is that going to happen? Is that all that is going to be needed?

“TLS will be terminated at the Gateway” - which kind of Gateway are you referring to? Are you sure that this will match your mutual TLS requirements?

“Who is going to own the network ingress in the team?” - what am I referring to here?

“Do we need to take care of the load balancer in front?” - there are even more options for that!

I have often been in such discussions.

One way to navigate the rather ambiguous terminology and landscape is to list all possible variants and structure them. Once all the options are listed and structured, it becomes possible to get on the same page and reduce confusion.

I will try to provide a succinct reference to the terms with their multiple meanings, short infrastructure design pattern diagrams and example configuration snippets.

Options Breakdown

It is convenient to start the network traffic ingress options list by classifying them by the product flavour.

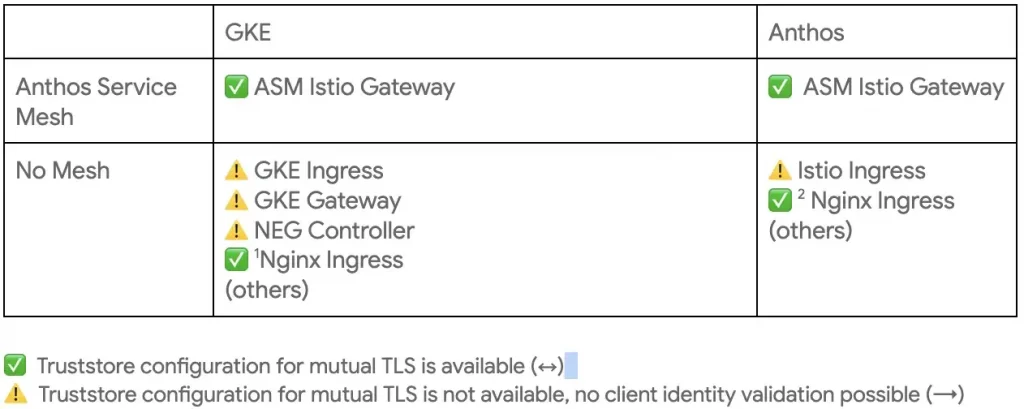

The available options, and what the short terms mean, depend on whether we are in the context of Google Cloud (the GKE service) or of Anthos clusters outside of Google Cloud, and on whether Anthos Service Mesh (ASM) is available and/or required.

Please note that not all of the options allow setting up full-fledged mutual TLS authentication. Such options are marked with a yellow question mark below.

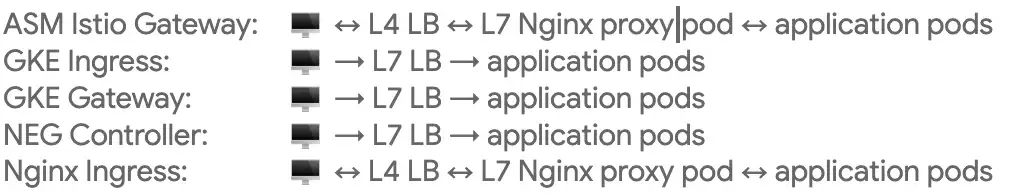

This is the network flow summary for the options in the table (double arrows mean possible mutual TLS setup, single arrows are one-way TLS connections):

We will now look into each of them in greater detail.

Service

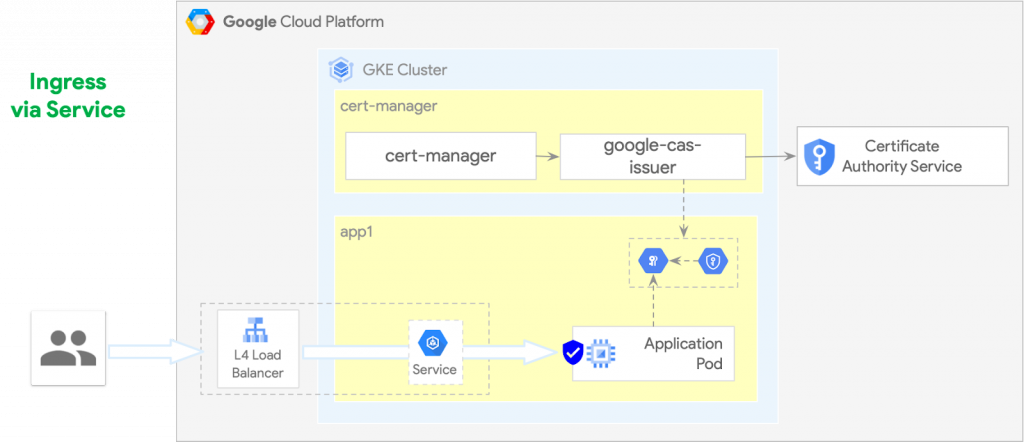

The Kubernetes Service resource can be defined with the type LoadBalancer. It is then the responsibility of a system external to the Kubernetes cluster to read the Service definition and configure the corresponding infrastructure resources outside of the cluster.

Typically in this scenario the application service endpoints are exposed to the incoming (L4) traffic directly. Hence TLS is terminated by the application itself.

This option can be useful if the application already has its own component that can accept TLS connections and route them to the other application components.

Service — GKE

In GKE, a Service of type LoadBalancer automatically manages Google Cloud L4 TCP load balancer resources: Google Cloud creates them on your behalf.
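If the Service should only be reachable from within your organisation, GKE can provision an internal L4 load balancer instead of an external one. A minimal sketch, assuming the `networking.gke.io/load-balancer-type` annotation supported by current GKE versions; the Service name, selector and ports are placeholders in the style of the example further below:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-internal-service   # placeholder name
  annotations:
    # Ask GKE for an internal (VPC-only) L4 load balancer
    networking.gke.io/load-balancer-type: "Internal"
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: MyApp
  ports:
  - protocol: TCP
    port: 443
    targetPort: 9376
```

Without the annotation, the same manifest results in an external load balancer.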

Service — Anthos

In Anthos on Bare Metal, a LoadBalancer Service can automatically manage the bundled MetalLB load balancer, which is part of the Anthos installation and consists of software components running on the cluster master nodes.

Service — TLS

The TLS certificates need to be configured in the application services themselves, e.g. using certificates issued by cert-manager and the Google CAS Issuer. These end up stored in Kubernetes Secrets, which are typically mounted into the application pods.
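As a rough sketch of that setup, the following pair of resources asks cert-manager to issue a certificate through the Google CAS Issuer and store it in a Secret. All names (`my-cas-issuer`, `my-project`, `my-ca-pool`, `my-app-tls`, the DNS name) are hypothetical, and the `GoogleCASIssuer` field names follow the google-cas-issuer project as I know it, so please check them against the version you install:

```yaml
apiVersion: cas-issuer.jetstack.io/v1beta1
kind: GoogleCASIssuer
metadata:
  name: my-cas-issuer          # hypothetical issuer name
  namespace: default
spec:
  project: my-project          # hypothetical Google Cloud project
  location: europe-west4       # region of the CA pool
  caPoolId: my-ca-pool         # hypothetical CAS CA pool
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: my-app-tls
  namespace: default
spec:
  secretName: my-app-tls       # Secret that will hold tls.crt and tls.key
  dnsNames:
  - my-app.example.com
  issuerRef:
    group: cas-issuer.jetstack.io
    kind: GoogleCASIssuer
    name: my-cas-issuer
```

The application pods can then mount the `my-app-tls` Secret as a volume and load the certificate and key from it.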

Service — Example

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app.kubernetes.io/name: MyApp
      ports:
      - protocol: TCP
        port: 443
        targetPort: 9376
      clusterIP: 10.0.171.239
      type: LoadBalancer
    status:
      loadBalancer:
        ingress:
        - ip: 192.0.2.127

Ingress

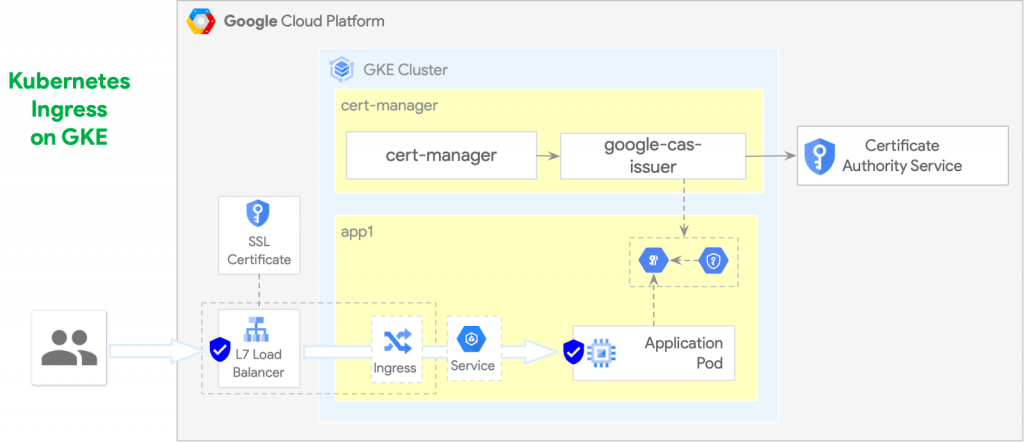

Kubernetes Ingress is an API object that manages external access to the services in a cluster, typically HTTP. Ingress may provide load balancing, SSL termination and name-based virtual hosting.

An Ingress Controller is responsible for fulfilling the Ingress.

The limitations of the Kubernetes Ingress resources include:

- It is not possible to configure a mutual TLS connection between the ingress proxy and the application backend services (the corresponding settings are missing in the Kubernetes resource definition)

- A single Ingress resource that incorporates both the load balancer and the routing configuration doesn’t allow separation of concerns or flexible access configuration for multi-tenant environments (all concerns are the responsibility of a single team)

- In the case of the Istio Ingress controller (which is present by default in ABM installations), it is also not possible to use TLS to encrypt network traffic between the reverse proxy and the application service

Because of these limitations, the use of Kubernetes Ingress is gradually being discouraged in favor of the Kubernetes Gateway resources described in the next section.

Ingress — GKE

In GKE, the GKE Ingress controller automatically manages Google Cloud L7 External and L7 Internal Load Balancer resources.

The incoming L7 TLS connections terminate at the GCE L7 External Load Balancer or L7 Regional Internal Load Balancer. The GCE load balancers forward incoming traffic to the Kubernetes cluster nodes.

Container-native load balancing can be configured on the target Kubernetes Service objects to make the GCE load balancer forward traffic directly to the pods instead of the node ports.
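Container-native load balancing is switched on with an annotation on the backend Service. A minimal sketch, where the Service name, selector and ports are placeholders:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myservicea
  annotations:
    # Create NEGs for this Service so the Ingress load balancer targets pods directly
    cloud.google.com/neg: '{"ingress": true}'
spec:
  type: ClusterIP
  selector:
    app.kubernetes.io/name: MyApp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
```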

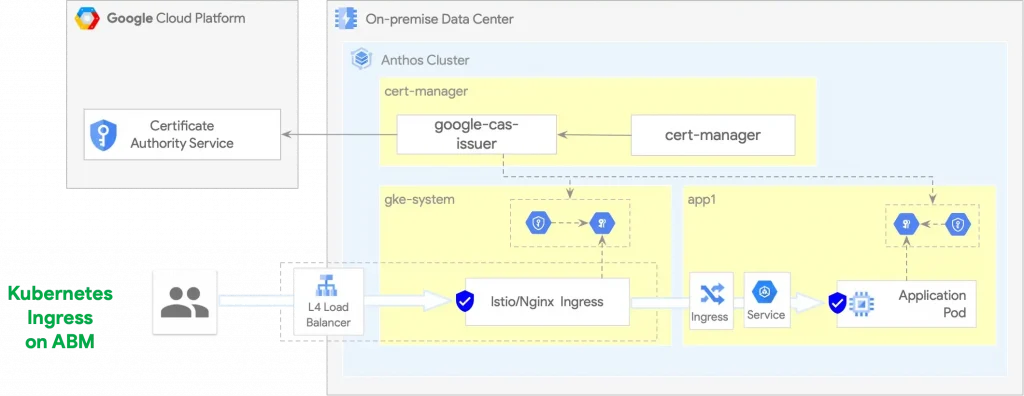

Ingress — Anthos

In ABM, the default out-of-the-box installation of the Istio Ingress controller provides an option of automatically managing the Istio Ingress proxy processes as Kubernetes workloads. Unfortunately, this out-of-the-box option has all the Kubernetes Ingress limitations listed above.

To configure the connection between the Ingress proxy and the application backend service with mutual TLS, an Ingress controller implementation that supports it can be used instead. One such implementation is the Nginx Ingress controller, which can be configured with TLS encryption of the backend connection and with TLS client certificates via custom Kubernetes resource annotations.
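With the community ingress-nginx controller, such a configuration could look roughly like the sketch below. The Secret `default/ingress-client-cert` is hypothetical; it is expected to contain `tls.crt`, `tls.key` and `ca.crt`, and the backend is assumed to serve HTTPS on port 443:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-myservicea-mtls
  annotations:
    # Talk TLS to the backend instead of plain HTTP
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    # Client certificate and trusted CA used towards the backend (namespace/name)
    nginx.ingress.kubernetes.io/proxy-ssl-secret: "default/ingress-client-cert"
    # Verify the backend server certificate against ca.crt
    nginx.ingress.kubernetes.io/proxy-ssl-verify: "on"
spec:
  ingressClassName: nginx
  rules:
  - host: myservicea.foo.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myservicea
            port:
              number: 443
```

The backend application still has to be configured to request and validate the client certificate; the annotations only cover the proxy side.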

Ingress — TLS

TLS settings, such as the ingress proxy server and client certificates, are configured in the Kubernetes Ingress resource definition, either directly or via additional custom annotations.

The Kubernetes Ingress can use TLS certificates and their private keys from referenced Kubernetes Secret resources of type tls. In that case the Secrets can be managed by cert-manager and the Google CAS Issuer.

Alternatively, it is possible to specify the GCE SSL Certificate resource name in the ingress.gcp.kubernetes.io/pre-shared-cert Kubernetes Ingress annotation. The load balancer will then be configured with the referenced preconfigured SSL certificate.
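A minimal sketch of the pre-shared certificate variant for the GKE Ingress controller follows. The certificate name `my-preshared-cert` is hypothetical and must refer to an SSL certificate resource that already exists in the project:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-myservicea-preshared
  annotations:
    # Use the GKE Ingress controller with an external L7 load balancer
    kubernetes.io/ingress.class: "gce"
    # Name of an existing GCE SSL certificate resource
    ingress.gcp.kubernetes.io/pre-shared-cert: "my-preshared-cert"
spec:
  defaultBackend:
    service:
      name: myservicea
      port:
        number: 80
```

No `tls` section or Kubernetes Secret is needed in this case, because the certificate lives entirely on the Google Cloud side.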

Ingress — Example

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ingress-myservicea
      annotations:
        cert-manager.io/issuer: "letsencrypt-staging"
    spec:
      ingressClassName: nginx
      tls:
      - hosts:
        # must match the host used in the rules below
        - myservicea.foo.org
        secretName: ingress-demo-tls
      rules:
      - host: myservicea.foo.org
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: myservicea
                port:
                  number: 80

Gateway

Kubernetes Gateway is a collection of resources that model service networking in Kubernetes (GatewayClass, Gateway, HTTPRoute, TCPRoute, Service, etc.).

Kubernetes Gateway is a recent alternative to Kubernetes Ingress that addresses its drawbacks and limitations. Going forward, it is the encouraged replacement for Kubernetes Ingress.

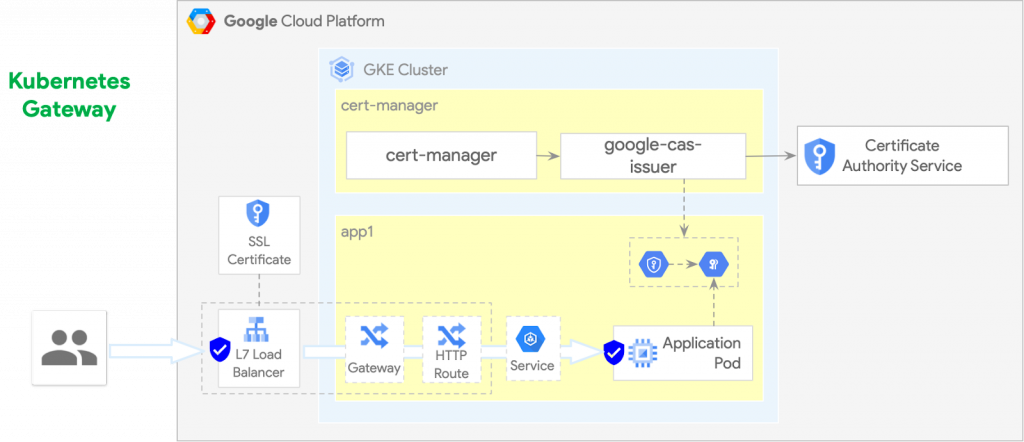

Gateway — GKE

In GKE, the Kubernetes Gateway is implemented by the GKE Gateway controller (this feature is in public preview at the time of writing). Via its Kubernetes resource configuration, it is possible to automatically manage load balancers of the following GatewayClasses:

- gke-l7-rilb: Regional internal HTTP(S) load balancers built on Internal HTTP(S) Load Balancing

- gke-l7-gxlb: Global external HTTP(S) load balancers built on Global external HTTP(S) load balancer (classic)

- gke-l7-rilb-mc: Multi-cluster regional load balancers built on Internal HTTP(S) Load Balancing

- gke-l7-gxlb-mc: Multi-cluster global load balancers built on Global external HTTP(S) load balancer (classic)

- gke-td: Multi-cluster Traffic Director service mesh

Gateway — TLS

The TLS certificates and keys for the load balancers managed by the Kubernetes Gateway can be provided using the following methods:

- Kubernetes Secrets — Can be supplied and managed by cert-manager and Google CAS Issuer

- SSL certificates — Can be pre-provisioned (e.g. using Terraform) separately from the GKE cluster

- Certificate Manager
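For the first method, the Gateway listener references the Kubernetes Secret through the standard `certificateRefs` field of the Gateway API. A minimal sketch, assuming a TLS Secret named `store-example-com` exists in the same namespace as the Gateway (the Gateway name is a placeholder too):

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: my-gateway-secret-tls
spec:
  gatewayClassName: gke-l7-rilb
  listeners:
  - name: https
    protocol: HTTPS
    port: 443
    tls:
      mode: Terminate
      certificateRefs:
      # Secret of type kubernetes.io/tls holding the certificate and key
      - kind: Secret
        name: store-example-com
```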

Gateway — Example

    kind: Gateway
    apiVersion: gateway.networking.k8s.io/v1beta1
    metadata:
      name: my-gateway
    spec:
      gatewayClassName: gke-l7-rilb
      listeners:
      - name: https
        protocol: HTTPS
        port: 443
        tls:
          mode: Terminate
          options:
            networking.gke.io/pre-shared-certs: store-example-com
    ---
    apiVersion: gateway.networking.k8s.io/v1beta1
    kind: HTTPRoute
    metadata:
      name: httproute-example
    spec:
      parentRefs:
      - name: my-gateway
      hostnames:
      - my.example.com
      rules:
      - matches:
        - path:
            type: PathPrefix
            value: /bar
        backendRefs:
        - name: my-service1
          port: 8080

Please note the separate Kubernetes resources “Gateway” and “HTTPRoute” that define different aspects of the Kubernetes Gateway. This separation allows Separation of Concerns and Segregation of Duties. Different teams with different access permissions can now be tasked with managing external and internal aspects of ingress network traffic.

Istio Gateway

When Anthos Service Mesh (ASM) is installed and available in the Kubernetes cluster, it provides its own way of managing and organising the network traffic ingress into the service mesh.

ASM brings in the Istio Gateway Kubernetes resource and component. It is different from the Kubernetes Gateway resource object described in the previous section.

Anthos Service Mesh gives you the option to deploy and manage Istio gateways as part of your service mesh. A gateway describes a load balancer operating at the edge of the mesh receiving incoming or outgoing HTTP/TCP connections. Gateways are Envoy proxies that provide you with fine-grained control over traffic entering and leaving the mesh. Gateways are primarily used to manage ingress traffic, but you can also configure gateways to manage other types of traffic.

There are multiple ways of exposing Istio Gateways to the external clients. All options for ASM meshes running in the GKE clusters are well described in this public article.

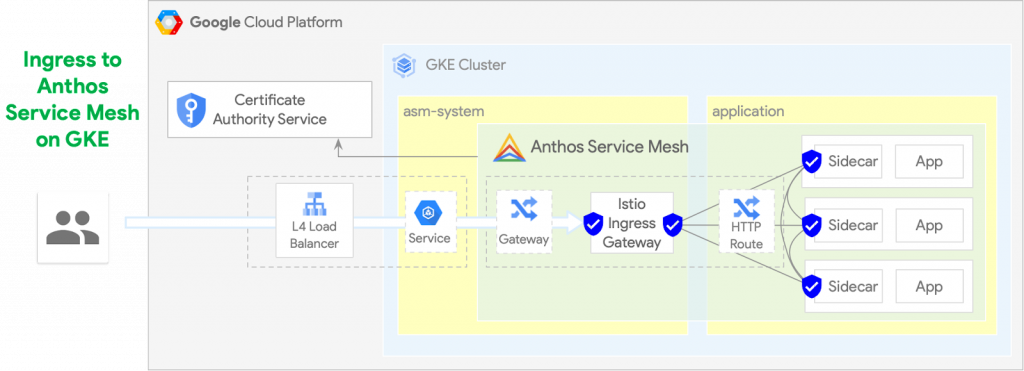

Istio Gateway — GKE

The basic way to expose the ASM mesh running in a GKE cluster to external clients is to use Kubernetes Service objects, as depicted in the following diagram. Creating a Kubernetes Service of type LoadBalancer on GKE creates the corresponding Google Cloud L4 load balancers automatically.

Please note that the Anthos Service Mesh can integrate with the Certificate Authority Service as a source for the mesh workload certificates.

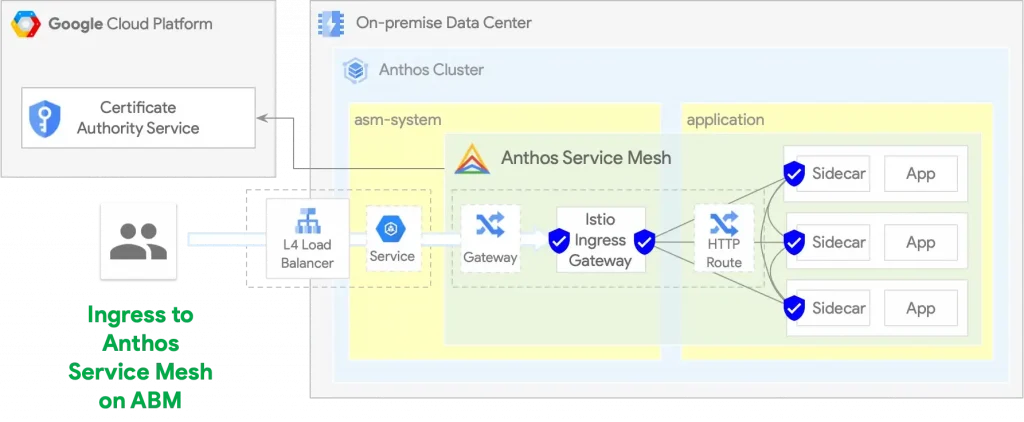

Istio Gateway — Anthos

A Kubernetes Service resource object is needed to expose the ASM mesh running in an Anthos on Bare Metal (ABM) cluster too. The type of the load balancer automatically controlled by the ABM components depends on the cluster installation configuration:

- Bundled L2 load balancing

- Bundled L3 load balancing with BGP

- Manual load balancing

The following diagram illustrates the Kubernetes component required to expose ASM mesh running in an ABM cluster.

Istio Gateway — TLS

The ASM installations on both GKE and ABM can be configured with TLS certificates provisioned by the Google Certificate Authority Service (CAS).

ASM can be configured with the CAS resource names during the installation process. At runtime it then manages the lifetime of the workload TLS certificates automatically, fetching them from the supplied CAS pool or instance.

Example

    apiVersion: networking.istio.io/v1beta1
    kind: Gateway
    metadata:
      name: frontend-gateway
      namespace: frontend
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 443
          name: https
          protocol: HTTPS
        hosts:
        - ext-host.example.com
        tls:
          mode: SIMPLE
          credentialName: ext-host-cert # Kubernetes Secret name
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: frontend-ingress
      namespace: frontend
    spec:
      hosts:
      - "*"
      gateways:
      - frontend-gateway
      http:
      - route:
        - destination:
            host: frontend
            port:
              number: 80

Please note the similar level of separation of concerns as we have seen in the Kubernetes Gateway section.
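If external clients must also present a certificate, the Istio Gateway can terminate mutual TLS at the edge of the mesh. A minimal sketch, assuming a hypothetical Secret `ext-host-mtls-cert` that additionally contains the CA certificate (`ca.crt`) used to validate client certificates:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: frontend-gateway-mtls
  namespace: frontend
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    hosts:
    - ext-host.example.com
    tls:
      # Require and verify a client certificate in addition to serving one
      mode: MUTUAL
      credentialName: ext-host-mtls-cert # hypothetical Secret with tls.crt, tls.key, ca.crt
```

The VirtualService would then refer to this Gateway by name in its `gateways` list.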

GKE NEG and AutoNEG Controllers

NEG Controllers — GKE

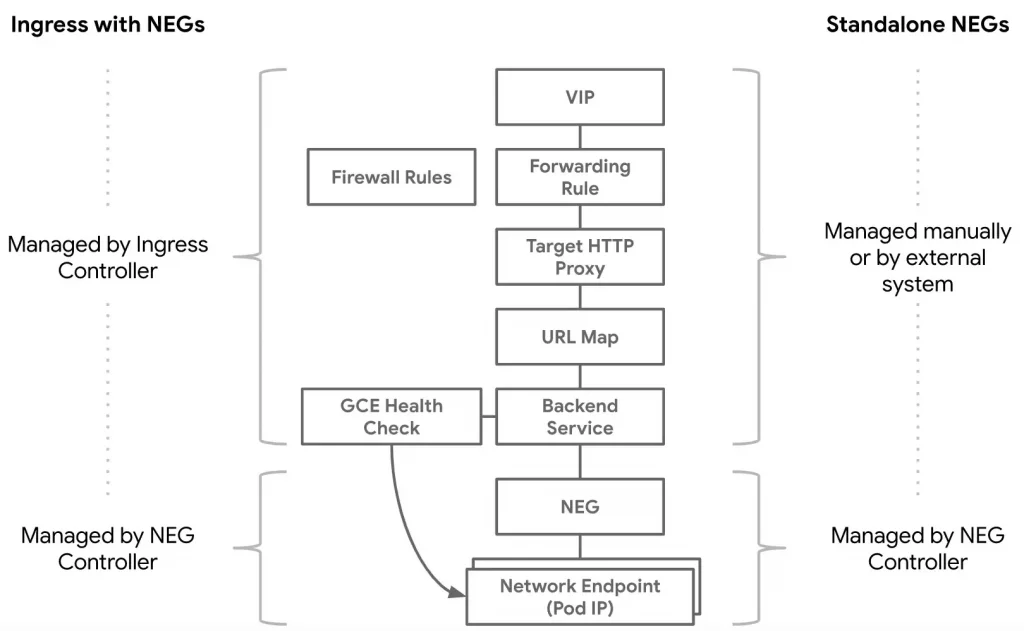

GKE provides a NEG controller to manage the membership of GCE_VM_IP_PORT Network Endpoint Groups (NEGs). You can add the NEGs it creates as backends to the backend services of load balancers that you configure outside of the GKE API.

The NEG Controller with Standalone NEGs covers the case when load balancer components automatically created by the Kubernetes Ingress do not serve your use case.

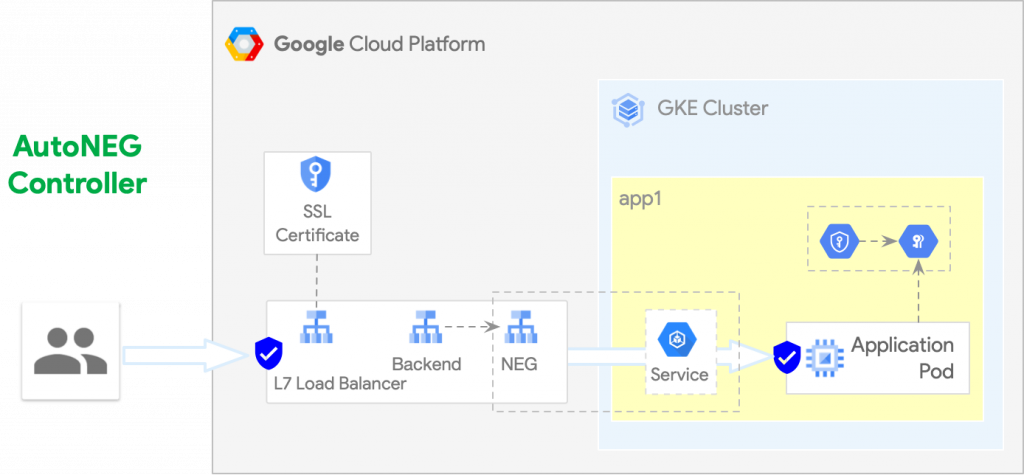

The additional Autoneg controller provides simple custom integration between GKE and Google Cloud Load Balancing (both external and internal). It is a GKE-specific Kubernetes controller which works in conjunction with the GKE Network Endpoint Group (NEG) controller to manage integration between your Kubernetes service endpoints and GCLB backend services.

GKE users may wish to register NEG backends from multiple clusters into the same backend service, orchestrate advanced deployment strategies in a custom or centralised fashion, or offer the same service via a protected public endpoint and a more lax internal endpoint. The Autoneg controller can enable those use cases.

The benefit of using NEG controllers with Kubernetes Service resources is the ability to further segregate duties between the teams responsible for network traffic ingress into the Cloud, including the load balancers, and the teams that have access to, and are responsible for, the Kubernetes internal resources and workloads.

It becomes possible to set up network ingress by provisioning Cloud resources using the Infrastructure as Code (IaC) system of choice (e.g. Terraform) and Kubernetes resources via declarative Kubernetes API.

NEG Controllers — TLS

In this scenario the TLS connections are handled and terminated by the Google Cloud Load Balancers. With NEG Controller, it is possible to reuse the load balancer components and connect them to the Kubernetes Services as defined in the Kubernetes cluster resources.

In this case the TLS certificates and private keys can be managed outside of the Kubernetes cluster, following the existing Terraform IaC approach or automation based on the Google Cloud API. The Kubernetes resources would then not modify the load balancer components in a way that could damage the Terraform state.

NEG Controllers — Example

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
      annotations:
        cloud.google.com/neg: '{"exposed_ports": {"80":{},"443":{}}}'
        controller.autoneg.dev/neg: '{"backend_services":{"80":[{"name":"http-be","max_rate_per_endpoint":100}],"443":[{"name":"https-be","max_connections_per_endpoint":1000}]}}'
        # For L7 ILB (regional) backends
        # controller.autoneg.dev/neg: '{"backend_services":{"80":[{"name":"http-be","region":"europe-west4","max_rate_per_endpoint":100}],"443":[{"name":"https-be","region":"europe-west4","max_connections_per_endpoint":1000}]}}'
    spec:
      selector:
        app.kubernetes.io/name: MyApp
      ports:
      - protocol: TCP
        port: 443
        targetPort: 9376
      clusterIP: 10.0.171.239
      type: LoadBalancer
    status:
      loadBalancer:
        ingress:
        - ip: 192.0.2.127

Conclusion

Did you notice a mistake? Do you have an interesting or new solution for arranging network ingress into Kubernetes clusters? I will be glad to learn about it and amend the list. Please comment or PM me!

The original article was published on Medium.
