By Abhinav Bhatia. Dec 6, 2021

“Every kid coming out of Harvard, every kid coming out of school now thinks he can be the next Mark Zuckerberg, and with these new technologies like cloud computing, he actually has a shot.”

–Marc Andreessen, Board Member of Facebook

So now that we understand what cloud is and its service models, where we also discussed the layers of a digital application (remember?), the next question that probably comes to mind is: how do I start? The first question that I hope to address in this blog is: where do I compute my data? I am going to use Google Cloud Platform to show the various options so that you can get hands-on from the get-go.

A few Disclaimers

All opinions discussed in this blog series are my own and should in no way be attributed to the companies that I am or have been a part of. These are my learnings, which I have tried to put in as simple a manner as possible. I understand that oversimplification can sometimes lead to an alternate version which might not be true. I will try my best not to oversimplify, but my only request is to take all of this with a pinch of salt. Validate from as many sources as possible.

Please bookmark this link if you want to know more about the free tier options for GCP.

Essentially, new customers get $300 in free credits to fully explore and assess Google Cloud Platform. You won’t be charged until you choose to upgrade. At the time of writing this article, the free trial lasts 90 days.

Get free hands-on experience with popular products, including Compute Engine and Cloud Storage, up to monthly limits. These free services don’t expire.

You need a credit card in order to use a free tier account. This video from Google Cloud Tech summarises how to go about using it and will probably answer most of your questions.

The second way to try your hand is Qwiklabs, which offers guided labs and quests. Each lab gives you timed access to an environment where you can learn GCP by doing.

Jumping back to the topic of this discussion, where do I compute? The main question we have in mind is: where do I run my application? GCP gives you several choices, and how to choose between them is what we’ll explore in this blog.

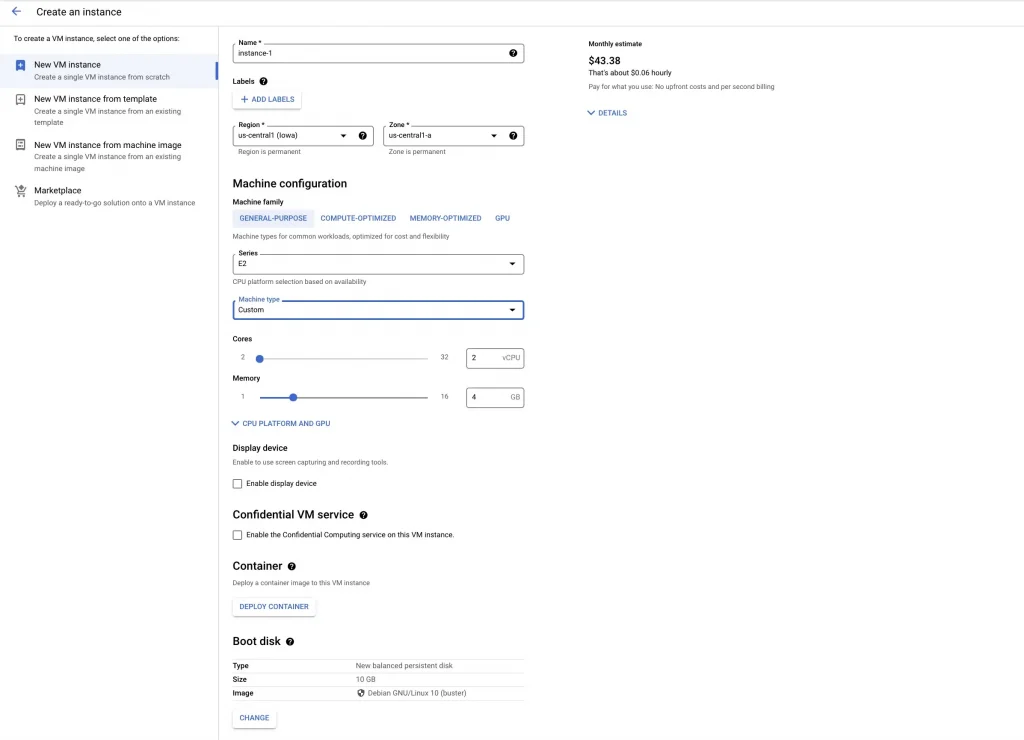

1. Google Compute Engine (GCE) [Virtual Machines]: Google Compute Engine (GCE) gives you a virtual machine on which you run your own operating system, middleware, runtime, and application with its data. You choose this model when you want a lot of control: which operating system to run, which patches and security updates to apply, how hardened the system must be, how many resources your application gets, and how those resources are configured (using config management tools or by hand). In exchange, you agree to do a lot of the work yourself, because this is the least managed option: allocating resources, choosing instance types and the operating system, creating your network, setting up firewall and WAF rules, scaling resources (on your own or with cloud-provided constructs like instance groups), monitoring, allocating storage, and taking backups. It’s not that you deliberately want to increase your workload by going for the least managed option. You are going for more control because you foresee that the benefits of control outweigh the downsides of doing most of the work yourself. You still get tools from Google Cloud to help you monitor (Cloud Operations) or back up (PD snapshots or Actifio), but as we discussed in the earlier blog, it’s your responsibility to configure, manage, and pay for these tools. The ball is in your court.

Note: You can also create virtual machines using Google VMware Engine (GVE), where a VMware-based Software Defined Data Center is provisioned at the click of a button (with compute, storage, and network virtualization). Typically, if you are already a VMware customer on-premises and want the same experience on Google Cloud while integrating with other Google Cloud services, you would choose GVE.

- Typically you use a virtual machine when you are running a COTS (commercial off-the-shelf) or ISV (independent software vendor) application that the vendor has only certified to run on a virtual machine with a specific operating system version (major and minor release). Basically, if you are happy running the app as it is, VMs come to your rescue.

- You are migrating your applications from another cloud or from on-premises and you don’t know who wrote your code (should you have to change your app). If you don’t want to spend time rearchitecting into containers (more on that in a bit) and you want the safest option, a VM is your best bet.

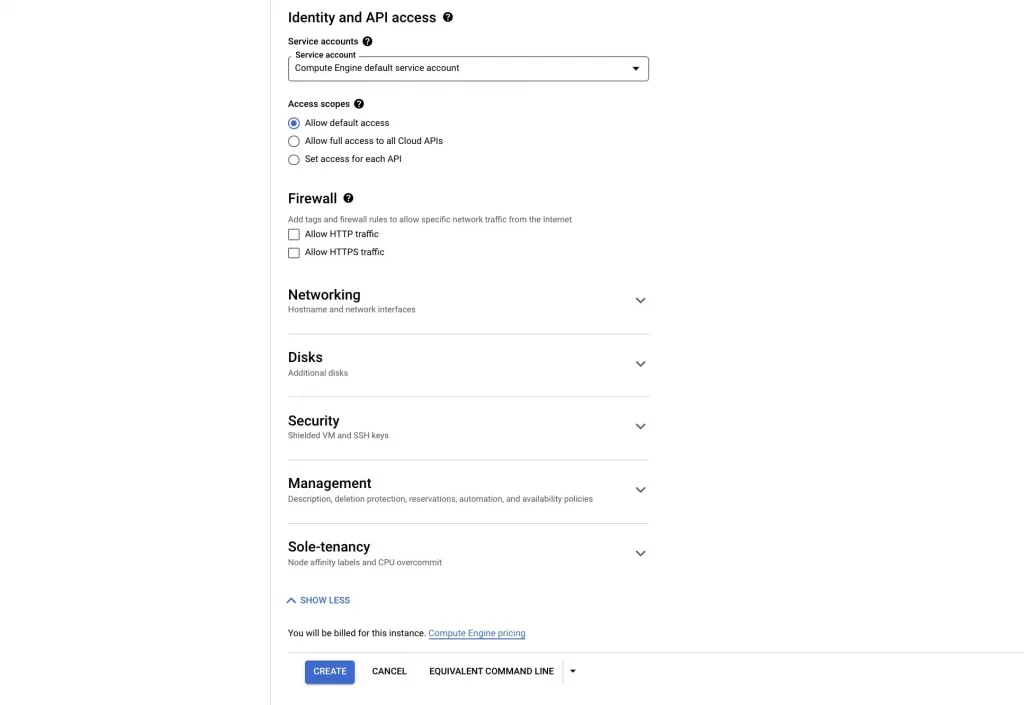

Before understanding GKE, let’s pause and understand containers. With VMs, we saw that a hypervisor creates a virtual version of CPUs and RAM (essentially a server) on which we run our own operating system and the rest of the stack. VMs help in creating a slice of the data centre in virtual form so that it is easy to manage and deploy. But on top of every VM we still have to install our operating system, middleware, runtime, application, and its data. If there are thousands of VMs, we have to do this for all of them, and we also have to manage updates, patches, and security fixes on our own. Of course, as I said earlier, there are tools (config management) that ease the job, but doing that for a large set of virtual machines can become too complex too soon.

Containers come to the rescue: we package the application along with its libraries, middleware, start command (e.g. node server.js), and the working directory where the binaries are present, all inside a container image. The idea is to contain everything inside this image so that it can be ported anywhere and run anywhere.
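To make the idea of "everything inside one image" a bit more concrete, here is a minimal sketch in Python that collects those pieces and prints them as Dockerfile text. The instructions (FROM, WORKDIR, COPY, RUN, CMD) are standard Dockerfile instructions, but the `ImageSpec` class, the base image tag, the path, and the install command are my own assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ImageSpec:
    """The pieces that get packaged into a container image (illustrative only)."""
    base_image: str        # runtime/middleware layer to start from
    workdir: str           # working directory inside the image
    install_cmd: str       # command that installs the libraries
    start_cmd: List[str]   # the executing command


def render_dockerfile(spec: ImageSpec) -> str:
    """Render the spec as Dockerfile text."""
    cmd = ", ".join(f'"{part}"' for part in spec.start_cmd)
    lines = [
        f"FROM {spec.base_image}",
        f"WORKDIR {spec.workdir}",
        "COPY . .",                      # copy the application code into the image
        f"RUN {spec.install_cmd}",
        f"CMD [{cmd}]",
    ]
    return "\n".join(lines)


spec = ImageSpec(
    base_image="node:16-slim",           # assumed base image tag
    workdir="/app",                      # assumed path
    install_cmd="npm install",           # assumed install step
    start_cmd=["node", "server.js"],     # the start command from the text
)
dockerfile = render_dockerfile(spec)
print(dockerfile)
```

The point is only that the runtime, the libraries, the working directory, and the start command travel together as one unit, which is what makes the image portable.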

In order to understand the next part of containers, I have to oversimplify what operating systems are. Please read up on a technical definition of an OS, but essentially an operating system is a bunch of software (instructions) running between the hardware and your application or user. It helps translate requests from the high level (the user, or an application written in a language like Java or C++) to the low level (instructions the hardware can execute).

With containers, you are dividing this operating system among multiple containers, essentially doing operating system virtualization. The operating system software (the bunch of instructions) is loaded into memory. The kernel is the main piece of that software; it does a lot of the low-level tasks and lives in the kernel space in memory. All other programs, including our own application, live in the user space. All containers share the same kernel space, but each has its own user space.

Containerization is not a concept which competes with the hypervisor, which does hardware virtualization. It works hand in hand with VMs. You can run containers directly on bare-metal servers, where you are not installing or managing the extra hypervisor layer (and it is a much-debated question), but a lot of container deployments run on virtual machines, where you get the best of both worlds: the maintenance and management benefits of virtual machines, and the portability and agility of containers. A famous container runtime is Docker. There are others like runc and containerd, and there are Windows-based containers too (yes, it’s not a Linux-only concept). To run containers in a more production-ready system, where you use a bunch of machines (called a cluster), you also need a layer that orchestrates the various tasks for you. In it you create objects (or constructs) to manage your deployments, networking, security, high availability, and many more administrative and application functions. A famous orchestration layer is Kubernetes. There are others out there like Docker Swarm and Apache Mesos.

PS: Containers and container orchestration are a whole new topic in themselves. The goal of this blog series is to explain cloud in a simplified manner. I’ll probably cover these concepts in detail in some future posts.

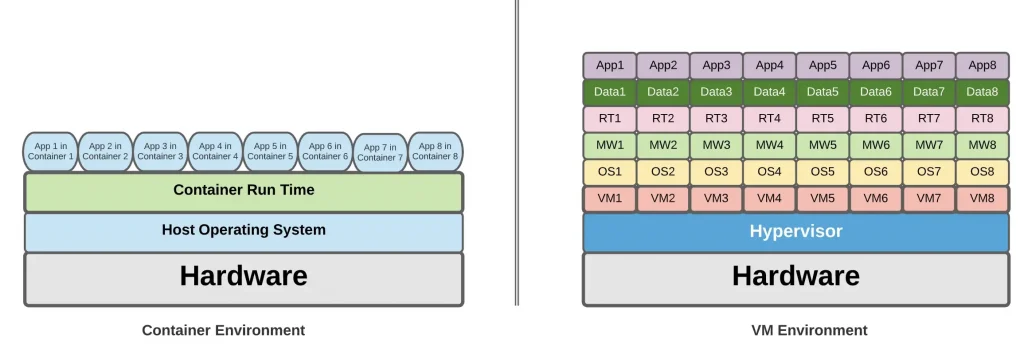

2. Google Kubernetes Engine (GKE) [Container Engine]: If your application is containerized, Google Kubernetes Engine is one of the best container orchestration engines out there. Google Cloud manages the entire control plane, and you get the worker nodes to manage and work upon. With GKE, a lot of control is still with you, but these controls apply to the cluster you want to create: the kind of scaling, network, and security constructs. So in the GCE model you were thinking of all the constructs for a single VM and taking advantage of other tools to apply the same constructs to other VMs, whereas in GKE you are thinking about the cluster as a whole.

The famous analogy of cattle vs pets applies here. As a best practice, it is always advised to treat your servers like cattle: they are numbered, you manage them as a whole, and if one goes down you replace it with a new one while the remaining 999 keep doing the job in the interim. Kubernetes is the shepherd that helps you treat your servers in that manner. In the model where you create virtual machines, install components on top of them, and repeat this for the next 999 machines manually or with tools, you are treating your servers as pets: if one goes down, you put all your effort into bringing it back up. That’s not the modern recommended way of using compute. Even when you are using GCE, there are constructs like instance groups that you should use, where you configure a group of VMs together and do rolling updates and rollbacks. All of that and much more is also available in the Kubernetes architecture.
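The cattle idea can be sketched as a tiny "reconcile" loop in Python: compare what you want (1000 healthy servers) with what you have, throw away the unhealthy ones, and create replacements. This is only a toy model under my own assumptions (the `reconcile` function and the `node-N` naming are made up); it is not how Kubernetes is actually implemented.

```python
from typing import Dict, List


def reconcile(servers: Dict[str, bool], desired: int, next_id: int) -> List[str]:
    """Remove unhealthy servers and add new ones until `desired` are present.

    `servers` maps a server name to a health flag. Returns the names created.
    """
    # Cattle, not pets: an unhealthy server is replaced, not nursed back to health.
    for name in [n for n, healthy in servers.items() if not healthy]:
        del servers[name]

    created = []
    while len(servers) < desired:
        name = f"node-{next_id}"
        servers[name] = True
        created.append(name)
        next_id += 1
    return created


fleet = {f"node-{i}": True for i in range(1000)}
fleet["node-42"] = False                 # one server goes down
replacements = reconcile(fleet, desired=1000, next_id=1000)
print(replacements, len(fleet))
```

Nobody logs in to fix node-42. The other 999 keep serving while a numbered replacement takes its place.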

So essentially you would choose Google Kubernetes engine when

- You have a containerized application, or you want to move to this modern architecture and are OK with spending some effort containerizing your existing VM-based apps.

- You want to move from a monolithic to a microservices-based architecture. Microservices is not only a container-based architecture. It’s more of a design pattern where you break your application down into smaller services (think of an ecommerce application with microservices like checkout, return, buy, upload a product, etc.) so that each is easy to rip and replace, deploy, and manage. Containers are one of the best deployment models for microservices (but there are people who use VMs for microservices too).

- If you are migrating your applications from another cloud or from on-premises, you would choose GKE if

– you want to modernize your application because the current app is not what you wanted it to be and you have to make it a lot better.

– your workloads are stateless, which is generally where Kubernetes and containers are the best bet. You can definitely host stateful workloads (like databases) on containers, but a lot goes into managing a database. Kubernetes helps to some extent (with Operators and StatefulSets), but complexity creeps in and it becomes a game of balancing the pros and cons.

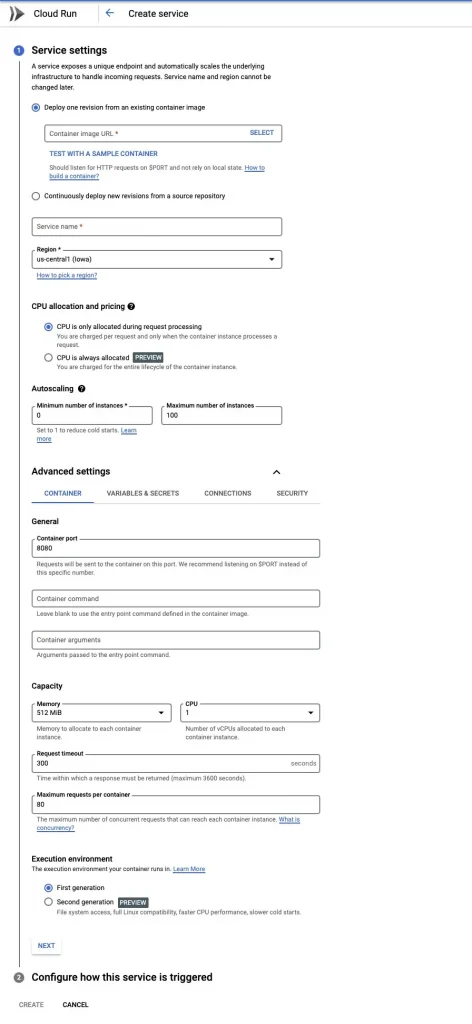

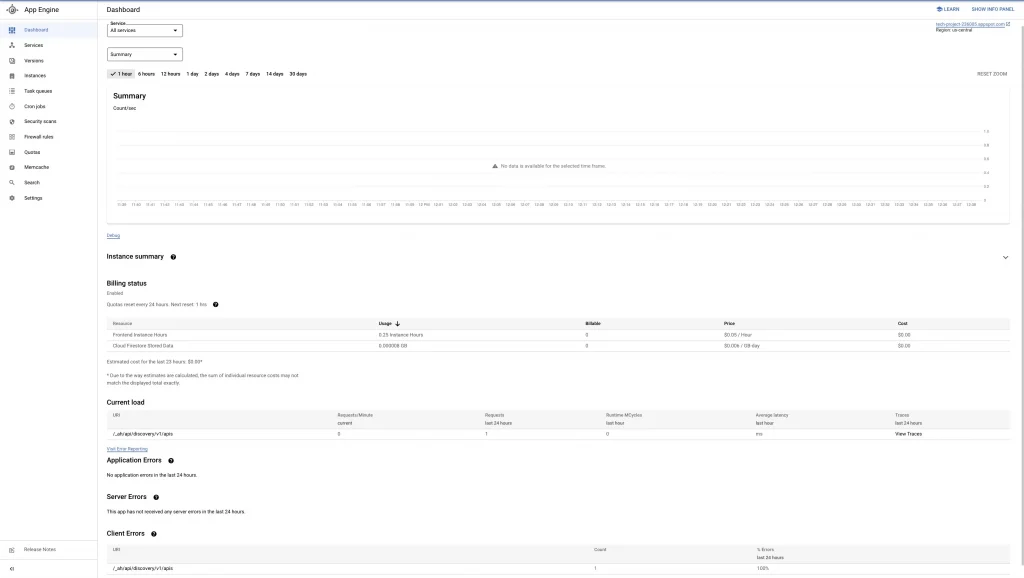

3. App Engine / Cloud Run [Serverless]: The third way that you can use cloud for hosting or migrating your applications is the serverless stack. Serverless just means that at no point would you be managing the servers (you might see them in some serverless deployments, for example there is an option to get SSH access to an App Engine instance, but you would never have to deal with them). I’ll discuss App Engine first. App Engine is the PaaS service from Google Cloud where you only need to provide your code, and App Engine takes care of the rest, which includes the hosting environment, operating system, and runtime environment. App Engine comes in two flavors, Standard and Flex. This comparison does a good job of explaining the differences between the two, but essentially App Engine Standard runs your application code in a sandbox environment, can scale the application down to zero, and is recommended for applications that “Experiences sudden and extreme spikes of traffic which require immediate scaling.” App Engine Flex, on the other hand, runs your code inside a Docker container, keeps a minimum of 1 instance even when the app is not in use, and is recommended for “applications that receive consistent traffic, experience regular traffic fluctuations, or meet the parameters for scaling up and down gradually.”

Cloud Run, based on the open source Knative stack, runs containers in a serverless manner. The difference between App Engine Flex and Cloud Run is that Cloud Run can scale down to zero, and there are other differences in the billing models, where Cloud Run offers more granular pricing. But this is not to say that one is better than the other. Your parameters might be different, and it is recommended to try both services and find out for yourself which one suits your use case. You can see and check the difference here.

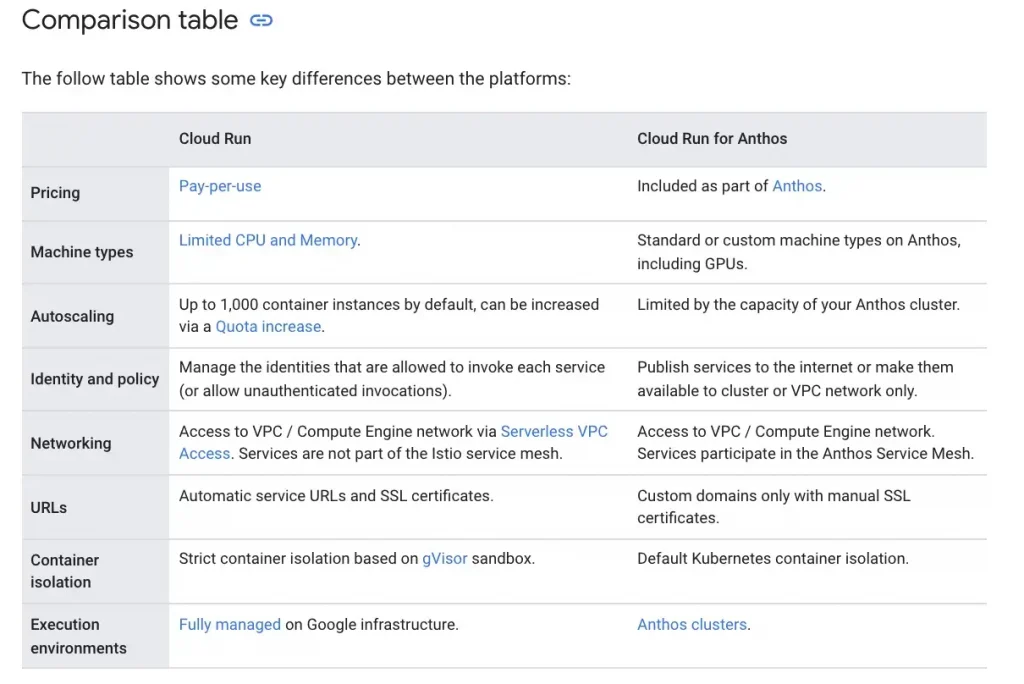

There is another option called Cloud Run for Anthos, where you run Knative-based Cloud Run in your own GKE environment with an Anthos subscription. You can scale the Pods (a Kubernetes construct) down to zero, but since the nodes are not scaled down, you essentially pay even if your application is not receiving any traffic. It doesn’t support background processes, but since it runs on your own GKE clusters beside other applications, you get a lot of control; some of it is highlighted here (like choosing your compute, memory, and autoscaling requirements).

Note: While WebSocket use and GPU/TPU access are technically possible with Cloud Run for Anthos, they are not officially supported.

So to conclude, you should consider a serverless way of hosting your applications based on the following:

- Your team. The main criterion is how big and how skilled your team is. If you feel that your team should spend its time on other value-add activities, like writing the most efficient code possible, rather than managing servers, then serverless is your best friend. You would still need a DevOps (or CloudOps) team to make sure the code is deployed smoothly on the serverless infra, but it can be a small team compared to the team managing VMs, storage, backup, etc. The skill set of a serverless team is slightly different, so bear that in mind before hiring.

- Your budget. At the end of the day, most decisions in IT are based on the question “Am I meeting my IT budget?”. The budget is set based on the overall direction the company is heading in, and it’s important that you don’t overrun it. Is serverless cheaper than hosting on a VM? The short answer is that it depends. If your web application can scale down to zero (for example at night), there is no background processing, and the number of requests is not that huge, Cloud Run would be the best choice. But if the number of requests becomes too large, background processing is required, and you want to keep a minimum number of instances at all times, Cloud Run can be more expensive than GCE. You would then have to see how expensive that is compared to hiring a full-fledged DevOps team. I deliberately didn’t put an exact number on the number of requests, because it is advisable to run a test comparison of your web application on VMs vs Cloud Run (or other serverless options) to get a cost comparison that is specific to your use case. There might be other scenarios in which one is better than the other, but it is always best to get the right comparison by testing it yourself.
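To make the "it depends" a little more tangible, here is a back-of-the-envelope sketch in Python. All the prices below are hypothetical numbers I picked to keep the arithmetic easy. They are not GCP list prices, and the model ignores things like request concurrency, minimum instances, and the cost of the team.

```python
def monthly_cost_vm(hourly_rate: float, hours: float = 730.0) -> float:
    """An always-on VM is billed for every hour, busy or idle."""
    return hourly_rate * hours


def monthly_cost_serverless(requests: int, seconds_per_request: float,
                            rate_per_busy_second: float) -> float:
    """A scale-to-zero service is billed only while it is handling requests."""
    return requests * seconds_per_request * rate_per_busy_second


# Hypothetical prices, chosen only for illustration.
VM_HOURLY = 0.05              # currency units per hour
BUSY_SECOND = 0.00003         # currency units per second of request handling
SECONDS_PER_REQUEST = 0.5

vm = monthly_cost_vm(VM_HOURLY)
for requests in (100_000, 1_000_000, 10_000_000):
    sls = monthly_cost_serverless(requests, SECONDS_PER_REQUEST, BUSY_SECOND)
    cheaper = "serverless" if sls < vm else "VM"
    print(f"{requests:>10} requests/month: VM {vm:.2f} vs serverless {sls:.2f} -> {cheaper}")

# Number of monthly requests at which the two costs are equal in this toy model.
break_even = vm / (SECONDS_PER_REQUEST * BUSY_SECOND)
print(f"break-even at roughly {break_even:,.0f} requests/month")
```

With these made-up numbers, serverless wins at low volume and the VM wins at high volume. Where the crossover sits for your application is exactly what a real test comparison would tell you.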

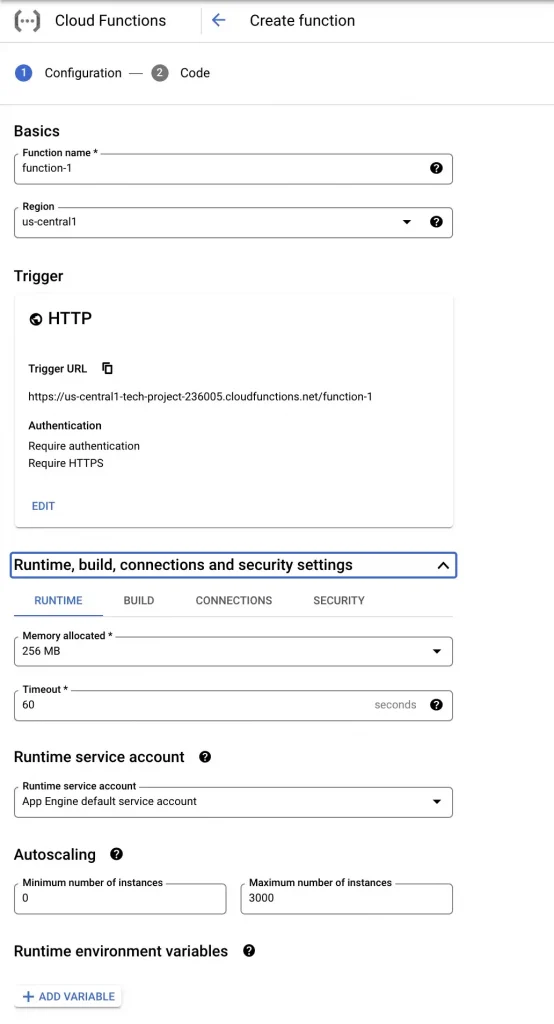

4. Cloud Functions / Cloud Run with Eventarc [Event Driven Serverless]: An application is not a single big chunk which keeps running the entire day serving users. It’s made up of different modules, each with a different function and purpose. For example, in a simple web application which converts a .doc file to .pdf, the document processing module only starts working when someone uploads a file. Similarly, in an ecommerce application, a person like me spends most of the time looking for the best deal and only places an order after a lot of research, so order processing only comes in when someone clicks the buy button. The point is that many components of an application are event driven: do this when that happens. In a traditional monolithic world, you probably run your VMs 24×7 because you can’t predict when users will click buy or upload a document. In a modern world where you are dealing with microservices, you have the option of using an event-driven framework.

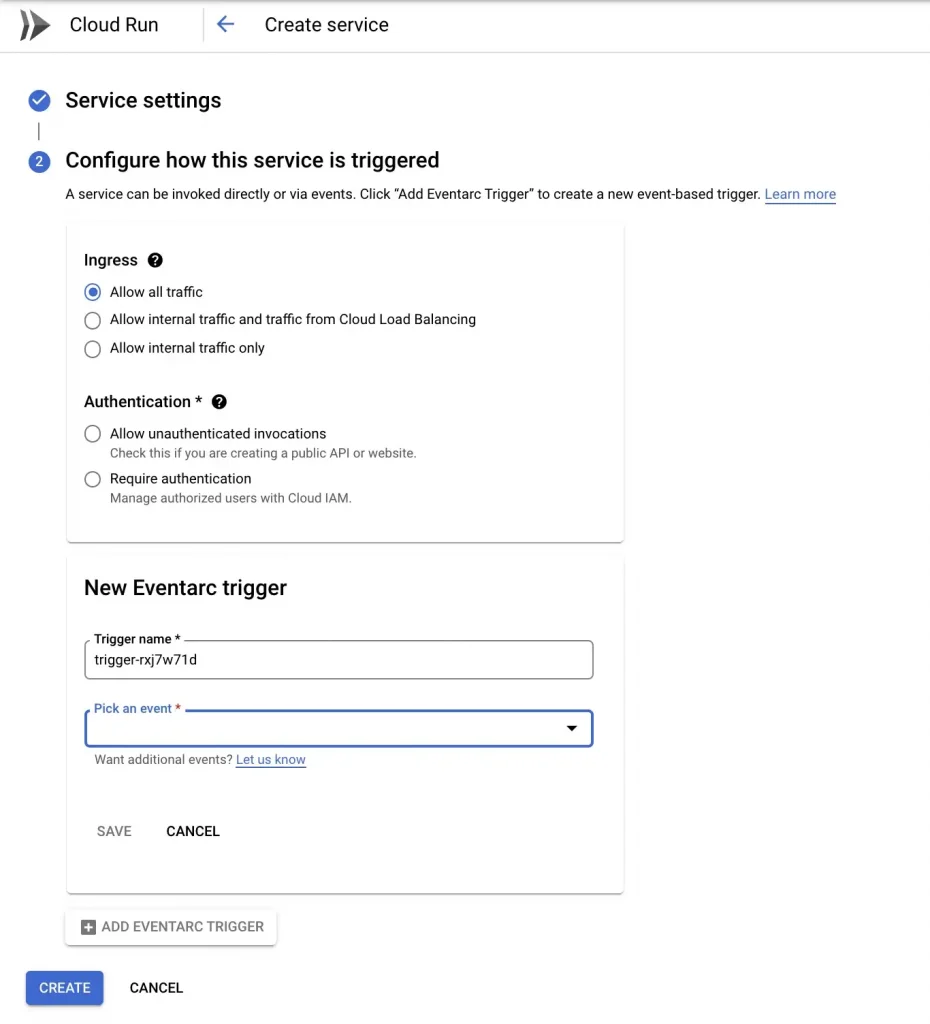

There are two choices on GCP at this point. The first is Cloud Functions, where you only have to write the code that runs in response to a trigger. The other is Cloud Run, where you define an Eventarc trigger and provide the container image that runs in response to it.
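For the .doc to .pdf example from earlier, the "code in response to a trigger" could look roughly like the Python sketch below. The `(event, context)` entry point follows the shape of Python background functions triggered from Cloud Storage, where the event carries the bucket and object name. The function names, the bucket, and the file are made up, and the conversion itself is only a placeholder.

```python
import os


def convert_to_pdf(bucket: str, name: str) -> str:
    """Hypothetical placeholder: a real version would download, convert, and upload."""
    stem, _ = os.path.splitext(name)
    return f"gs://{bucket}/{stem}.pdf"


def on_upload(event: dict, context=None):
    """Entry point, invoked once per uploaded object (the trigger)."""
    name = event["name"]
    if not name.lower().endswith(".doc"):
        return None                      # not a document we handle, so do nothing
    # The return value is only used here to make the local dry run visible.
    return convert_to_pdf(event["bucket"], name)


# Local dry run with a hand-written event instead of a real upload.
result = on_upload({"bucket": "my-uploads", "name": "reports/q3.doc"})
print(result)
```

Notice that there is no server, loop, or listener in this code. The platform calls the function when the event happens, and nothing runs (or is billed) in between.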

Before choosing any service, always have a look at its limitations page to check that your application does not hit any of them. You can find the Cloud Functions Quotas and Limitations and Cloud Run Eventarc pages here. An important difference at this stage is the time limit. Cloud Functions, at this point in time, supports only 9 minutes of processing time, whereas Cloud Run can do 60 minutes.
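That time-limit check can be written down as a few lines of Python. The limits are the ones quoted above as of writing and may well have changed, so treat the numbers as assumptions and confirm them on the limitations pages. The `pick_event_service` helper is my own illustration.

```python
from typing import Optional

# Limits as quoted in this post at the time of writing; verify before relying on them.
MAX_MINUTES = {"Cloud Functions": 9, "Cloud Run": 60}


def pick_event_service(expected_minutes: float) -> Optional[str]:
    """Return the first listed service whose time limit covers the job, else None."""
    for service, limit in MAX_MINUTES.items():
        if expected_minutes <= limit:
            return service
    return None


for minutes in (5, 30, 90):
    print(minutes, "minutes ->", pick_event_service(minutes))
```

A job that fits neither limit is a hint that it may not belong in an event-driven serverless service at all.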

To conclude, you would use an event driven serverless framework when

- Your application does work that depends on external triggers.

- You have a microservices-based architecture, because only then can you use these event-driven serverless frameworks for the microservices that depend on those external triggers.

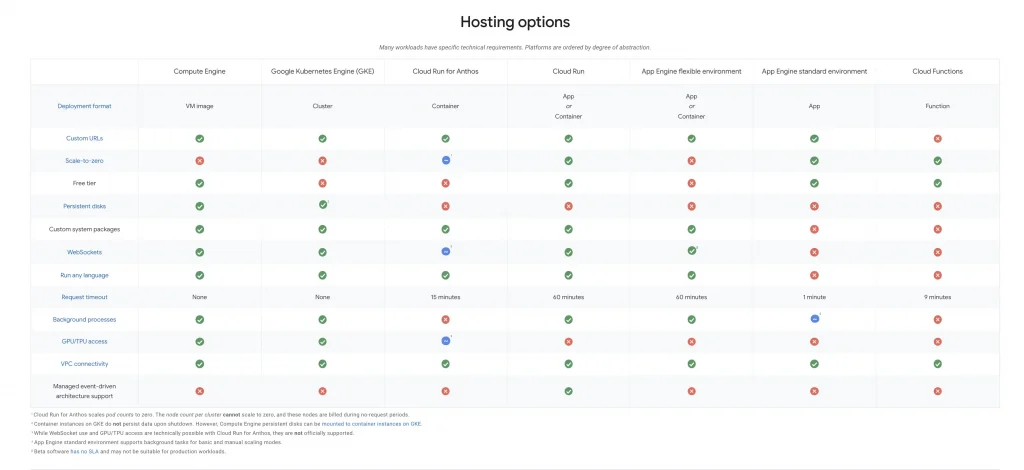

So those are the various options that you have to think about while deciding where to run your compute. The accompanying picture is a good summary of all the options that we discussed (the original can be found here).

Hopefully, by this point in the blog, you understand the compute options on cloud. An important piece that goes together with compute is storage, and that is what we will study next in Part 4: Where do I store my data on Cloud?
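The same choices can also be written as a rough decision helper in Python. This is just my reading of the criteria discussed in this post turned into if-statements. The `Workload` fields and the order of the checks are my own assumptions, not an official rule, so use it as a starting point for your own evaluation.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    vendor_certified_for_vm: bool = False   # COTS/ISV app certified only on VMs
    needs_os_control: bool = False          # you want to pick OS, patches, hardening
    containerized: bool = False             # app is (or will be) packaged as containers
    event_driven: bool = False              # work starts only on external triggers
    wants_cluster_control: bool = False     # you want to shape the cluster yourself


def suggest_compute(w: Workload) -> str:
    """Map the questions discussed in this post to a first suggestion."""
    if w.vendor_certified_for_vm or w.needs_os_control:
        return "Compute Engine"
    if w.event_driven:
        return "Cloud Run with Eventarc" if w.containerized else "Cloud Functions"
    if w.containerized:
        return "GKE" if w.wants_cluster_control else "Cloud Run"
    return "App Engine"


print(suggest_compute(Workload(vendor_certified_for_vm=True)))
print(suggest_compute(Workload(containerized=True, wants_cluster_control=True)))
print(suggest_compute(Workload(event_driven=True)))
```

Real decisions also involve budget, team skills, and service limits, which a handful of booleans cannot capture.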

Check out the other parts here:

- Learning Cloud through GCP - Part 1: What is Cloud ? where I have tried to demystify cloud in as simple a manner as possible so that our mind can put a picture to it (Is Cloud Model similar to a Vending Machine / a utility company ?)

- Learning Cloud through GCP - Part 2: How can I consume Cloud ? where I first discuss the layers of a software application and then the different service models in which those layers are bundled and sold by a Cloud vendor with a Shared Responsibility Model. (IaaS, PaaS, SaaS, FaaS, XaaS)

- Learning Cloud through GCP - Part 3: Where do I compute on Cloud ? (this blog)

- Learning Cloud through GCP - Part 4: Where do I store my data on Cloud ? where I try to answer a very pertinent question on how to select the right storage unit to hold your data. (OLTP vs OLAP, ETL vs ELT, SQL vs NoSQL, File vs Block Storage, What is NewSQL ?)

- Learning Cloud through GCP - Part 5: How do I connect to my Cloud ? where I discuss some of the important networking and security constructs available on cloud. (Load Balancers, DNS, CDN, WAF, VPC)

The original article was published on Medium.