By Abhinav Bhatia. Dec 6, 2021

Today, where people want cloud to be is not about [infrastructure and packaged apps]. It’s really about, ‘Can you give me new capabilities that I could not get before?’

Thomas Kurian, CEO, Google Cloud

In the last part we discussed the compute options on cloud, classified into four: VMs, Container Engine, Serverless and Event-Driven Serverless. We went through what these are and some of the criteria that might help you choose between them.

A few Disclaimers

All opinions discussed in this blog series are my own and should in no way be attributed to the companies that I am or have been a part of. These are my learnings, which I have tried to put in as simple a manner as possible. I understand that oversimplification can sometimes lead to an alternate version which might not be true. I will try my best not to oversimplify, but my only request is to take all of this with a pinch of salt. Validate from as many sources as possible.

Compute and storage are often spoken about in the same breath. You can’t compute something without data. Data processing happens at every point of the application stack, and sometimes you need a place to store that data.

Let’s take the example of an e-commerce application. I go to its website and search either as a guest or after authenticating myself. In both cases, the e-commerce business needs a way to log my interaction so that it can learn about my purchase behaviour and recommend products to me in the future. So it stores my details somewhere, either as a guest (which device I am coming from, cookie information, time of day, etc.) or as an authenticated user (my profile information, which would include my address and card information). I enter my search for a trolley bag and am presented with a multitude of options. Each of those options has some preview information listing the price, available delivery date, an image and a few other details. On clicking any one of the options, I am presented with a detailed catalogue page for the product, which has several images, detailed information, reviews, a place to ask questions, similar products that others purchased and a whole plethora of information to help me finalize my purchase. After much research I finally pick one and click on buy. The site then asks for, or rather confirms, my address and card details and places the order. Once an order is placed, it keeps tracking and showing updates (again, data which is stored somewhere) until the order is delivered (or fulfilled). If I choose to return the product because it has some problem, that’s another processing chain with a lot of details, like reason for return, return tracking, refund processing and refund invoice, being stored somewhere.

The point is that every application has to deal with data and storage in its lifecycle. Some of that data has to persist (be stored permanently), some is only available for a certain duration from a cache and expires afterwards (a new price or a new image of a product), and some is needed only as scratch space or a temporary storage area for the application.
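To make the cached case a little more concrete, here is a minimal sketch of a cache whose entries expire after a fixed time. The `TTLCache` class, its method names and the injectable `clock` parameter are my own illustration, not any particular product’s API; the fake clock is only there so that expiry can be shown without actually waiting.

```python
import time


class TTLCache:
    """A tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be demonstrated
        self.entries = {}           # key -> (expires_at, value)

    def put(self, key, value):
        self.entries[key] = (self.clock() + self.ttl, value)

    def get(self, key):
        entry = self.entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self.entries[key]   # expired: drop it so the caller refetches
            return None
        return value


# Usage with a fake clock (hypothetical values).
now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("price:trolley-bag", 59.99)
before_expiry = cache.get("price:trolley-bag")   # still cached
now[0] = 61.0                                    # pretend 61 seconds passed
after_expiry = cache.get("price:trolley-bag")    # expired, so None
```

When the cache returns nothing, the application would go back to the persistent store (the source of truth) and repopulate the entry.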

Types of Data

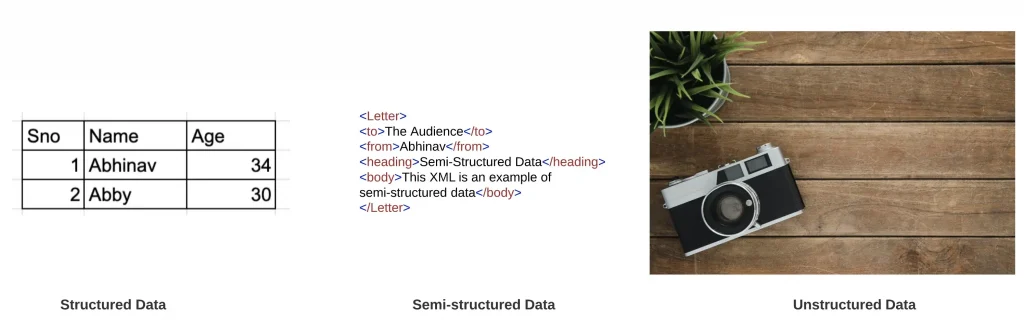

Data can be classified under various buckets, but the most important classification is by the structure of the data. If the data is organizable and can be put into rows and columns, as in a database, it is called structured data. If the data cannot be put into rows and columns but has some organizational properties, it is called semi-structured data (like XML or JSON). Lastly, if the data cannot be organized in a predefined manner, like images, PDFs and videos, it is called unstructured data. Typically, machine learning has come to the rescue to make sense of this data and analyze it (see Vision AI for images, Video AI for videos and Natural Language AI for text).
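A small sketch of how the three kinds of data might look from inside a program. The sample records are made up for illustration; only Python’s standard `csv` and `json` modules are used.

```python
import csv
import io
import json

# Structured: every record has the same columns, so it fits rows and columns.
structured_csv = "order_id,product,price\n1,trolley bag,59.99\n2,phone,299.00\n"
rows = list(csv.DictReader(io.StringIO(structured_csv)))

# Semi-structured: self-describing keys, but records need not share fields.
semi_structured = json.loads(
    '[{"product": "trolley bag", "wheels": 4},'
    ' {"product": "phone", "specs": {"ram_gb": 8, "camera_mp": 48}}]'
)

# Unstructured: raw bytes with no fields to query (the first bytes of a PNG file).
unstructured = bytes([0x89, 0x50, 0x4E, 0x47])

price_of_first_order = rows[0]["price"]             # column lookup by name
phone_ram = semi_structured[1]["specs"]["ram_gb"]   # nested key lookup
blob_size = len(unstructured)                       # about all you can ask of a blob
```

Notice that the only question the program can ask of the unstructured blob without extra tooling is its size, which is why ML services are used to extract meaning from such data.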

Databases

Databases are an important pillar of an application that you have to think about while you architect or conceptualise it. These are applications (or middleware) whose primary purpose is to manage the system of record, the data. A Database Management System (DBMS) performs critical operations like concurrency control, integrity, security, backup and many more. How you structure and store your data is a very important decision every architect makes while designing an application, because the database is the piece responsible for storing your data (your source of truth) and for making sure your application can fetch information when required without spending much time doing so. If fetching is too time consuming, the application overall becomes less performant. Let’s discuss the various types of databases that are available.

1. Relational (SQL) Databases: You would typically use a relational database like MySQL, PostgreSQL, Microsoft SQL Server or Oracle when

- Your dataset has a schema: Your data has a fixed schema (tables, columns and data formats are all decided upfront and are hard to change later). Transactional data is a typical use case: in a banking application, the account transactions of customers (debits/credits) are generally stored in a relational database.

- Your dataset is relational: Your entire database can be put in a tabular format consisting of not just one table but a set of tables linked to each other. For example, a purchase table depends on a product table containing all the product IDs, and would only accept a purchase transaction if its product exists in the product table. A query may have to go through many interlinked tables to get your answer, and the database ensures referential integrity between them.

- Your data needs ACID properties: Your DBMS design follows the ACID properties:

Atomicity: A transaction must either succeed completely or, if it fails, be reverted to the state when the transaction started. No partial runs.

Consistency: A transaction takes the database from one valid, consistent state to another.

Isolation: The database moderates contentious access to data so that concurrent transactions appear to run sequentially.

Durability: Once a transaction completes, its data persists even in case of a power outage or any other system failure.
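Referential integrity and atomicity can be sketched with SQLite, which ships with Python. This is only an illustration on an in-memory database; the table names and the `record_purchases` helper are my own assumptions, and a production database such as MySQL or PostgreSQL would be configured differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE purchase ("
    " id INTEGER PRIMARY KEY,"
    " product_id INTEGER NOT NULL REFERENCES product(id),"
    " quantity INTEGER NOT NULL)"
)
conn.execute("INSERT INTO product (id, name) VALUES (1, 'trolley bag')")
conn.commit()


def record_purchases(conn, items):
    """Insert all purchases in one transaction; roll back everything on failure."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            for product_id, quantity in items:
                conn.execute(
                    "INSERT INTO purchase (product_id, quantity) VALUES (?, ?)",
                    (product_id, quantity),
                )
        return True
    except sqlite3.IntegrityError:
        return False


# Product 99 does not exist, so the second insert violates referential integrity
# and the first insert is rolled back with it (atomicity: no partial run).
ok = record_purchases(conn, [(1, 2), (99, 1)])
count = conn.execute("SELECT COUNT(*) FROM purchase").fetchone()[0]
```

Here `ok` ends up `False` and `count` stays at zero, because the valid first row is undone together with the invalid second one.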

Cloud SQL from Google Cloud is a managed Database as a Service offering which provides managed MySQL, PostgreSQL and Microsoft SQL Server at this point in time. A move to the same type of database would typically also involve the Database Migration Service, and a move to a new type of database would take the help of the Datastream (in Preview) service from GCP.

2. Non-Relational (Not Only SQL) Databases: NoSQL databases like MongoDB, Google’s Firestore, Cassandra and Redis are a popular breed of databases which are used when

- Your dataset schema is flexible: Think about a product catalogue. A product like a mobile phone would have different fields/attributes to describe its specifications compared to a product like a trolley bag. Some might have fewer fields than others. So it’s difficult to describe the schema upfront.

- Your dataset is non-relational: The data storage models in NoSQL are: Document, consisting mostly of JSON documents (like MongoDB); Key-value, consisting of key-value pairs (like Redis, Memcached or GCP’s Memorystore); Wide-column, tables with rows and dynamic columns (like Google Cloud Bigtable or Apache Cassandra); and Graph, nodes and edges (like Neo4j). To link multiple types of data you still have the option of nesting the data inside a document, for example as nested JSON.

- Follows BASE properties: Basically Available, Soft state, Eventually consistent. BASE databases prefer availability over consistency. They are Basically Available, which means their main aim is to keep data available by spreading it across multiple nodes, at the cost of immediate consistency. Data remains in a Soft state, which means its value might change over time even without new writes, because the system is Eventually consistent: while data is being replicated it is possible to read stale data, but eventually all replicas converge.
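Eventual consistency is easier to picture with a toy model. The sketch below is not how any particular NoSQL database replicates data; the `Replica` and `Cluster` classes are hypothetical, and replication is triggered by hand so that the stale read is visible.

```python
class Replica:
    """A hypothetical in-memory replica holding key -> (version, value)."""

    def __init__(self):
        self.data = {}

    def apply(self, key, version, value):
        # Keep the write only if it is newer than what this replica already has.
        current = self.data.get(key)
        if current is None or version > current[0]:
            self.data[key] = (version, value)

    def read(self, key):
        entry = self.data.get(key)
        return entry[1] if entry else None


class Cluster:
    def __init__(self, n):
        self.replicas = [Replica() for _ in range(n)]
        self.pending = []   # replication messages not yet delivered
        self.version = 0

    def write(self, key, value):
        # Acknowledge after updating one replica; the rest catch up later.
        self.version += 1
        self.replicas[0].apply(key, self.version, value)
        for replica in self.replicas[1:]:
            self.pending.append((replica, key, self.version, value))

    def replicate(self):
        # Deliver all queued updates (a real system does this in the background).
        for replica, key, version, value in self.pending:
            replica.apply(key, version, value)
        self.pending.clear()


cluster = Cluster(3)
cluster.write("price:trolley-bag", 59.99)
stale = cluster.replicas[2].read("price:trolley-bag")   # not replicated yet
cluster.replicate()
fresh = cluster.replicas[2].read("price:trolley-bag")   # converged
```

Between the write and the replication step, the third replica answers with whatever it last knew (here, nothing), which is the stale read the BASE model accepts in exchange for availability.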

Another important theorem which we should touch upon before discussing the next category is CAP, which says that in a distributed system you can only achieve two out of these three objectives:

- Consistency: Linearizability. All nodes in the system have the same view of the data.

- Availability: The nodes are always available to serve requests.

- Partition Tolerance: If the network breaks between multiple nodes, the system remains online.

People often classify distributed NoSQL databases as either AP or CP systems. This is quite a strict way of classifying databases, and if we go by this article by Martin Kleppmann, many databases do not fall cleanly into either category.
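With that caveat in mind, the textbook trade-off can still be sketched. The function below is a deliberately crude model with made-up names (`Node`, `write`, the `mode` flag): during a partition, a "CP" style write is refused unless a majority of nodes is reachable, while an "AP" style write is accepted by whichever nodes can be reached.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.value = None


def write(nodes, reachable, value, mode):
    """Attempt a write while only `reachable` nodes can be contacted.

    mode="CP": refuse unless a majority is reachable (stay consistent).
    mode="AP": accept on whatever is reachable (stay available, may diverge).
    """
    majority = len(nodes) // 2 + 1
    if mode == "CP" and len(reachable) < majority:
        return False
    for node in reachable:
        node.value = value
    return True


a, b, c = Node("a"), Node("b"), Node("c")
nodes = [a, b, c]

# A network partition leaves only node "a" reachable from this client.
cp_accepted = write(nodes, [a], "v1", mode="CP")   # refused: no majority
ap_accepted = write(nodes, [a], "v1", mode="AP")   # accepted, but b and c lag
diverged = a.value != b.value
```

Real systems sit at many points between these two extremes, which is part of why the strict AP/CP labels are contested.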

3. NewSQL: It’s the best of both worlds (SQL and NoSQL). NewSQL databases are meant for OLTP (Online Transaction Processing) applications where both ACID guarantees and horizontal scalability are required. This Wikipedia page highlights why they were conceptualised:

NewSQL is a class of relational database management systems that seek to provide the scalability of NoSQL systems for online transaction processing (OLTP) workloads while maintaining the ACID guarantees of a traditional database system

Many enterprise systems that handle high-profile data (e.g., financial and order processing systems) are too large for conventional relational databases, but have transactional and consistency requirements that are not practical for NoSQL systems.

Some of the famous databases in this category are Google Cloud Spanner, Amazon Aurora, CockroachDB and many others. If you want to understand this category of databases better, this is an interesting article about Spanner, TrueTime and the CAP theorem, written by Eric Brewer himself (who formulated the CAP theorem in the late 1990s).

Datawarehouses/Data lakes

So that was all about databases, which are part of Online Transaction Processing (OLTP) systems. These are systems typically used by the users of the application (internal or external), and they support transaction-oriented applications like banking, e-commerce or online travel agents selling airline tickets. Almost every application around you would probably have an OLTP system behind it.

But every mature business would also start thinking about investing in an Online Analytical Processing (OLAP) system, where you build tools for analysing business decisions. Suppose you have sold a million tickets across 7 countries and need to know which country had the largest number of sales, or which branch among 1000 in 7 countries is the most profitable, or need to answer a more complex query, like why sales were so low in XYZ branch. Was it because the region where the branch is located was in a complete lockdown? Basically, if you want answers about your business, you need systems that traverse all the different sources of data in your business, if needed pull from external publicly available databases (like lockdown data) as well, marry the two, do a lot of transformations and create one holistic view of what is happening in the business. This final state is called a Datawarehouse if the purpose is well defined, that is, you have a very strategic purpose of gathering certain business insights. You define your schema before data is stored and essentially perform ETL (Extract, Transform and Load) operations.
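A minimal ETL sketch, using an in-memory SQLite database as a stand-in for the warehouse. The source rows, the `lockdown_regions` set and the `sales_fact` table are all hypothetical; the point is only the order of the steps: the target schema exists first, and data is cleaned before it is loaded.

```python
import sqlite3

# Extract: rows as they might arrive from a hypothetical ticketing system.
ticket_sales = [
    {"branch": "BLR-01", "country": "in", "amount": "120.50"},
    {"branch": "SIN-03", "country": "SG", "amount": "300.00"},
    {"branch": "BLR-01", "country": "IN", "amount": "80.00"},
]
lockdown_regions = {"SG"}  # stand-in for an external public dataset


# Transform: normalise types and casing, and join in the external signal.
def transform(row):
    country = row["country"].upper()
    return (row["branch"], country, float(row["amount"]), country in lockdown_regions)


# Load: the warehouse table's schema is fixed before any data arrives.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE sales_fact "
    "(branch TEXT, country TEXT, amount REAL, in_lockdown INTEGER)"
)
warehouse.executemany(
    "INSERT INTO sales_fact VALUES (?, ?, ?, ?)",
    [transform(r) for r in ticket_sales],
)

# The analytical question: which country sold the most?
top_country = warehouse.execute(
    "SELECT country, SUM(amount) AS total FROM sales_fact "
    "GROUP BY country ORDER BY total DESC LIMIT 1"
).fetchone()
```

Because the casing of the country code was fixed during the transform step, the two Indian sales aggregate into one group instead of being split across "in" and "IN".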

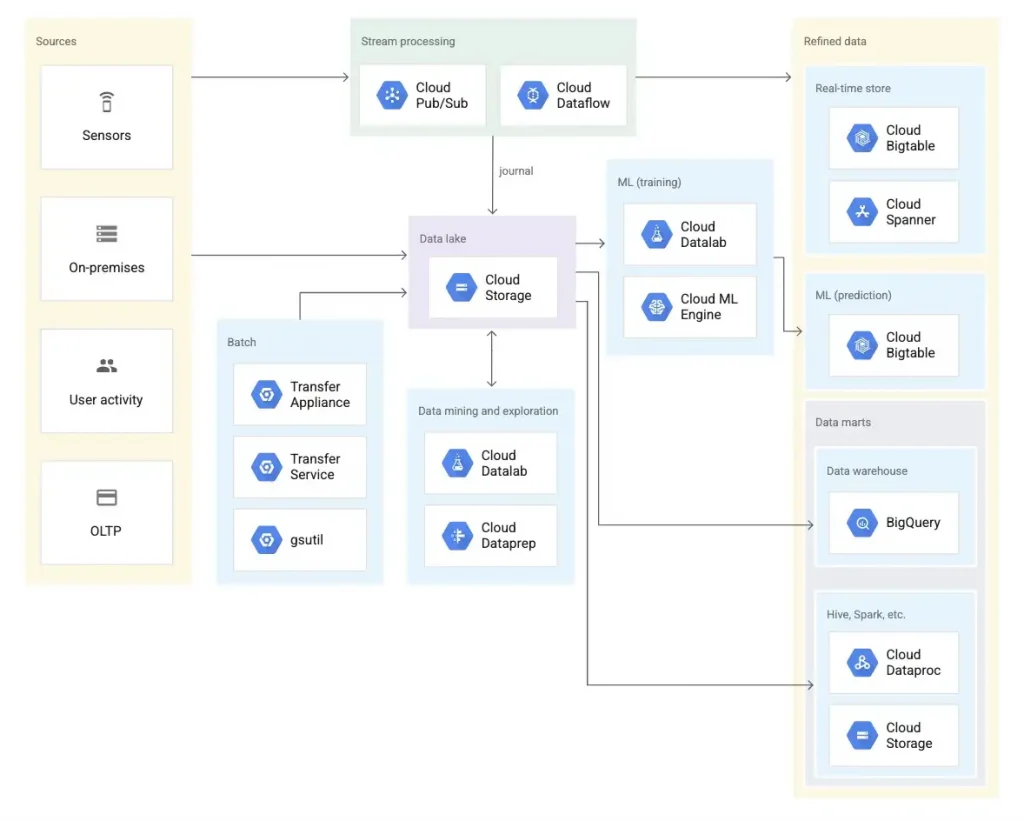

But if you are dumping all the raw data under your roof (structured, semi-structured, unstructured) into a final state where the purpose is in-depth analysis to find out how to move ahead of your competitors, or to answer queries that are still undefined, then that final state is called a Data Lake. Since the objective is different, or rather undefined, while capturing the data, we generally perform an ELT (Extract, Load and Transform) operation, and the schema is defined after the data is stored. Data lakes were born to harness the power of big data (data growing at a humongous Volume, Velocity and Variety) and use the magic of machine learning for predictive analytics. Note: You can also use machine learning techniques in a Datawarehouse.
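The ELT idea, where the schema is applied only at read time, can be sketched like this. The raw events and the two helper functions are invented for illustration; a real data lake would hold files in object storage rather than a Python list.

```python
import json

# Load: raw events are stored untouched, whatever shape they arrive in.
data_lake = [
    '{"type": "search", "query": "trolley bag", "device": "mobile"}',
    '{"type": "purchase", "product": "trolley bag", "amount": 59.99}',
    '{"type": "review", "product": "trolley bag", "stars": 4, "text": "Sturdy"}',
    '{"type": "purchase", "product": "phone", "amount": 299.0}',
]


# Transform (later, per question): a schema is imposed only when reading.
def revenue_by_product(raw_events):
    totals = {}
    for line in raw_events:
        event = json.loads(line)
        if event.get("type") == "purchase":
            product = event["product"]
            totals[product] = totals.get(product, 0.0) + event["amount"]
    return totals


def average_stars(raw_events, product):
    stars = [
        event["stars"]
        for event in map(json.loads, raw_events)
        if event.get("type") == "review" and event.get("product") == product
    ]
    return sum(stars) / len(stars) if stars else None


revenue = revenue_by_product(data_lake)
bag_rating = average_stars(data_lake, "trolley bag")
```

The second question (average rating) did not have to be anticipated when the data was stored, which is the flexibility a data lake trades against the upfront discipline of a warehouse.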

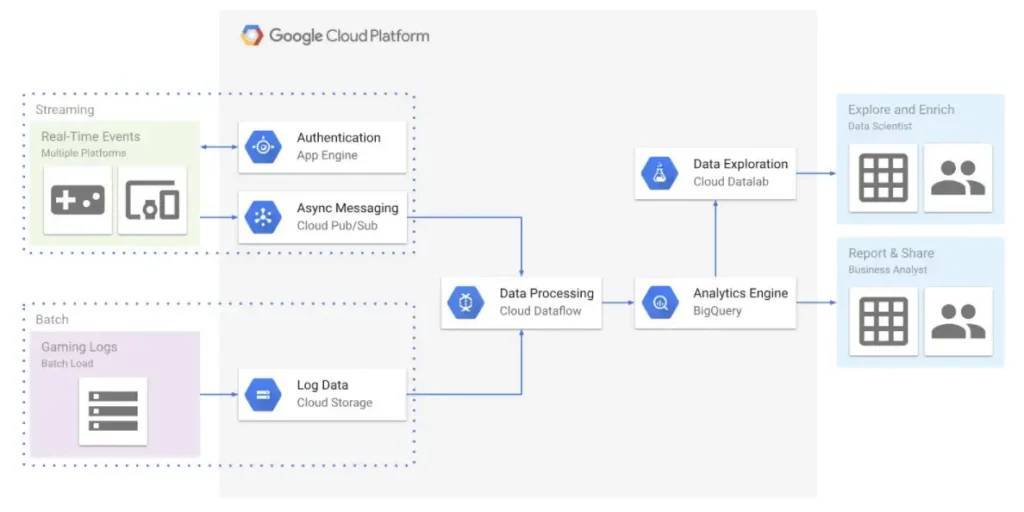

BigQuery from Google Cloud does a fantastic job of acting as a Datawarehouse, as well as being a great companion to Cloud Storage (which in itself can be used as a data lake) for building a data lake. It is serverless (and hence has all the advantages we discussed in the last blog on serverless compute) and has an innovative pricing model. There are a lot of other systems you would use along with BigQuery to create your data pipeline, as shown in these wonderful Google Cloud architectures.

File Storage

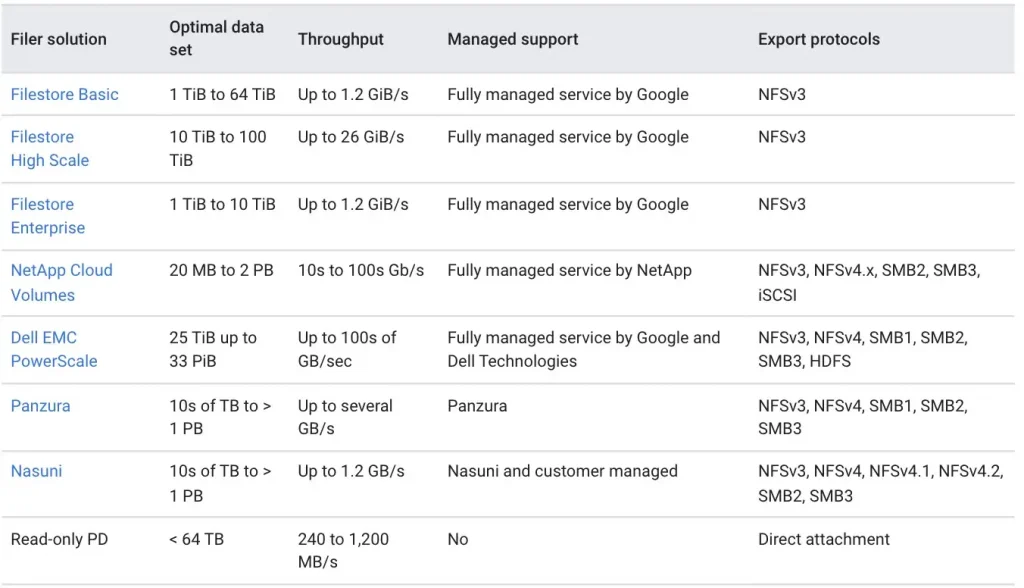

Another type of storage service that is often required in applications is storage for unstructured data like images, videos and other files. In the old days, and in some companies even now (because of the nature of the applications they use, the requirements of certain COTS applications, or for internal sharing of documents within an organisation), a file server is typically used to store these files. A file server takes care of responsibilities like permission management (who can access what), file locking (only one person can write to a file at a time) and resolving conflicts in cases where multiple people are allowed to write to a file. It’s a typical application that uses various protocols to do the job:

- SMB or Server Message Block, which is natively supported on Windows and macOS systems. Linux and Unix systems generally have to use Samba or CIFSD (an open source version of Common Internet File System) to access or serve SMB.

- NFS or Network File System: Used primarily for Linux/Unix OS.

With the help of the above two protocols, users or applications get a remote file system for use/execution (for example, watching a video on a shared file server). They can also copy a file and use it locally. If the use case only involves downloading or uploading files over the Internet, SFTP (SSH File Transfer Protocol, often expanded as Secure File Transfer Protocol) is generally used.

When file storage is served over a network by dedicated file servers (often set up as a cluster), that is typically called Network Attached Storage (NAS), and it is the most popular form of delivering an enterprise-class file server.

GCP has the following options when it comes to using File Servers.

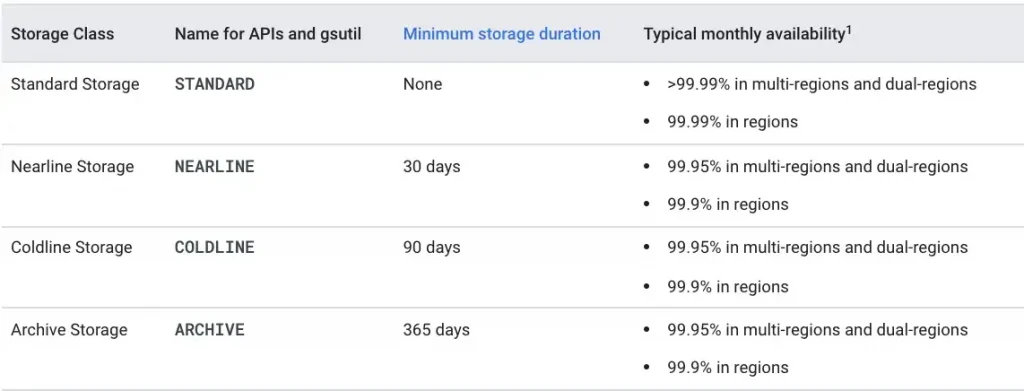

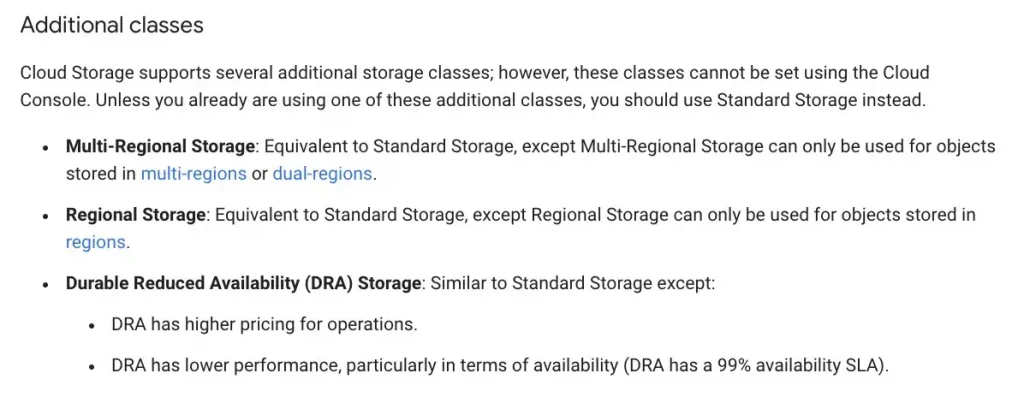

Object Storage

Another type of storage which is typically super useful (and also super popular) is object storage. It serves almost the same purpose as a file server, which is storage and retrieval of objects (like images, text files, videos, CSS and JS files). The difference between object and file storage lies in implementation as well as architecture. Files on file servers are accessed over a local area network (inside a VPC, more on that in the next blog) using protocols like SMB or NFS (which are operating system dependent): you essentially map a network drive and store, retrieve and execute files. In an object storage system, objects are typically accessed over the Internet (or through private connectivity options like Private Google Access) and the only protocol you need is HTTP/S, which is available inside your application and inside your operating system (using Cloud SDK tools like gsutil). Although it is not recommended and highly experimental, a bucket can also be mapped into your operating system (using Cloud Storage FUSE).

The second difference lies in the architecture. The two are completely different systems. While file servers give you a hierarchical file system (C:/A/B/c.txt), an object storage service can present the same view but is really a flat namespace. Cloud Storage is GCP’s object storage service. It is hugely popular and is also used (as discussed earlier) as a data lake, for backup storage and as a transient storage system.
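The "flat, but looks hierarchical" idea can be sketched with a plain dictionary. This is not the Cloud Storage client API; the `bucket` dictionary and the `list_objects` helper are hypothetical, and they only mimic the common prefix-and-delimiter style of listing that makes a flat namespace look like folders.

```python
# A bucket is a flat mapping from full object name to bytes; "/" has no special meaning.
bucket = {
    "images/bags/red.png": b"<bytes>",
    "images/bags/blue.png": b"<bytes>",
    "images/phones/x1.png": b"<bytes>",
    "css/site.css": b"<bytes>",
}


def list_objects(bucket, prefix="", delimiter="/"):
    """Return (objects, pseudo-folders) directly under a prefix, like a directory listing."""
    objects, prefixes = [], set()
    for name in sorted(bucket):
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        if delimiter in rest:
            # Collapse everything past the next delimiter into a pseudo-folder.
            prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            objects.append(name)
    return objects, sorted(prefixes)


top_level = list_objects(bucket)
under_images = list_objects(bucket, prefix="images/")
under_bags = list_objects(bucket, prefix="images/bags/")
```

There are no directories to create or delete here: a "folder" exists only because some object name happens to start with that prefix.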

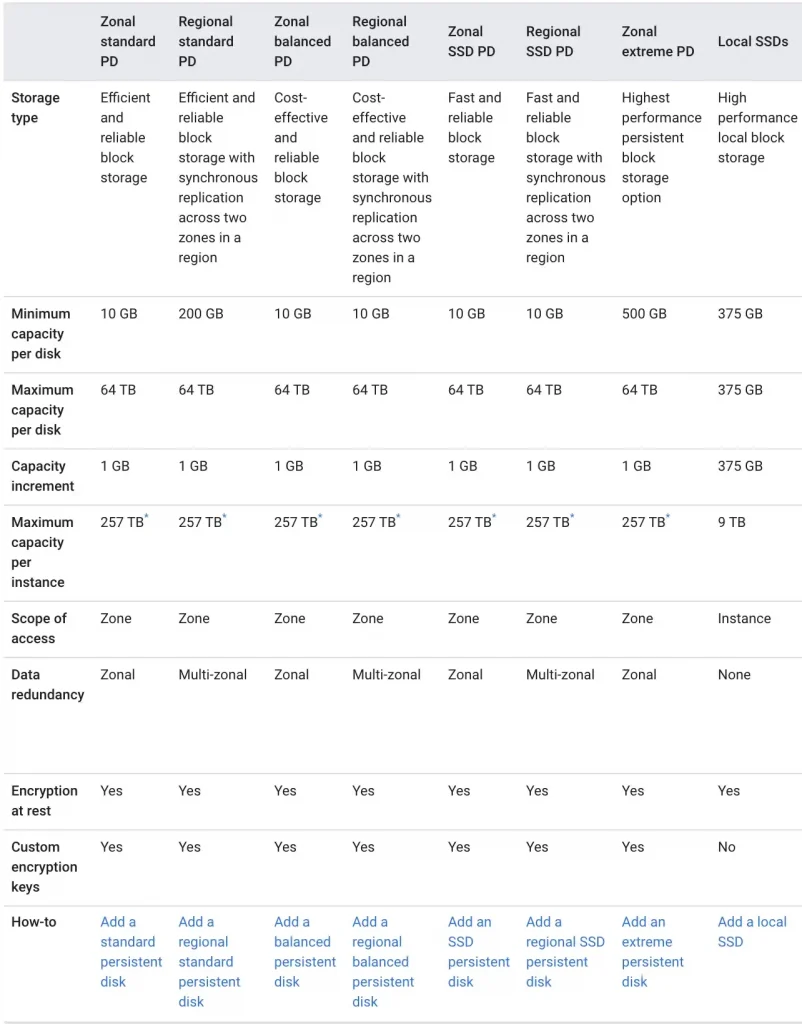

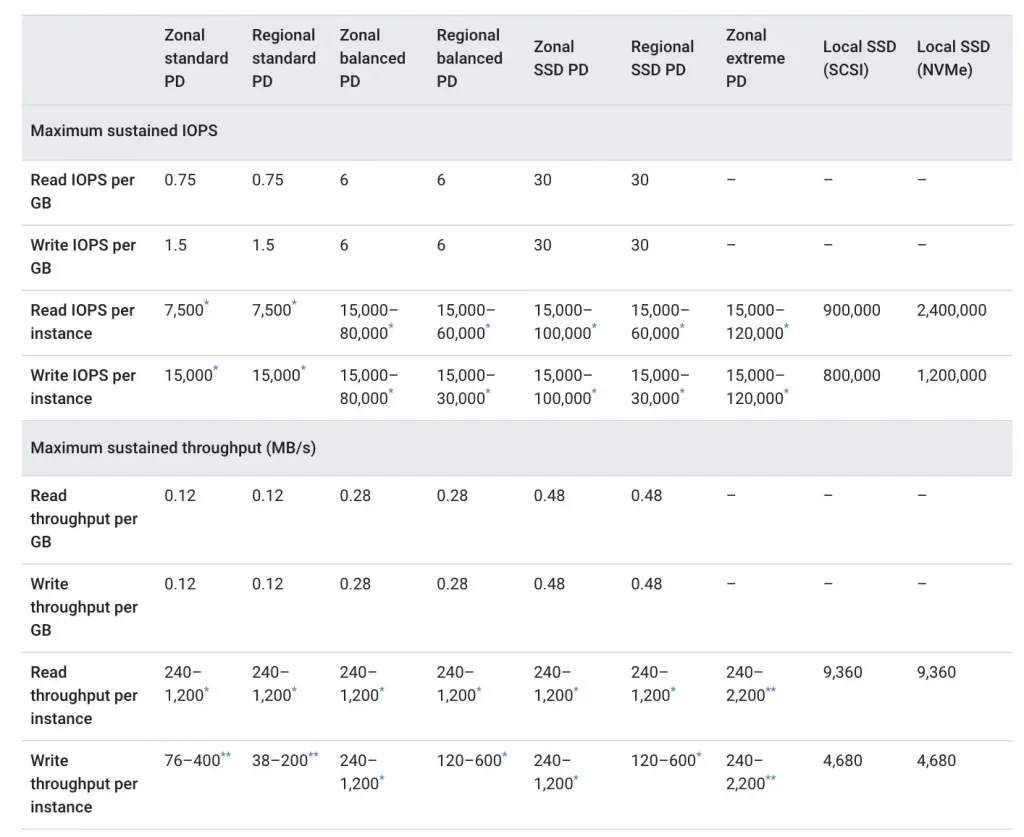

Block Storage

The last category of storage that I want to discuss is block storage. This is mostly used in a VM-based architecture (described in my last blog): when you launch a VM, you also specify the amount of storage to be allocated to it, on top of which your operating system comes preinstalled (in the cloud, the customer configures and manages it). You would typically need a boot drive for the operating system and additional data drives to store application binaries and other files. Since you chose an Infrastructure as a Service model, allocating a block storage volume, deleting it when you delete a VM, backing it up (using snapshots, i.e. PD snapshots) and increasing its size when storage runs out (GCP provides you with tools, but you have to perform the operation) are all activities that you need to do yourself.

Note: In the case of Cloud SQL, which is a PaaS service, GCP has a very neat checkbox which increases the storage automatically when checked (configuring it is still the customer’s job).

Block storage is available in various forms, which define its performance characteristics (latency, throughput, IOPS), and in zonal or regional mode. In the latter, synchronous replication is configured across two zones in a region to make sure that the storage is highly available.
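Throughput and IOPS are related: throughput is roughly the number of I/O operations per second multiplied by the size of each operation. The small calculation below shows why the same IOPS figure can mean very different throughput. The numbers are made up for illustration and are not the limits of any GCP disk type.

```python
def throughput_mb_per_s(iops, io_size_kb):
    """Throughput implied by an IOPS figure at a given I/O size (1 MB = 1024 KB)."""
    return iops * io_size_kb / 1024


# Same IOPS, different I/O sizes (hypothetical workload numbers).
small_random = throughput_mb_per_s(iops=3000, io_size_kb=4)        # database-style small reads
large_sequential = throughput_mb_per_s(iops=3000, io_size_kb=256)  # streaming large files
```

With small 4 KB operations the disk moves under 12 MB/s, while the same operation count at 256 KB moves 750 MB/s, so it helps to know which of the two numbers your workload is actually bound by.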

That was indeed a very long topic of discussion. But it is not over yet. In the next blog, Part 5: How do I connect to my cloud, I will discuss some of the networking and security components that you would typically use while building your application on cloud.

Check out the other parts here:

- Learning Cloud through GCP — Part 1: What is Cloud ? where I have tried to demystify cloud in as simple manner as possible so that our mind can put a picture to it (Is Cloud Model similar to a Vending Machine / a utility company ?)

- Learning Cloud through GCP — Part 2: How can I consume Cloud ? where I would first discuss the layers of a software application and then understand the different service models in which those layers are bundled and sold by a Cloud vendor with a Shared Responsibility Model. (IaaS, PaaS, SaaS, FaaS, XaaS)

- Learning Cloud through GCP -Part 3: Where do I compute on Cloud ? where I would touch upon the several compute options available on cloud and how to choose between them. (VM vs Kubernetes vs Serverless vs Event Driven Serverless Framework)

- Learning Cloud through GCP -Part 4: Where do I store my data on Cloud ? (this blog)

- Learning Cloud through GCP -Part 5: How do I connect to my Cloud ? where I would discuss some of the important networking and security constructs available on cloud. (Load Balancers, DNS, CDN, WAF, VPC)

The original article was published on Medium.