By Animesh Rastogi. May 17, 2022

Problem Statement

Recently, I worked on a customer requirement where the customer had a regional NFS Filestore instance, yet their application VMs were spread across multiple US regions because GPUs were unavailable in the desired region.

When the VM and the Filestore instance were in the same region, everything was fine. When they were in different regions, latency increased significantly and the user experience suffered.

Since GPUs are in such acute shortage across hyperscalers and we can’t guarantee the availability of GPUs in the desired region, we decided to solve the issue at the storage layer instead. That meant we needed a multi-regional storage service that replicates data across multiple US regions and offers consistent read and write performance to VMs spread across the US multi-region.

Google Cloud Storage seems like an obvious choice in this case. It fulfils all the aforementioned criteria and is much more cost-effective than Filestore. You can also mount a Cloud Storage bucket as a file system using Cloud Storage FUSE (gcsfuse) and then upload and download objects using standard file system semantics. Perfect, right? Nope.

Cloud Storage FUSE has much higher latency than a local file system, and although it exposes a file system interface, it is not like an NFS or CIFS file system on the backend. It retains the fundamental characteristics of Cloud Storage: it scales well in size and aggregate throughput, but it would not be able to sustain our high IOPS requirement.

Potential Solution

The solution we came up with is a hybrid approach: write all the data to a Local SSD, upload it to Cloud Storage during shutdown, and download it from Cloud Storage back to the Local SSD during startup. We decided to use Google’s private network for the best price-performance.

Now, this seems like it should work. However, while doing the PoC, we ran into the performance issue described below.
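The startup and shutdown steps of this hybrid approach could be wired up roughly as follows. This is a minimal sketch, not the setup we actually ran: the local path `/mnt/disks/ssd/data`, the bucket path `gs://example-bucket/data`, and the `DRY_RUN` switch are hypothetical, and it uses `gsutil rsync` in place of a plain recursive copy.

```shell
# Sketch of the hybrid approach: restore from Cloud Storage at startup,
# persist back to Cloud Storage at shutdown.
# LOCAL_DIR and BUCKET_URI are hypothetical placeholders.
LOCAL_DIR="${LOCAL_DIR:-/mnt/disks/ssd/data}"
BUCKET_URI="${BUCKET_URI:-gs://example-bucket/data}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Intended for the startup path: bucket -> Local SSD.
restore_from_bucket() {
  run gsutil -m rsync -r "$BUCKET_URI" "$LOCAL_DIR"
}

# Intended for the shutdown path: Local SSD -> bucket.
persist_to_bucket() {
  run gsutil -m rsync -r "$LOCAL_DIR" "$BUCKET_URI"
}
```

Running `DRY_RUN=1 persist_to_bucket` prints the command without executing it, which is a cheap way to check the paths before attaching the functions to a VM's startup and shutdown scripts.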

Experiment

We used gsutil for our benchmarking since it is Google’s recommended command line utility to interact with GCS. Some constant parameters are as follows:

- Machine Type: n2-standard-4

- VM region: us-central1

- Bucket region: US multi region

- Disk Type and Size: Local SSD, 375 GB with NVMe interface

I created a 90 GB file and then split it into ~90k chunks of 1 MB each:

mkdir chunks/

fallocate -l 90G large_file

split -b 1M large_file chunks/chunk_

rm large_file
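To try the chunking step without committing 90 GB of disk, the same idea can be run at a smaller scale. This is a minimal sketch; the `make_chunks` helper and the `chunk_` prefix are illustrative names, not part of the original benchmark.

```shell
# make_chunks DIR TOTAL_MIB: create TOTAL_MIB chunks of 1 MiB each under DIR.
make_chunks() {
  dir="$1"
  total_mib="$2"
  mkdir -p "$dir"
  # dd is used instead of fallocate so the sketch also works on
  # filesystems that do not support preallocation.
  dd if=/dev/zero of="$dir/large_file" bs=1048576 count="$total_mib" 2>/dev/null
  # -b 1048576: 1 MiB per chunk; -a 5: five-letter suffixes, enough for
  # 26^5 (about 11.8 million) chunks.
  split -b 1048576 -a 5 "$dir/large_file" "$dir/chunk_"
  rm "$dir/large_file"
}
```

For example, `make_chunks /tmp/chunks-demo 8` should leave eight 1 MiB files named `chunk_aaaaa` through `chunk_aaaah` in `/tmp/chunks-demo`.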

Upload Performance

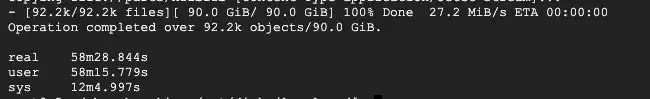

time gsutil -m cp -r chunks/ gs://searce-benchmarking-bucket/chunks/

It takes close to 1 hour to upload 92k objects over Google’s private network.
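As a sanity check on that figure, the average throughput follows from the bytes moved and the elapsed time. This is a small sketch, assuming the upload took exactly 3600 seconds (the benchmark only says "close to 1 hour") and using decimal megabytes; `throughput_mb_s` is an illustrative helper.

```shell
# throughput_mb_s BYTES SECONDS: average throughput in MB/s (1 MB = 10^6 bytes).
throughput_mb_s() {
  awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

# 90 GiB, the size that `fallocate -l 90G` allocates.
total_bytes=$((90 * 1024 * 1024 * 1024))

# Assumed elapsed time of one hour.
throughput_mb_s "$total_bytes" 3600
```

Under those assumptions this prints 26.8, which is in line with the roughly 27 MB/s quoted for gsutil uploads later in this post.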

Download Performance

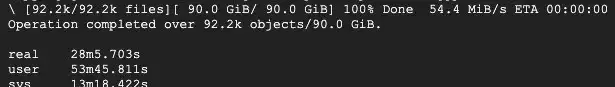

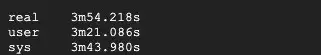

time gsutil -m cp -r gs://searce-benchmarking-bucket/chunks/ .

Download performance is much better than upload performance. However, the throughput is still embarrassingly low. According to the documentation, the ideal write throughput for Local SSD is 350 MB/s, so this is operating at about 1/7 of the potential.

At this point, it seemed that even though the solution might have been conceptually sound, it wasn’t very practical. That’s when I started looking through the Internet and stumbled upon s5cmd, an open source tool designed to significantly improve transfer performance with S3 buckets. According to its published benchmarks, s5cmd is 12x faster than aws-cli for uploads, and for downloads it can saturate a 40 Gbps link whereas aws-cli only reaches 375 MB/s. There are two reasons why s5cmd has superior performance:

- Written in a high-performance, concurrent language, Go, instead of Python. This means the application can take better advantage of multiple threads and is faster to run because it is compiled and not interpreted.

- Better utilisation of multiple TCP connections to transfer more data to and from the object store, resulting in higher throughput transfers.
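The effect of running many transfers at once can be illustrated locally with a fixed pool of parallel workers. This sketch is only an analogy: it copies files between local directories with `xargs -P` (assuming GNU or BSD findutils), it is not how s5cmd is implemented, and `parallel_copy` is a hypothetical helper.

```shell
# parallel_copy SRC_DIR DST_DIR WORKERS: copy every regular file in SRC_DIR
# to DST_DIR using up to WORKERS concurrent cp processes.
parallel_copy() {
  src="$1"
  dst="$2"
  workers="$3"
  mkdir -p "$dst"
  # -print0 / -0 keep file names with spaces intact.
  # -P sets the number of concurrent workers.
  # -n 16 passes up to 16 files to each cp invocation.
  # Inside sh -c, "$0" is the destination and "$@" are the files.
  find "$src" -maxdepth 1 -type f -print0 |
    xargs -0 -n 16 -P "$workers" sh -c 'cp "$@" "$0"' "$dst"
}
```

Timing `parallel_copy chunks/ /tmp/copy 1` against a higher worker count gives a rough feel for how much concurrency alone can change the elapsed time when there are many small files.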

Since gsutil is also written in Python, maybe that is the reason for its poor performance, and if I could use s5cmd, my transfers should become significantly faster. So I configured the S3 interoperability settings for GCS. The Interoperability API allows Google Cloud Storage to interoperate with tools written for other cloud storage systems. Now let’s see the data transfer speeds with s5cmd.
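For reference, pointing s5cmd at Cloud Storage typically comes down to supplying HMAC credentials and the Cloud Storage XML API endpoint. This is a minimal sketch with placeholder values: the key values are made up, and the `s5cmd_gcs` wrapper with its `DRY_RUN` switch is an illustrative helper, not part of s5cmd.

```shell
# HMAC key pair created in the Cloud Storage Interoperability settings.
# The values below are placeholders, not real credentials.
export AWS_ACCESS_KEY_ID="GOOG1EXAMPLEACCESSID"
export AWS_SECRET_ACCESS_KEY="example-secret"

# Cloud Storage's S3-compatible (XML API) endpoint.
GCS_ENDPOINT="https://storage.googleapis.com"

# s5cmd_gcs ARGS...: run s5cmd against Cloud Storage.
# With DRY_RUN=1 the command is printed instead of executed.
s5cmd_gcs() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "s5cmd --endpoint-url $GCS_ENDPOINT $*"
  else
    s5cmd --endpoint-url "$GCS_ENDPOINT" "$@"
  fi
}
```

With this in place, `DRY_RUN=1 s5cmd_gcs ls s3://example-bucket/` shows the full command line that would be run. s5cmd also reads the endpoint from the `S3_ENDPOINT_URL` environment variable, which avoids repeating the flag.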

Upload Performance

time s5cmd cp -c=80 chunks/ s3://searce-benchmarking-bucket/chunks/

Wow!! Almost 8.5x improvement over gsutil with throughput of about 214 MB/s compared to around 27 MB/s with gsutil.

Download Performance

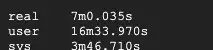

time s5cmd cp -c=80 's3://searce-benchmarking-bucket/chunks/*' chunks/

As expected, the download was 7.2x faster than gsutil, with a throughput of a whopping 384 MB/s, saturating the Local SSD’s write throughput limit.

Conclusion

Google’s official tool, gsutil, is poorly optimised for high-throughput data transfer between a GCE VM and Cloud Storage, most likely because of the language it is written in. Instead, consider using s5cmd, an open source alternative that works with both AWS S3 and GCS.

Since s5cmd was designed with S3 in mind and treats S3 as a first-class citizen, there may be scenarios where it is not the right tool and gsutil performs better. But for a broad range of data transfer use cases, it can significantly reduce your transfer time.

References

- Use fallocate Command to Create Files of Specific Size in Linux

- 11 Useful Split Command Examples for Linux Systems

- S5cmd for High Performance Object Storage

- Optimizing your Cloud Storage performance: Google Cloud Performance Atlas

- Google Cloud / Fully migrate from Amazon S3 to Cloud Storage

The original article was published on Medium.