By Kishore Jagannath, Nov 10, 2022

Kubernetes has come a long way in enterprise adoption. With the huge growth of microservices, enterprises are looking for a clean way to deploy their microservice applications in the Kubernetes ecosystem. Multi-cluster deployments enable not only clean separation but also better disaster recovery, autoscaling and identity management. In this three-part series, we will discuss in depth how to achieve native multi-cluster deployments with GKE. In this initial post (part 1), I will explain what a multi-cluster deployment is, why multi-cluster deployments are needed, the different deployment models and the challenges. In part 2 (coming soon), I will explain multi-cluster communication via Service Export, and in part 3 (coming soon) we will look at MultiClusterIngress, which enables Kubernetes services and ingress to be deployed across multiple clusters.

Note: This series focuses exclusively on GKE capabilities. Other solutions that provide multi-cluster communication, such as a “Service Mesh”, are not discussed in this blog series.

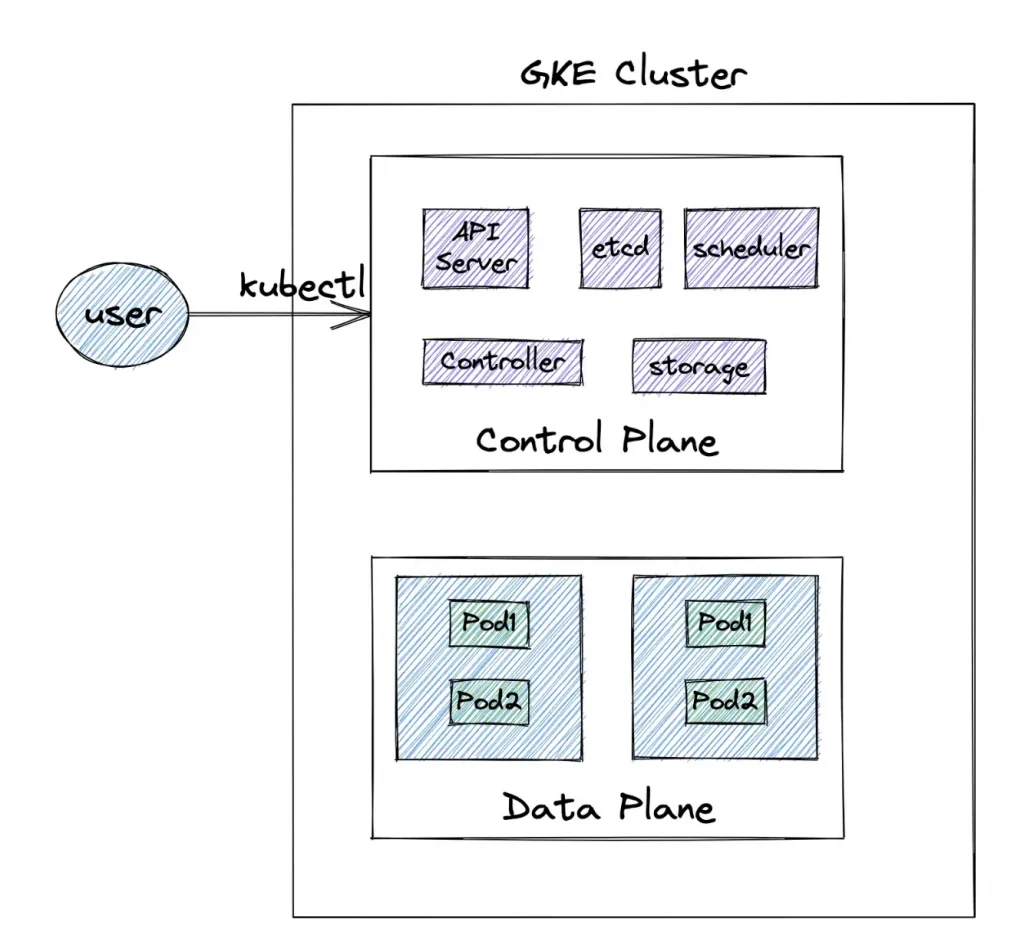

GKE Cluster

A GKE cluster consists of a control plane and a data plane. The control plane is fully managed by Google Cloud and runs the Kubernetes control plane components: the Kubernetes API server, controllers, etcd, the scheduler, etc. The data plane, on the other hand, consists of the Kubernetes worker nodes, where enterprises deploy their application workloads.

Why Multiple Clusters

High Availability and Failover: GKE clusters can be either zonal or spread across multiple zones within a region. To insulate against regional failures, enterprises may want to deploy application instances and scale them across different regions. With multi-cluster support, applications can be deployed to clusters in different regions and serve end users in different geographies.

Data Gravity: GKE clusters can be deployed in the same region as the database that holds the application's data. This reduces network latency and database round-trip times.

Isolation: Different GKE clusters can be created to isolate different environments, for example separate clusters for Development, Production, Performance, UAT, etc. Separate clusters can also be created for business units or Scrum teams.

Access Management: Multiple GKE clusters simplify access management. For example, IAM policies can grant individual teams access to specific GKE clusters. This allows teams to own their GKE clusters and manage them independently.

Scale: Applications can extend beyond the limits of a single cluster or project by being deployed across multiple clusters, in the same project or in different projects.

Maintenance: Kubernetes version upgrades can be performed on one cluster at a time, so only the services running in that cluster are affected while services running in other clusters are shielded. This lowers the risk of failures during maintenance events. Maintenance events can also be planned around the nature of the applications deployed in each cluster.

Compliance: Specific application workloads may have to run in specific regions to meet data compliance requirements. These applications can be deployed to clusters created in those regions.

Challenges

Management: Kubernetes administrators take on the additional overhead of managing multiple clusters instead of a single one. It should be possible to manage these clusters from a single pane of glass.

East-West Communication: Application services in different clusters should be able to communicate east-west across cluster boundaries. For example, an order service in cluster A may need to call a payment service in cluster B.

North-South Communication: It should be possible to scale services across cluster boundaries and route external north-south traffic (through a load balancer) to services residing in multiple clusters.

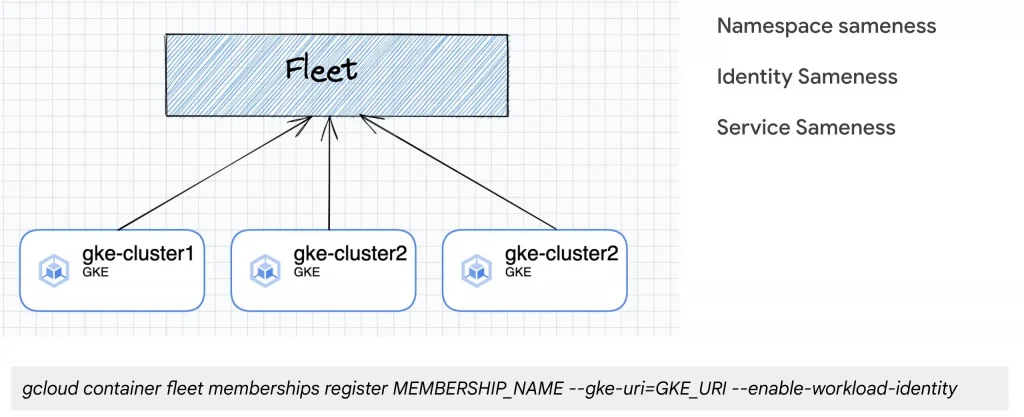

Fleet

Fleet is Google Kubernetes Engine’s solution to the challenges of a multi-cluster architecture. A fleet is a logical grouping of the clusters registered to it. A fleet provides:

Namespace sameness: Namespaces in a fleet are allocated at the fleet level, not the cluster level, so namespaces with the same name in different member clusters are treated as the same namespace. Many of the components that operate on namespaces respect namespace sameness.

Service sameness: Services with the same name in the same namespace across clusters are considered to be the same service. Within a fleet, it is also possible to scale and load balance deployments across cluster boundaries.

Identity sameness: Workloads deployed in the same namespace across the fleet's clusters share the same identity, both within and outside the cluster. In particular, this applies to the workload identity used to access Google Cloud services and to the identities Anthos Service Mesh uses to restrict service-to-service access.

In GKE, a project is designated as the fleet host project, and clusters in the same project or in other projects are registered to the fleet. The clusters registered to a fleet should host services that are related to each other, for example multiple BU- or team-specific GKE clusters belonging to the same environment (Prod, Dev, etc.). Ideally, these clusters should have a single administrator.
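To make the host project and the sameness rules more concrete, here is a toy Python model. It is not the GKE or Fleet API: the `Fleet`, `Cluster` and `Service` classes, as well as the project, cluster and namespace names, are hypothetical. It only shows how a fleet with member clusters from several projects collapses same-named namespaces into one fleet-wide namespace, and same-named services in the same namespace into one logical service.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Service:
    namespace: str
    name: str


@dataclass
class Cluster:
    name: str
    project: str  # member clusters may live in different GCP projects
    region: str
    services: list[Service] = field(default_factory=list)


@dataclass
class Fleet:
    host_project: str  # the project designated as fleet host
    members: list[Cluster] = field(default_factory=list)

    def register(self, cluster: Cluster) -> None:
        # Registration does not care which project the cluster lives in.
        self.members.append(cluster)

    def namespaces(self) -> set[str]:
        # Namespace sameness: one fleet-wide namespace per name,
        # no matter how many member clusters define it.
        return {svc.namespace for c in self.members for svc in c.services}

    def logical_services(self) -> dict[tuple[str, str], list[str]]:
        # Service sameness: (namespace, name) identifies one logical service;
        # the value lists the member clusters that back it.
        backing: dict[tuple[str, str], list[str]] = defaultdict(list)
        for c in self.members:
            for svc in c.services:
                backing[(svc.namespace, svc.name)].append(c.name)
        return dict(backing)


# Hypothetical fleet: two team projects registered to one host project.
fleet = Fleet(host_project="fleet-host")
fleet.register(Cluster("cluster-a", "team-orders", "us-central1",
                       [Service("shop", "orders"), Service("shop", "payments")]))
fleet.register(Cluster("cluster-b", "team-payments", "europe-west1",
                       [Service("shop", "payments")]))
print(fleet.logical_services())
# {('shop', 'orders'): ['cluster-a'], ('shop', 'payments'): ['cluster-a', 'cluster-b']}
```

In this model, `shop/payments` is a single logical service backed by two clusters in two projects, which is what lets a fleet load balance it across cluster boundaries.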

Multi-cluster deployment models

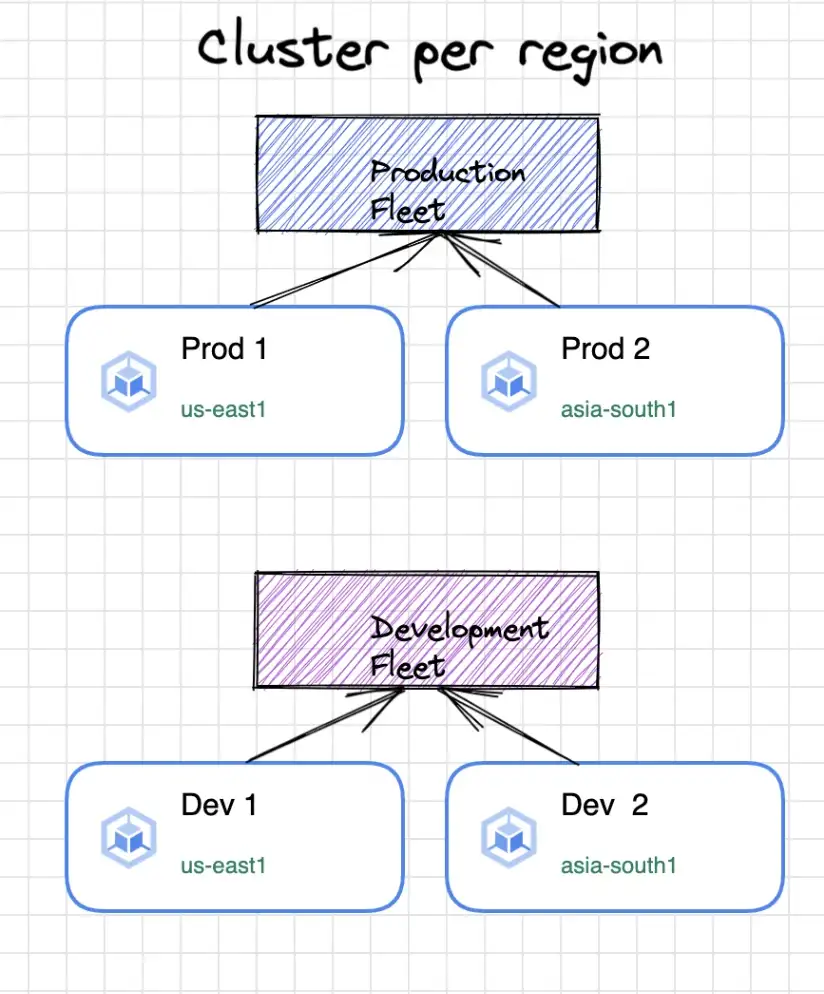

Cluster per region:

Create clusters in more than one region and scale your services across these regions. This model provides resilience against regional failures and also lets you deploy your applications closer to end users. Typically, separate fleets are also created for different environments such as “Dev, UAT, Prod” so they can be managed independently.
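The resilience argument can be illustrated with a deliberately simplified routing policy in Python: prefer a healthy cluster in the user's region, and fail over to a healthy cluster in another region when the local one is unavailable. This is a hypothetical sketch, not how GKE or MultiClusterIngress actually selects backends; `RegionalCluster`, `pick_cluster` and the cluster names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class RegionalCluster:
    name: str
    region: str
    healthy: bool = True


def pick_cluster(clusters: list[RegionalCluster], user_region: str) -> RegionalCluster:
    """Hypothetical policy: serve from the user's region if possible,
    otherwise fail over to any healthy cluster in another region."""
    healthy = [c for c in clusters if c.healthy]
    if not healthy:
        raise RuntimeError("no healthy cluster in any region")
    for c in healthy:
        if c.region == user_region:
            return c  # closest to the end user
    # Regional failure (or no local cluster): fall back to another region.
    return healthy[0]


clusters = [
    RegionalCluster("prod-us", "us-central1"),
    RegionalCluster("prod-eu", "europe-west1"),
]
print(pick_cluster(clusters, "europe-west1").name)  # prod-eu
clusters[1].healthy = False  # simulate an outage in europe-west1
print(pick_cluster(clusters, "europe-west1").name)  # prod-us
```

A real load balancer would also weigh latency and capacity; the point here is only that a second region gives the policy somewhere to fail over to.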

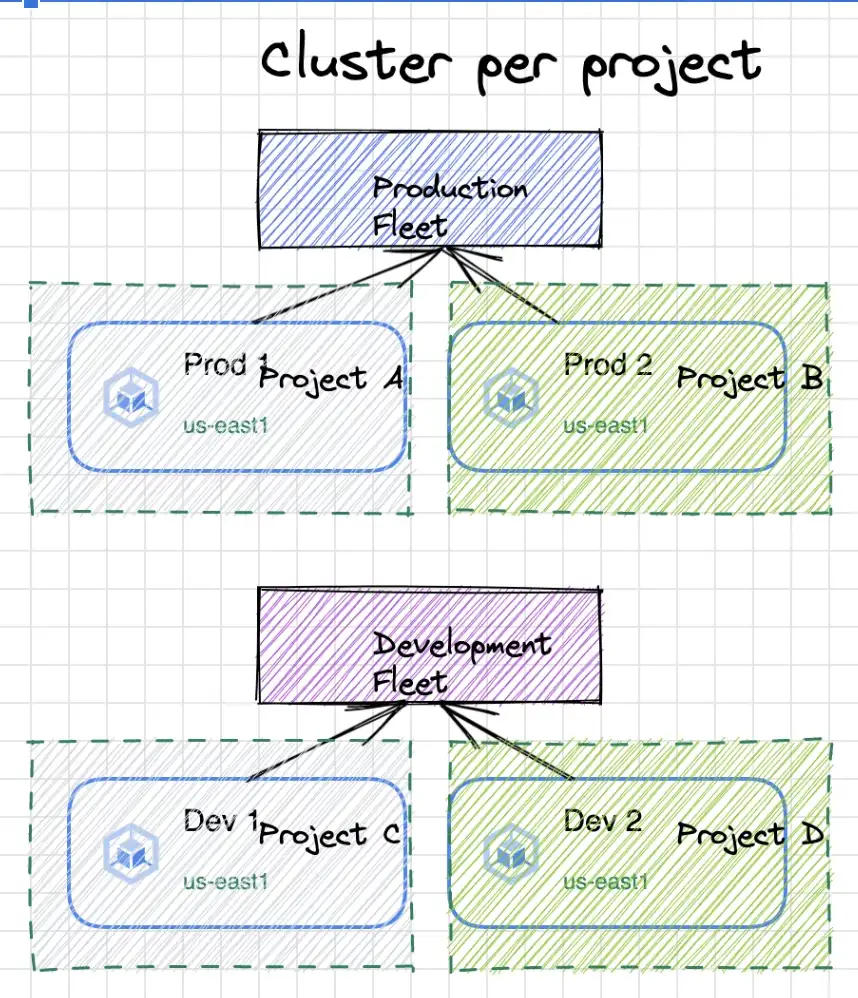

Cluster per project:

If an enterprise has multiple independent BUs and teams, each with its own GCP project, a separate cluster can be created for each team within that team's project. This provides clean project-level isolation between GKE clusters and better “Identity and access management”.

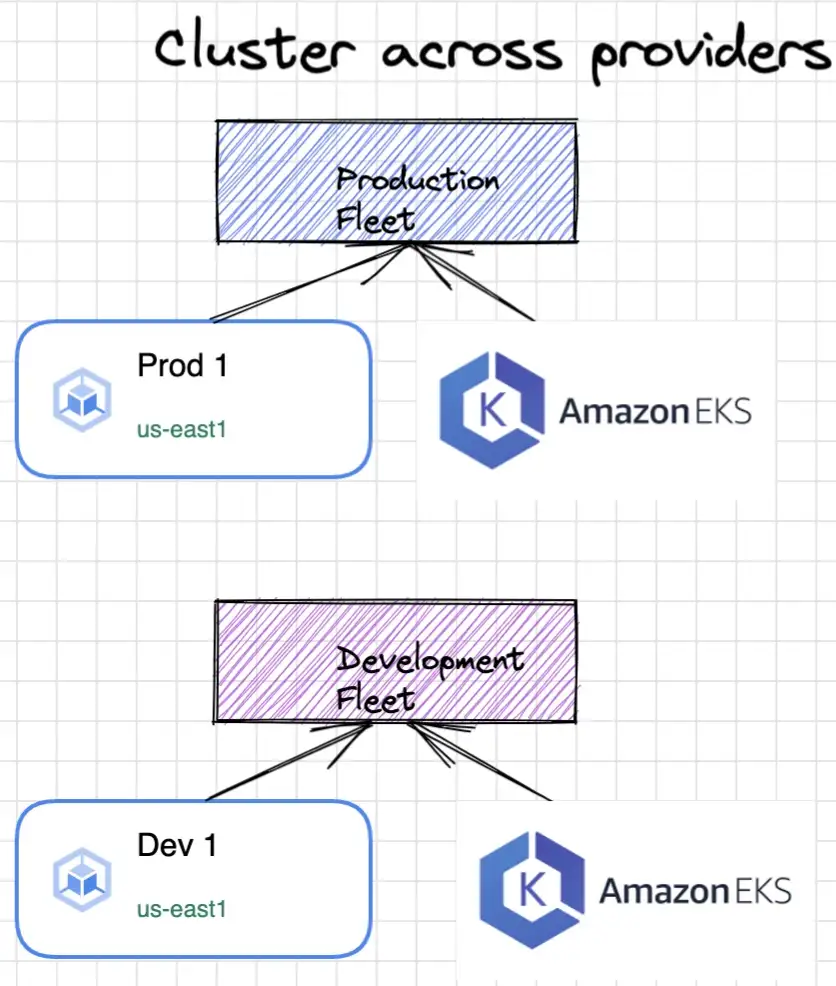

Clusters across cloud providers:

Deploy clusters across multiple cloud providers and manage them with GKE. This model facilitates multi-cloud deployments in an organisation that has adopted a multi-cloud strategy. Deployment across cloud providers requires Anthos capabilities and will not be covered in this blog series.

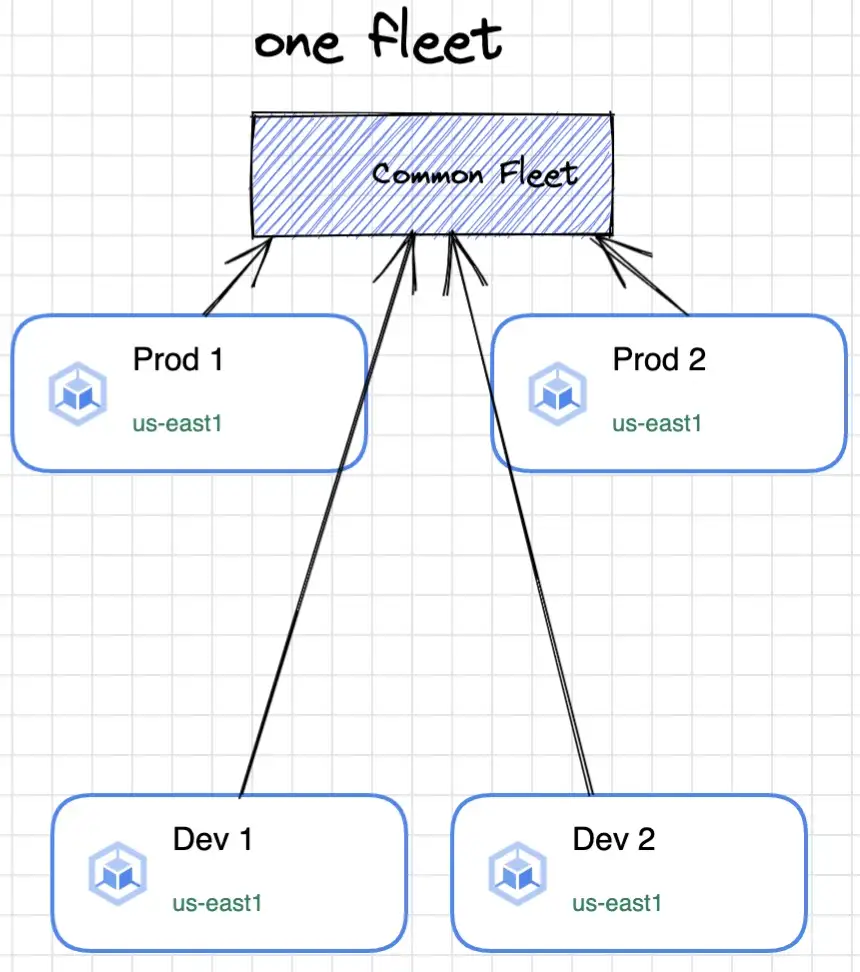

Clusters managed by a single fleet:

While this is generally an anti-pattern, it is possible to manage all clusters across an organisation's environments with a single fleet. Since a fleet provides namespace, service and identity sameness, care should be taken to isolate the environments (Dev, Prod, UAT, etc.) by using different namespaces.
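Because namespace sameness is fleet-wide, a `payments` namespace in a Dev cluster and a `payments` namespace in a Prod cluster of the same fleet would be treated as one namespace. A minimal Python sketch of a pre-flight check follows. It assumes you already have an inventory mapping each cluster to its environment and to its namespaces; the function name and all cluster and namespace names are hypothetical, and this is not a GKE feature.

```python
from collections import defaultdict


def shared_namespaces(cluster_env: dict[str, str],
                      cluster_namespaces: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return namespaces used by clusters of more than one environment.

    Under namespace sameness, each such namespace would become a single
    fleet-wide namespace spanning those environments.
    """
    envs_by_ns: dict[str, set[str]] = defaultdict(set)
    for cluster, namespaces in cluster_namespaces.items():
        for ns in namespaces:
            envs_by_ns[ns].add(cluster_env[cluster])
    # Sort environment names so the result is deterministic.
    return {ns: sorted(envs) for ns, envs in envs_by_ns.items() if len(envs) > 1}


cluster_env = {"gke-dev": "dev", "gke-prod": "prod"}
cluster_namespaces = {
    "gke-dev": {"payments", "payments-dev"},
    "gke-prod": {"payments", "payments-prod"},
}
print(shared_namespaces(cluster_env, cluster_namespaces))
# {'payments': ['dev', 'prod']}
```

Renaming the shared namespace per environment (for example `payments-dev` and `payments-prod`) makes the check return an empty dict, which is the isolation the single-fleet model depends on.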

Conclusion

GKE Fleet helps overcome multiple challenges in Kubernetes multi-cluster deployments. A fleet enables management of its clusters from a single pane of glass and facilitates well-governed communication and scaling across them. Multi-cluster deployments enable better disaster recovery and failover, and provide cleaner separation across multiple BUs or teams within an organisation. In the next two parts of this series (coming soon :-)), I will discuss two major capabilities of GKE Fleet, Service Export and Multi-cluster Ingress, and how they can be used to overcome multi-cluster challenges.

The original article was published on Medium.